Stereo Vision Systems

Unlock 3D depth perception for your Automated Guided Vehicles (AGVs) by mimicking human binocular vision. Stereo systems provide cost-effective, high-resolution spatial awareness essential for navigation in unstructured environments.

Core Concepts

Binocular Baseline

The physical distance between the two camera sensors. A wider baseline increases depth accuracy at long ranges but increases the minimum sensing distance.

Disparity Mapping

The calculation of the pixel shift of an object between the left and right images. Higher disparity indicates objects are closer to the robot.

Point Cloud Generation

Converting disparity maps into a 3D set of data points (X, Y, Z coordinates) representing the external surface of objects in the robot's path.
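
As a rough illustration of that conversion, the sketch below back-projects a disparity map into X, Y, Z points using the pinhole model. The function name, the focal length, the baseline, and the principal point (`cx`, `cy`) are illustrative assumptions, and the images are assumed to be rectified; a production pipeline would normally take these values from the camera's calibration.

```python
import numpy as np

def disparity_to_point_cloud(disparity, focal_length_px, baseline_m, cx, cy):
    """Back-project a disparity map (pixels) into an (N, 3) array of X, Y, Z points in meters."""
    disparity = np.asarray(disparity, dtype=np.float64)
    rows, cols = np.indices(disparity.shape)
    valid = disparity > 0                      # zero or negative disparity means "no match"
    z = focal_length_px * baseline_m / disparity[valid]
    x = (cols[valid] - cx) * z / focal_length_px
    y = (rows[valid] - cy) * z / focal_length_px
    return np.column_stack((x, y, z))

# Tiny 2x2 disparity map with one invalid pixel (hypothetical values).
disparity = np.array([[0.0, 50.0],
                      [25.0, 50.0]])
cloud = disparity_to_point_cloud(disparity, focal_length_px=500.0,
                                 baseline_m=0.1, cx=0.5, cy=0.5)
print(cloud.shape)   # one row per valid pixel
print(cloud)
```

Pixels without a valid match are simply dropped here; some pipelines keep them as NaN so the cloud stays organized as an image-shaped grid.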

Epipolar Geometry

The geometric constraint that reduces the search for matching points between the two images from a 2D search over the whole image to a 1D search along epipolar lines.
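
To make the 1D search concrete, here is a minimal sketch that assumes the pair is already rectified, so each epipolar line coincides with an image row. It uses a simple sum-of-absolute-differences (SAD) window cost, which is only one of many possible matching costs; the function name and parameters are hypothetical.

```python
import numpy as np

def match_along_scanline(left, right, row, col, half_window=2, max_disparity=16):
    """Return the disparity with the lowest SAD cost for one left-image pixel."""
    h = half_window
    patch = left[row - h:row + h + 1, col - h:col + h + 1].astype(np.float64)
    best_d, best_cost = 0, np.inf
    for d in range(max_disparity + 1):
        c = col - d                  # same row in the right image, shifted left by d
        if c - h < 0:                # window would leave the image
            break
        candidate = right[row - h:row + h + 1, c - h:c + h + 1].astype(np.float64)
        cost = np.abs(patch - candidate).sum()
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

# Synthetic test pair: random texture, left view shifted by a known 5 px.
rng = np.random.default_rng(0)
right = rng.integers(0, 256, size=(20, 60))
true_disparity = 5
left = np.roll(right, true_disparity, axis=1)
estimated = match_along_scanline(left, right, row=10, col=30)
print(estimated, true_disparity)
```

Only one row is scanned per pixel, which is why rectification is usually done before matching.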

Active Stereo

Systems that project a textured infrared (IR) pattern onto the scene to assist in matching points on featureless surfaces like blank walls or polished floors.

Calibration

The critical process of determining the intrinsic parameters of each camera (focal length, principal point, lens distortion) and the extrinsic parameters between them (rotation, translation) to ensure measurement accuracy.
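
The sketch below shows how these parameters are used once they are known: an intrinsic matrix `K` and an extrinsic rotation `R` and translation `t` project one 3D point into both cameras. All numbers are made-up example values for an ideal, distortion-free pair, not the output of a real calibration.

```python
import numpy as np

# Hypothetical intrinsics: 700 px focal length, principal point at (320, 240).
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])

# Hypothetical extrinsics: right camera parallel to the left one, 0.12 m to its right.
R = np.eye(3)
t = np.array([-0.12, 0.0, 0.0])

def project(point, K, R=np.eye(3), t=np.zeros(3)):
    """Project a 3D point (left-camera frame, meters) to pixel coordinates (u, v)."""
    p_cam = R @ point + t            # move the point into this camera's frame
    uvw = K @ p_cam                  # apply the pinhole intrinsics
    return uvw[:2] / uvw[2]

point = np.array([0.0, 0.0, 2.0])    # 2 m straight ahead of the left camera
uv_left = project(point, K)
uv_right = project(point, K, R, t)
disparity = uv_left[0] - uv_right[0]
print(uv_left, uv_right, disparity)
```

With an ideal parallel pair the two projections share the same image row, and the horizontal offset equals focal length times baseline divided by depth. Errors in `K`, `R`, or `t` break that relationship, which is why calibration quality directly limits depth accuracy.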

How It Works: Triangulation

Stereo vision operates on the principle of triangulation. Just as your brain processes slight differences between what your left and right eyes see to perceive depth, a stereo camera system captures two simultaneous images from slightly different vantage points.

The onboard processor identifies corresponding feature points in both images. By measuring the horizontal offset (disparity) between each matched pair and knowing the cameras' focal length and baseline, the system calculates the Z-depth of every pixel for which a match is found. Depth is inversely proportional to disparity: Z = (focal length × baseline) / disparity.
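
A minimal sketch of that calculation, assuming a rectified pair, a focal length expressed in pixels, and a baseline in meters. The function name and the numeric values are illustrative only.

```python
import numpy as np

def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Z = f * B / d; zero or negative disparities (no match) become NaN."""
    d = np.asarray(disparity_px, dtype=np.float64)
    depth = np.full(d.shape, np.nan)
    valid = d > 0
    depth[valid] = focal_length_px * baseline_m / d[valid]
    return depth

# Example values: 700 px focal length, 0.12 m baseline.
depths = depth_from_disparity([84.0, 42.0, 8.4, 0.0],
                              focal_length_px=700.0, baseline_m=0.12)
print(depths)   # larger disparity -> smaller depth; the last entry has no match
```

Halving the disparity doubles the depth, so distant objects occupy only a few pixels of disparity and are measured less precisely.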

Unlike LiDAR, which scans the scene using laser time-of-flight, stereo vision provides dense depth maps that are pixel-aligned with the color (RGB) image, allowing AI models to know not only where an object is but also what it is.

Real-World Applications

Logistics & Warehousing

Used for pallet pocket detection and obstacle avoidance. Stereo cameras allow forklifts to align perfectly with racking systems even when lighting conditions vary.

Last-Mile Delivery

Sidewalk robots rely on stereo vision to detect curbs, pedestrians, and uneven terrain. Passive stereo works exceptionally well in bright sunlight where IR sensors may fail.

Agricultural Robotics

In crop fields, texture is abundant but geometry is complex. Stereo vision helps robots navigate between crop rows and identify ripe produce for automated harvesting.

Service & Hospitality

Wait-staff robots use stereo vision to detect tables, chairs, and moving people in dynamic environments, ensuring safe interaction without physical contact.

Frequently Asked Questions

What is the main advantage of Stereo Vision over LiDAR for AGVs?

The primary advantages are cost and semantic understanding. Stereo cameras are generally much cheaper than 3D LiDAR sensors and provide rich color data aligned with depth, allowing for object classification (e.g., distinguishing between a person and a cardboard box).

Does Stereo Vision work in complete darkness?

Standard passive stereo vision requires ambient light to see features. However, "Active Stereo" systems project an infrared pattern onto the scene, allowing them to function in total darkness by creating artificial texture for the cameras to read.

How does the "baseline" affect the robot's performance?

The baseline is the distance between the two lenses. A wider baseline provides better depth accuracy at longer distances but increases the "blind spot" directly in front of the robot. A narrow baseline allows for close-range inspection but loses precision further away.
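
The size of that blind spot can be estimated from the triangulation relation: the closest measurable depth is the focal length times the baseline divided by the largest disparity the matcher searches. The sketch below assumes a 700 px focal length and a 128 px disparity search range, both hypothetical values.

```python
def min_depth_m(focal_length_px, baseline_m, max_disparity_px):
    """Closest depth the matcher can report: Z_min = f * B / d_max."""
    return focal_length_px * baseline_m / max_disparity_px

FOCAL_LENGTH_PX = 700.0     # assumed focal length
MAX_DISPARITY_PX = 128.0    # assumed disparity search range of the matcher

baselines_m = [0.05, 0.12, 0.30]
blind_spots = [min_depth_m(FOCAL_LENGTH_PX, b, MAX_DISPARITY_PX) for b in baselines_m]
for b, z_min in zip(baselines_m, blind_spots):
    print(f"baseline {b:.2f} m -> minimum depth {z_min:.2f} m")
```

The minimum depth scales linearly with the baseline, so a sixfold wider baseline pushes the near limit six times farther from the robot unless the disparity search range is enlarged, which costs more computation.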

What are the computational requirements?

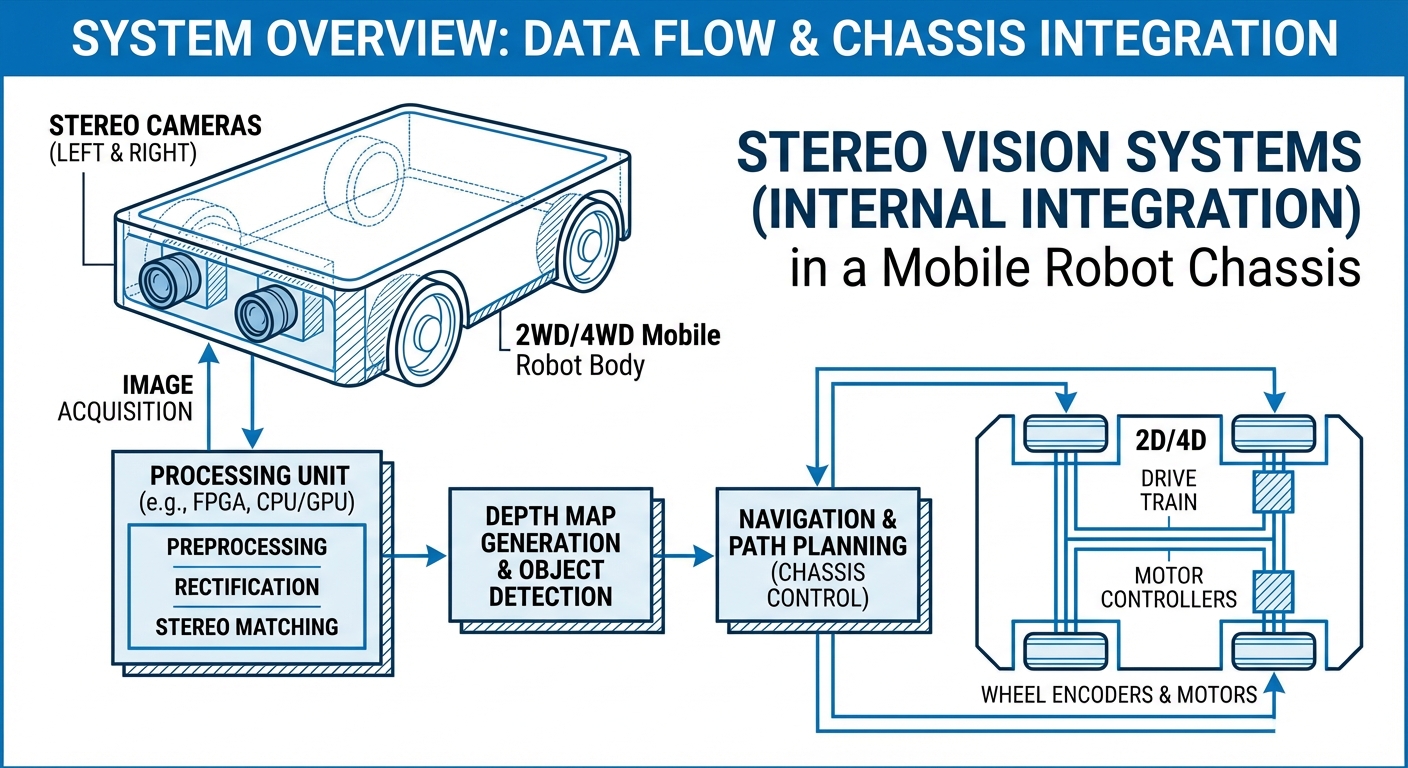

Stereo matching is computationally expensive. While modern CPUs can handle it at reduced resolution or frame rate, most industrial AGV applications use cameras with onboard FPGAs or offload the work to an embedded GPU module (such as an NVIDIA Jetson) to compute disparity maps in real time with low latency.

Why does stereo vision struggle with white walls?

Stereo algorithms rely on matching unique "features" (edges, textures, corners) between the left and right images. A plain white wall has no unique features to match, causing the depth calculation to fail unless an active pattern projector is used.
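
The failure is easy to reproduce with a toy matcher. The sketch below computes a sum-of-absolute-differences cost for each candidate disparity, once on a randomly textured synthetic image and once on a uniform "white wall" image. The cost function and all values are illustrative assumptions, not any particular camera's algorithm.

```python
import numpy as np

def sad_costs(left, right, row, col, half_window=2, max_disparity=8):
    """SAD matching cost for each candidate disparity 0..max_disparity."""
    h = half_window
    patch = left[row - h:row + h + 1, col - h:col + h + 1].astype(np.float64)
    return np.array([
        np.abs(patch - right[row - h:row + h + 1, col - d - h:col - d + h + 1]).sum()
        for d in range(max_disparity + 1)
    ])

rng = np.random.default_rng(1)
textured_right = rng.integers(0, 256, size=(11, 40))
textured_left = np.roll(textured_right, 3, axis=1)   # known 3 px disparity
blank = np.full((11, 40), 200)                       # uniform wall in both views

textured_costs = sad_costs(textured_left, textured_right, row=5, col=20)
blank_costs = sad_costs(blank, blank, row=5, col=20)
print(textured_costs)   # a single clear minimum at the true disparity
print(blank_costs)      # every candidate ties, so no disparity can be chosen
```

A projected pattern works by turning the second case into the first: it paints texture onto the wall so the cost curve regains a distinct minimum.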

How often does a stereo system need calibration?

Ideally, factory calibration lasts a long time, but mechanical shock or vibration (common in AGVs) can misalign lenses. Robust industrial stereo cameras are built on rigid metal frames to minimize this, but recalibration may be needed after collisions.

What is the typical range of a stereo camera?

This depends on the baseline and resolution. A small robotics camera might work from 0.2 m to 5 m. Larger-baseline systems for outdoor rovers can measure depth out to 20 m or 30 m, though depth error grows quadratically with distance.
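
The quadratic growth follows from differentiating Z = f × B / d: a small disparity error Δd produces a depth error of roughly Z² / (f × B) × Δd. The sketch below evaluates that approximation for an assumed 700 px focal length, 0.12 m baseline, and quarter-pixel matching error; all three values are hypothetical.

```python
def depth_error_m(depth_m, focal_length_px, baseline_m, disparity_error_px=0.25):
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px

FOCAL_LENGTH_PX = 700.0   # assumed
BASELINE_M = 0.12         # assumed

ranges_m = [1.0, 5.0, 10.0, 20.0]
errors_m = [depth_error_m(z, FOCAL_LENGTH_PX, BASELINE_M) for z in ranges_m]
for z, err in zip(ranges_m, errors_m):
    print(f"range {z:5.1f} m -> approx. depth error {err:.3f} m")
```

Doubling the range quadruples the expected error, while doubling the baseline or the focal length halves it.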

Can stereo vision be used for SLAM?

Yes, Visual SLAM (vSLAM) is a common application. Stereo cameras provide the 3D geometry needed to map an environment while simultaneously tracking the robot's movement through that map. When the camera is fused with an IMU, the motion-tracking component is often referred to as stereo-inertial odometry.

How does sunlight affect stereo vision?

Passive stereo vision generally outperforms Time-of-Flight and structured light sensors in direct sunlight. Those sensors depend on detecting their own emitted IR light, which the sun's IR radiation can overwhelm, whereas passive stereo only needs ambient light. This makes stereo a preferred choice for outdoor delivery robots.

Is software integration difficult?

Most modern stereo cameras come with SDKs for C++ and Python and have native support in ROS (Robot Operating System) and ROS 2. Packages like `stereo_image_proc` make generating disparity maps and point clouds relatively plug-and-play once the cameras are calibrated.