Sim-to-Real Transfer

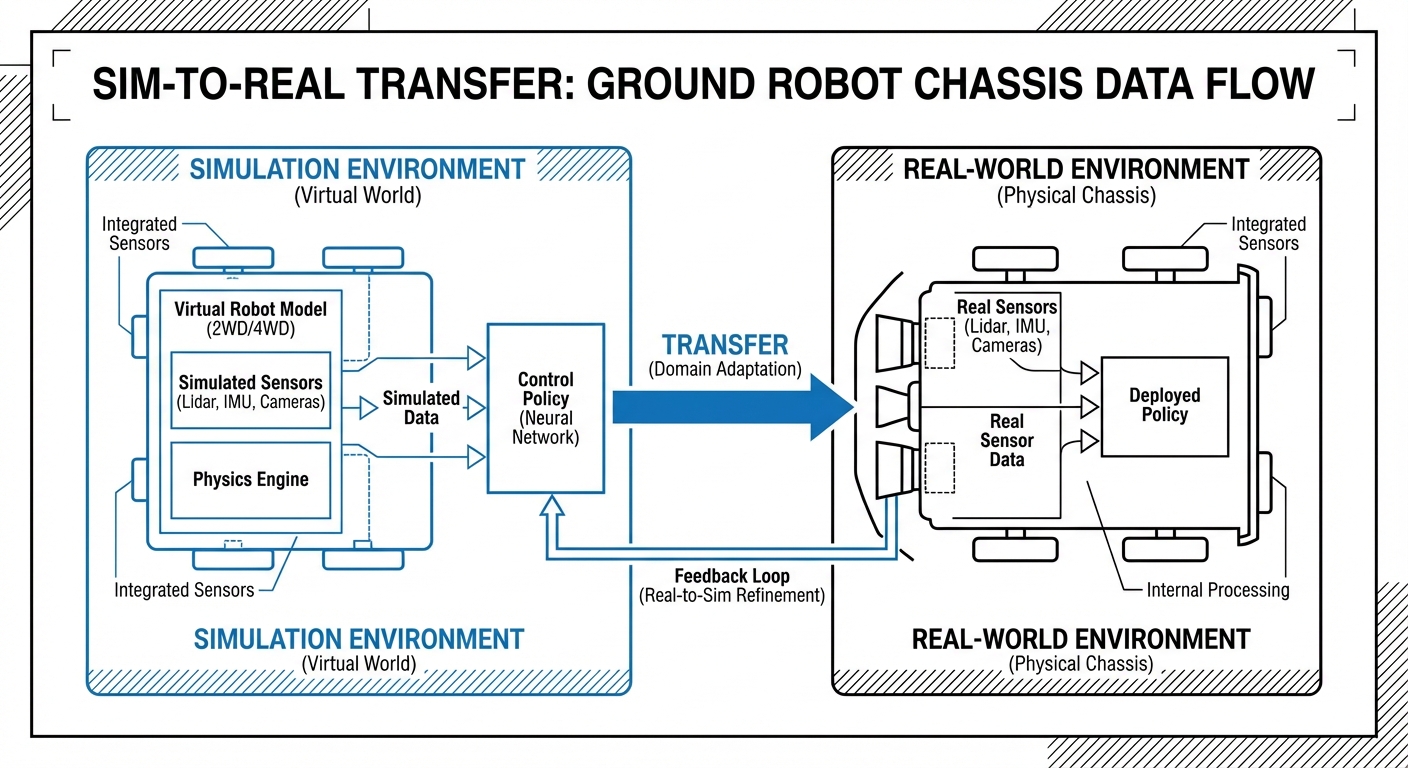

Bridge the "Reality Gap" by training automated guided vehicles (AGVs) and other autonomous mobile robots in high-fidelity simulation before deploying them to physical environments. This process improves safety, reduces hardware wear, and accelerates the learning of complex navigation policies.

Core Concepts

The Reality Gap

The subtle discrepancies between physics engines and the real world (friction, sensor noise, latency) that cause simulated policies to fail on physical hardware.

Domain Randomization

Deliberately varying simulation parameters (colors, lighting, friction coefficients) so the real world appears to the robot as just another variation of the simulation.

System Identification

The process of tuning the simulation's mathematical model to match the physical AGV's properties, such as mass distribution, wheel slippage, and motor torque curves.
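As a minimal sketch of the idea, assume the drivetrain is approximated by a first-order model `v[k+1] = a*v[k] + b*u[k]`, where `v` is the logged wheel speed and `u` is the motor command. This model, the function names, and the synthetic "log" are illustrative assumptions, not a description of any particular AGV. The parameters `a` and `b` are fitted to the log by least squares, and the fitted values would then be copied into the simulator's drive model.

```python
def simulate_speed(a, b, commands, v0=0.0):
    """Roll out v[k+1] = a*v[k] + b*u[k]; returns len(commands) + 1 speeds."""
    speeds = [v0]
    for u in commands:
        speeds.append(a * speeds[-1] + b * u)
    return speeds


def fit_first_order(speeds, commands):
    """Least-squares estimate of (a, b) from logged speeds and commands."""
    if len(speeds) != len(commands) + 1:
        raise ValueError("expected one more speed sample than commands")
    prev, nxt = speeds[:-1], speeds[1:]
    # Sums that form the 2x2 normal equations of the linear regression.
    s_vv = sum(v * v for v in prev)
    s_vu = sum(v * u for v, u in zip(prev, commands))
    s_uu = sum(u * u for u in commands)
    s_vy = sum(v * y for v, y in zip(prev, nxt))
    s_uy = sum(u * y for u, y in zip(commands, nxt))
    det = s_vv * s_uu - s_vu * s_vu
    if abs(det) < 1e-12:
        raise ValueError("log does not vary enough to identify both parameters")
    a = (s_vy * s_uu - s_uy * s_vu) / det
    b = (s_uy * s_vv - s_vy * s_vu) / det
    return a, b


# Stand-in for a real log: a square-wave command applied to a known model.
commands = [1.0 if (k // 10) % 2 == 0 else 0.0 for k in range(100)]
logged_speeds = simulate_speed(0.9, 0.05, commands)
a_hat, b_hat = fit_first_order(logged_speeds, commands)
print(f"a = {a_hat:.4f}, b = {b_hat:.4f}")
```

A real log contains noise, so the estimates would only approximate the true values, and properties such as mass distribution or wheel slippage usually need richer models than this one.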

Visual Adaptation

Using techniques like GANs (Generative Adversarial Networks) to translate synthetic simulation images into realistic-looking video feeds for vision-based navigation.

Sensor Noise Injection

Adding probabilistic noise to simulated LIDAR and odometry data to prevent the robot from over-relying on "perfect" data that doesn't exist in the real world.

Zero-Shot Transfer

The ultimate goal: a policy trained entirely in simulation performs successfully on its first real-world attempt, without any additional fine-tuning.

How It Works

The Sim-to-Real pipeline begins by creating a "Digital Twin"—a physics-accurate virtual representation of both the AGV and its operating environment (e.g., a warehouse floor).

Inside this simulation, Reinforcement Learning (RL) agents control the robot and attempt navigation tasks far faster than physical hardware allows, potentially millions per hour when many simulated robots run in parallel. The simulation environment is aggressively randomized (changing floor friction, obstacle mass, and lighting) to force the neural network to learn robust, generalizable behaviors rather than memorizing a specific map.

Once the policy converges, the trained neural network is extracted and deployed onto the physical AGV's onboard computer. Because the network has already seen conditions harsher than those it will typically meet in reality, it is far more likely to navigate the physical world stably.

Real-World Applications

High-Speed Logistics

Training AGVs to handle cornering forces at high speeds in warehouses without tipping over. Simulation allows testing limits that would be dangerous to attempt physically.
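For a rough sense of where that limit lies, the quasi-static tip-over condition for a rigid vehicle on flat ground can be worked out directly: tipping begins when the lateral acceleration v²/r exceeds g·(track/2)/h. The sketch below is a simplification that ignores suspension, load shift, and tire slip (which may occur before tipping). The function name and example dimensions are illustrative assumptions.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def max_cornering_speed(turn_radius_m, track_width_m, cog_height_m):
    """Speed at which a rigid vehicle on flat ground starts to tip in a turn.

    Derived from v^2 / r = g * (track / 2) / h, solved for v.
    """
    return math.sqrt(G * turn_radius_m * track_width_m / (2.0 * cog_height_m))


# Hypothetical AGV: 0.6 m track width, centre of gravity 0.5 m high, 2 m turn radius.
v_limit = max_cornering_speed(turn_radius_m=2.0, track_width_m=0.6, cog_height_m=0.5)
print(f"tip-over speed limit: {v_limit:.2f} m/s")
```

Raising the load raises the centre of gravity and lowers this limit, which is one reason payload is a natural parameter to vary in simulation.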

Unstructured Environments

Deploying robots in construction zones or hospitals where obstacles are dynamic and unpredictable. Sim-to-Real prepares the robot for "edge cases" like sudden moving crowds.

Outdoor Terrain Navigation

Preparing delivery robots for varying pavement textures, wet surfaces, and uneven ground by simulating millions of surface friction interactions.

Multi-Agent Swarms

Coordinating fleets of hundreds of robots. Simulation is often the only practical way to train and test traffic management protocols for deadlock avoidance before deploying a fleet.

Frequently Asked Questions

Why can't we just train robots in the real world?

Training directly on hardware is slow, expensive, and dangerous. Reinforcement learning often requires millions of trial-and-error episodes; on physical hardware, this would take years and result in significant mechanical damage due to collisions during the early learning phases.

What is the "Reality Gap" and why is it a problem?

The Reality Gap refers to the mismatch between the simulation and the physical world. If a simulator doesn't accurately model motor backlash, friction, or sensor noise, a robot might learn a strategy that exploits these modeling errors, leading to failure when deployed on real hardware.

Does Sim-to-Real transfer require specific hardware on the AGV?

Generally, no special actuators are required, but the onboard computer (such as an NVIDIA Jetson or a similar industrial PC) must be powerful enough to run neural network inference in real time. The sensors used in reality (LIDAR, cameras) must also match the specifications modeled in the simulation.

What simulators are commonly used for AGVs?

Popular high-fidelity simulators include NVIDIA Isaac Sim, Gazebo, MuJoCo, and PyBullet. Isaac Sim is gaining traction in industrial robotics due to its photorealistic rendering and GPU-accelerated physics, which speeds up training times.

How does Domain Randomization help?

By randomizing parameters like floor friction, object mass, and lighting in the simulation, the policy stops relying on specific environmental cues. It learns a "robust" policy that treats the real world as just another variation of the simulation, which improves transfer success rates.
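A minimal sketch of per-episode randomization follows. The parameter names (`floor_friction`, `payload_mass_kg`, `light_intensity`) and their ranges are hypothetical placeholders; in practice the ranges would be chosen to bracket measurements from the target site, and the step that applies them depends on the simulator in use.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EpisodeParams:
    """Simulation parameters for one training episode (hypothetical names)."""
    floor_friction: float   # dimensionless friction coefficient
    payload_mass_kg: float  # mass carried by the AGV
    light_intensity: float  # relative brightness, 1.0 = nominal


# Illustrative ranges only; not taken from any particular robot or site.
RANGES = {
    "floor_friction": (0.4, 1.0),
    "payload_mass_kg": (0.0, 50.0),
    "light_intensity": (0.5, 1.5),
}


def sample_episode_params(rng):
    """Draw each parameter independently and uniformly from its range."""
    values = {name: rng.uniform(low, high) for name, (low, high) in RANGES.items()}
    return EpisodeParams(**values)


rng = random.Random(0)  # seeded so a training run can be reproduced
for episode in range(3):
    params = sample_episode_params(rng)
    # A real pipeline would apply `params` to the simulator before resetting the episode.
    print(episode, params)
```

Uniform sampling is the simplest choice; other distributions, or ranges that widen as training progresses, are common variations.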

Is Sim-to-Real used for SLAM (Simultaneous Localization and Mapping)?

Yes, it is increasingly used to tune SLAM algorithms. Simulation allows developers to test loop-closure and relocalization in challenging scenarios (like long corridors or dynamic warehouses) without physically setting up those environments.

What is "Zero-Shot" transfer?

Zero-Shot transfer occurs when a model trained in simulation works immediately on the physical robot without any further training or fine-tuning in the real world. This is the holy grail of Sim-to-Real research and saves immense deployment time.

How do you model sensor noise effectively?

We inject Gaussian noise, drift, and drop-out errors into the simulated sensor feeds. For example, we might randomly delete 5% of LIDAR points or blur camera frames to force the robot to navigate safely even when sensor data is imperfect.
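The LIDAR case can be sketched as follows. The 5% dropout rate comes from the example above. The noise level, the maximum range, and the convention of reporting a dropped beam as the maximum range are assumptions for illustration, since real sensors and drivers differ on all three.

```python
import random


def corrupt_lidar_scan(ranges_m, rng, noise_std_m=0.02, dropout_prob=0.05, max_range_m=30.0):
    """Return a degraded copy of a simulated LIDAR scan.

    Each beam is dropped with probability `dropout_prob` and reported as
    `max_range_m` (assumed "no return" convention). Every other beam gets
    zero-mean Gaussian noise and is clipped to [0, max_range_m].
    """
    noisy = []
    for r in ranges_m:
        if rng.random() < dropout_prob:
            noisy.append(max_range_m)
        else:
            measured = r + rng.gauss(0.0, noise_std_m)
            noisy.append(min(max(measured, 0.0), max_range_m))
    return noisy


rng = random.Random(42)
clean_scan = [5.0] * 360  # a perfect simulated scan: every beam hits a wall at 5 m
noisy_scan = corrupt_lidar_scan(clean_scan, rng)
dropped = sum(1 for r in noisy_scan if r == 30.0)
print(f"{dropped} of {len(noisy_scan)} beams dropped")
```

Drift and camera blur would need their own models; this sketch covers only per-beam noise and dropout.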

Can Sim-to-Real handle complex interaction tasks?

Yes, beyond simple navigation, it is used for mobile manipulation (AGVs with arms). It helps robots learn how to open doors, push carts, or interact with varying loads by simulating the contact physics involved in these tasks.

How accurate does the "Digital Twin" need to be?

Surprisingly, it doesn't need to be visually perfect, but it needs to be physically representative. With aggressive Domain Randomization, a robot can train in a crude-looking environment and still succeed in reality, provided the underlying physics (collision, mass) are modeled correctly.

What are the cost benefits of this approach?

While there is an upfront cost to building the simulation environment, the long-term savings can be substantial. It reduces damage to physical prototypes, reduces the need for large physical testing facilities, and allows for 24/7 parallel training of thousands of virtual robots.

How do we verify safety before real-world deployment?

Verification is done through a "Safety Shield" approach. Every command the neural network outputs is checked by a hard-coded safety layer (based on collision-avoidance kinematics) before it reaches the motors. Additionally, the policy is tested in "worst-case" simulation scenarios before hardware transfer.
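One simple form such a safety layer can take is a speed cap derived from stopping distance. The sketch below is an illustration under stated assumptions, not a certified safety function: it handles forward motion only, and the deceleration limit, reaction latency, and safety margin are hypothetical values that would have to be measured on the real AGV.

```python
import math


def shield_velocity(cmd_speed_mps, obstacle_dist_m,
                    max_decel_mps2=1.0, latency_s=0.1, margin_m=0.2):
    """Cap a forward speed command so the AGV can stop before the nearest obstacle.

    Stopping distance at speed v is v * latency + v^2 / (2 * decel). Setting this
    equal to the free distance and solving the quadratic for v gives the cap.
    Negative (reverse) commands are blocked in this sketch.
    """
    free_m = obstacle_dist_m - margin_m
    if free_m <= 0.0:
        return 0.0  # already inside the safety margin: stop
    a, t = max_decel_mps2, latency_s
    v_safe = -a * t + math.sqrt((a * t) ** 2 + 2.0 * a * free_m)
    return max(0.0, min(cmd_speed_mps, v_safe))


# The policy asks for 5 m/s with an obstacle 1.2 m ahead; the shield lowers the command.
print(shield_velocity(5.0, 1.2))
# With a clear path the command passes through unchanged.
print(shield_velocity(0.5, 10.0))
```

Because the shield is plain kinematics rather than a learned component, it can be reviewed and tested independently of the policy it guards.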