Sensor Fusion

Unlock precise navigation and enhanced safety for your mobile robot fleet. Sensor fusion combines data from LiDAR, cameras, and IMUs to create a unified, robust understanding of the environment that outperforms any single sensor.

Core Concepts

Redundancy

Ensures system reliability by using multiple sensors to measure the same physical property. If one sensor fails or is obstructed, others maintain the data stream for safe operation.

Complementarity

Combines sensors with different strengths. For example, LiDAR provides precise depth perception, while RGB cameras provide semantic understanding of signs and floor markings.

Kalman Filtering

The standard estimator for fusion. It tracks the state of the robot by predicting the next state from a motion model and then correcting that prediction with noisy sensor measurements in real time.
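
The predict-and-correct cycle can be sketched in one dimension. This is a minimal illustration, not a production filter: the class name `Kalman1D`, the noise values, and the sample measurements are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Scalar Kalman filter for a position that advances by a commanded step."""
    x: float  # state estimate (e.g., position in meters)
    p: float  # variance of the estimate
    q: float  # process noise variance added per prediction (assumed value)
    r: float  # measurement noise variance (assumed value)

    def predict(self, u: float) -> None:
        # Motion model: x_k = x_{k-1} + u; uncertainty grows by q.
        self.x += u
        self.p += self.q

    def update(self, z: float) -> float:
        # Kalman gain: how much to trust the measurement over the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)
        return k


# Hypothetical data: commanded step of 1 m per tick, noisy position readings.
kf = Kalman1D(x=0.0, p=1.0, q=0.01, r=0.25)
for u, z in [(1.0, 1.1), (1.0, 1.9), (1.0, 3.2)]:
    kf.predict(u)
    kf.update(z)
print(f"estimate={kf.x:.3f} variance={kf.p:.4f}")
```

After a few updates the estimate variance falls below the measurement variance `r`, which is the point of fusing the motion model with the sensor.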

Time Synchronization

Aligning timestamps from disparate hardware is critical. A timing offset between a camera and a wheel encoder turns directly into position error, because the robot keeps moving during the offset, so the error grows with speed.
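
As a rough back-of-the-envelope check, the position error from an uncompensated offset is simply speed multiplied by the offset. The speeds and offsets below are illustrative assumptions, not figures from any particular robot.

```python
def position_error_m(speed_mps: float, time_offset_s: float) -> float:
    """Distance travelled during an uncompensated timestamp offset."""
    return speed_mps * time_offset_s


# Hypothetical speeds and offsets, chosen only for illustration.
for speed_mps in (0.5, 2.0):
    for offset_ms in (1, 10, 50):
        error_mm = position_error_m(speed_mps, offset_ms / 1000.0) * 1000.0
        print(f"{speed_mps} m/s, {offset_ms} ms offset -> {error_mm:.1f} mm")
```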

Extrinsic Calibration

Defining the rigid transformation between sensors. Knowing exactly where the camera sits relative to the LiDAR is required to map pixels to 3D point clouds accurately.
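
To make the idea concrete, the sketch below applies an assumed rigid transform (rotation `R` and translation `t`) to a LiDAR point and projects it through a simple pinhole camera model. The axis conventions, the 10 cm mounting offset, and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values chosen for illustration; real values come from a calibration procedure.

```python
def transform_point(rotation, translation, point):
    """Apply p_cam = R * p_lidar + t using plain nested lists."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]


def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Pinhole projection; returns None for points behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


# Assumed frames: LiDAR x forward, y left, z up; camera x right, y down, z forward.
R_CAM_FROM_LIDAR = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
# Assumed mounting: LiDAR origin sits 0.10 m above the camera (negative camera y).
T_CAM_FROM_LIDAR = [0.0, -0.10, 0.0]

lidar_point = [5.0, 0.0, 0.0]  # 5 m straight ahead of the LiDAR
cam_point = transform_point(R_CAM_FROM_LIDAR, T_CAM_FROM_LIDAR, lidar_point)
pixel = project_to_pixel(cam_point, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
print(cam_point, pixel)
```

An error in `R` or `t` shifts every projected point, which is why a collision that nudges a sensor bracket can silently degrade the fused output.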

Localization & Mapping

Fusion is the engine behind SLAM (Simultaneous Localization and Mapping). It limits odometry "drift" by continually correcting the position estimate against visual or geometric features.

How It Works

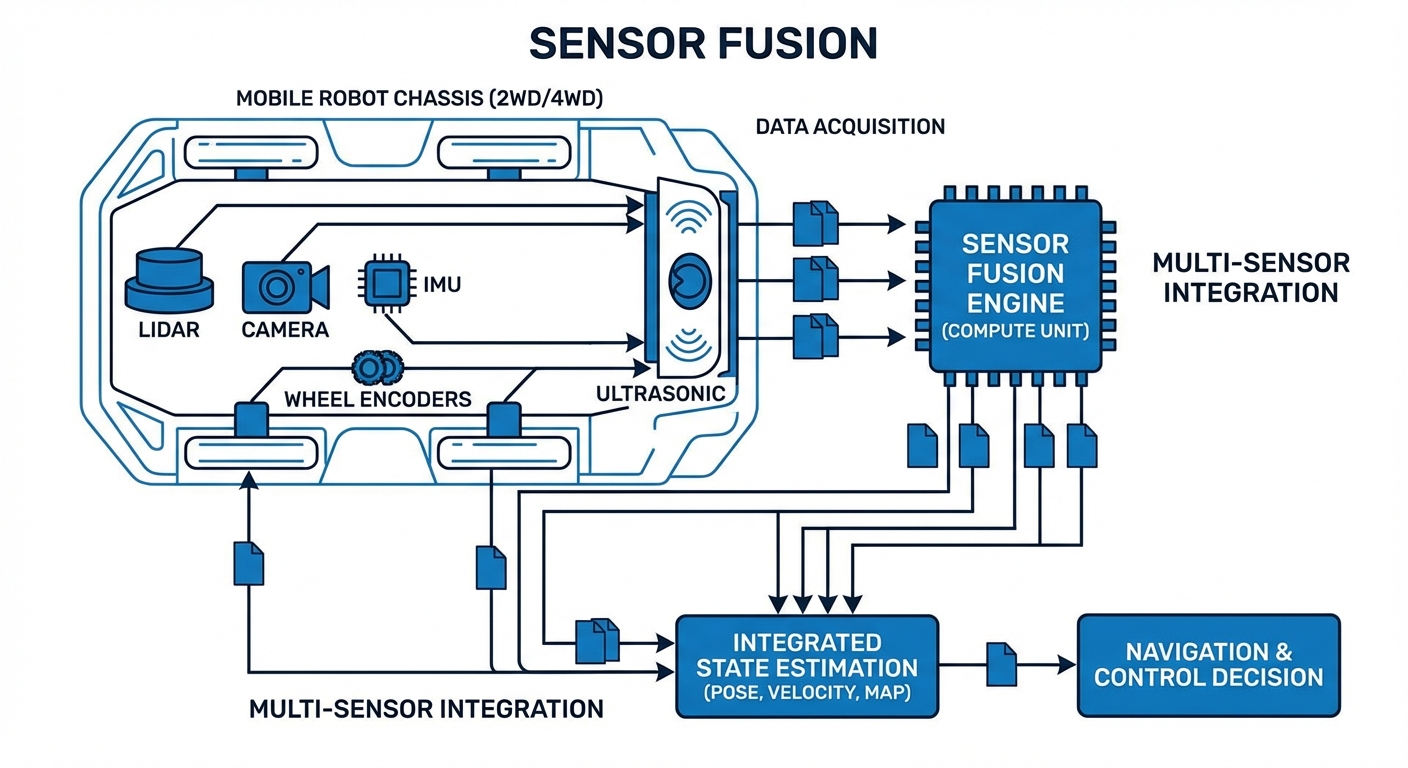

Sensor fusion is not just about layering data; it is a probabilistic process of state estimation. The system begins by acquiring raw data streams: point clouds from LiDAR, RGB frames from cameras, and acceleration vectors from the IMU.

These streams undergo Preprocessing to remove noise and align timestamps. Next, the Data Association step matches features observed by different sensors, confirming, for example, that the obstacle seen by the camera is the same object detected by the LiDAR.

Finally, a fusion algorithm (typically an Extended Kalman Filter or Particle Filter) merges these inputs. It weights each input according to the known variance (trustworthiness) of its sensor, so that noisier sensors count for less, and outputs a "Best Estimate" of the robot's pose and velocity.
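
The weighting step can be illustrated with inverse-variance averaging, which is what a Kalman update reduces to for two static measurements of the same quantity. The function name `fuse` and the sample readings are assumptions for this sketch.

```python
def fuse(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical range readings to the same wall, in meters.
lidar = (10.0, 0.01)     # low variance: trusted more
odometry = (10.4, 0.04)  # higher variance: trusted less
fused_value, fused_variance = fuse([lidar, odometry])
print(f"fused={fused_value:.3f} m, variance={fused_variance:.4f}")
```

The fused value lands closer to the LiDAR reading, and the fused variance is smaller than either input variance.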

Real-World Applications

Warehouse Automation (AGVs)

In dynamic fulfillment centers, robots fuse wheel odometry with 2D LiDAR to distinguish between permanent racking and moving humans, allowing for high-speed operation in shared spaces without safety violations.

Healthcare Logistics (AMRs)

Delivery robots in hospitals use visual-inertial fusion to navigate long, featureless corridors where LiDAR scan matching can lose track of motion along the corridor, so that medication and lab samples reach their destination autonomously.

Outdoor Material Handling

Yard tractors operating in rain or fog fuse Radar data (which penetrates weather) with LiDAR (which provides shape) to maintain operation when optical sensors are compromised by environmental conditions.

Precision Docking

For charging or conveyor handoffs, robots switch to a tight-fusion mode using short-range IR sensors and high-resolution motor encoders to achieve millimeter-level alignment accuracy.

Frequently Asked Questions

Why is sensor fusion necessary for modern AGVs?

Single sensors have inherent physical limitations. LiDAR can be confused by glass, cameras struggle in low light, and wheel encoders drift over time due to slippage. Fusion mitigates these individual failure points, creating a redundant system that ensures the robot knows where it is and what is around it, even if one sensor fails.

What is the difference between Loose and Tight coupling?

Loose coupling processes data from each sensor independently (e.g., getting a position from GPS and a position from LiDAR) before blending the results. Tight coupling fuses the raw data (e.g., GPS satellite signals and LiDAR point clouds) directly within the filter. Tight coupling is more computationally intensive but offers better accuracy and robustness in challenging environments.

Does sensor fusion increase the hardware cost significantly?

While adding sensors increases the Bill of Materials (BOM), sensor fusion can actually lower total system costs. By fusing data from cheaper, lower-fidelity sensors (like low-cost IMUs and standard cameras), you can often achieve performance comparable to expensive, high-end standalone navigation systems.

What computing power is required for real-time fusion?

It depends on the complexity. Basic EKF (Extended Kalman Filter) fusion for odometry runs easily on microcontrollers. However, fusing high-resolution 3D LiDAR point clouds with 4K video streams for SLAM requires powerful edge computing units like an NVIDIA Jetson or an industrial PC with GPU acceleration.

How do you handle time synchronization between sensors?

Precise timestamping is critical. Systems typically use hardware triggering so that sensors capture data at the same instant, or clock-synchronization protocols like PTP (Precision Time Protocol) so that every timestamp refers to a common clock. In software, buffers align data packets by timestamp before they are fed into the fusion algorithm, which prevents "ghosting" effects.
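
One common way to do the software alignment is to interpolate a high-rate signal at the timestamp of a lower-rate one. The sketch below assumes a sorted buffer of encoder samples and a single camera timestamp; the function name and the sample values are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a scalar signal at time t.

    timestamps must be sorted ascending. Returns None when t lies
    outside the buffer, so the caller can wait for more data.
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    alpha = (t - t0) / (t1 - t0)
    return values[i - 1] + alpha * (values[i] - values[i - 1])


# Hypothetical encoder buffer: time in seconds, travelled distance in meters.
encoder_t = [0.00, 0.02, 0.04]
encoder_d = [0.00, 0.04, 0.08]
camera_t = 0.03  # the camera frame falls between two encoder samples
print(interpolate_at(encoder_t, encoder_d, camera_t))
```

Returning `None` for out-of-range timestamps avoids extrapolating from stale data, which is one source of the ghosting mentioned above.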

What happens if one sensor provides garbage data?

Robust fusion algorithms include integrity checks (such as a Mahalanobis distance gate) to reject outliers. If a sensor's reading lies too far from the model's prediction relative to the expected uncertainty, the algorithm lowers its "trust" weight or ignores it entirely, so the corrupted data does not affect the robot's trajectory.
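
A Mahalanobis gate for a 2D position measurement might look like the sketch below. The innovation is the difference between the measurement and the prediction, and the threshold 5.991 is the 95% chi-square value for two degrees of freedom. The covariance and sample innovations are assumed values for illustration.

```python
CHI2_95_2DOF = 5.991  # 95% chi-square threshold, 2 degrees of freedom


def mahalanobis_sq_2d(innovation, covariance):
    """Squared Mahalanobis distance v^T S^-1 v for a 2x2 covariance S."""
    (a, b), (c, d) = covariance
    det = a * d - b * c
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    dx, dy = innovation
    # Closed-form inverse of a 2x2 matrix: (1/det) * [[d, -b], [-c, a]]
    return (dx * (d * dx - b * dy) + dy * (-c * dx + a * dy)) / det


def accept_measurement(innovation, covariance, gate=CHI2_95_2DOF):
    """True when the measurement is statistically consistent with the prediction."""
    return mahalanobis_sq_2d(innovation, covariance) <= gate


# Hypothetical innovation covariance: 0.2 m standard deviation on each axis.
S = [[0.04, 0.0], [0.0, 0.04]]
print(accept_measurement((0.1, 0.1), S))  # small deviation
print(accept_measurement((1.0, 0.0), S))  # 5-sigma jump on one axis
```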

Is Kalman Filtering the only method used?

While the Kalman Filter (and its variants EKF/UKF) is the industry workhorse for state estimation, other methods exist. Particle Filters are popular for localization in large maps (Monte Carlo Localization). More recently, graph-based optimization and end-to-end deep learning approaches have been gaining traction for complex SLAM tasks.

How often does the system need calibration?

Intrinsic calibration (sensor lens/internal properties) is usually done once. Extrinsic calibration (position of sensors relative to each other) must be checked if the robot suffers a collision or significant vibration. Some advanced systems now feature "online calibration" that fine-tunes parameters dynamically during operation.

Can sensor fusion help with outdoor navigation?

Absolutely. Outdoor environments are unstructured and weather-dependent. Fusing GNSS (GPS) with IMU and Wheel Odometry (Dead Reckoning) allows the robot to bridge gaps where satellite signals are blocked by buildings or trees, maintaining accurate localization in "urban canyons."
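
The bridging behavior can be sketched as dead reckoning that runs every step, with a GNSS correction applied only when a fix is available. The fixed `gain` of 0.8 is a hypothetical stand-in for the gain a Kalman filter would compute, and the speeds and fixes are made-up sample data.

```python
import math


def dead_reckon(pose, speed_mps, yaw_rate_rps, dt_s):
    """Advance (x, y, heading) using wheel speed and IMU yaw rate."""
    x, y, heading = pose
    heading += yaw_rate_rps * dt_s
    x += speed_mps * math.cos(heading) * dt_s
    y += speed_mps * math.sin(heading) * dt_s
    return (x, y, heading)


def correct_with_gnss(pose, fix, gain):
    """Pull the position toward a GNSS fix; gain stands in for a Kalman gain."""
    x, y, heading = pose
    return (x + gain * (fix[0] - x), y + gain * (fix[1] - y), heading)


# One entry per 1 s step; None marks steps where the satellite signal is blocked.
gnss_fixes = [None, None, None, (4.1, 0.0)]
pose = (0.0, 0.0, 0.0)  # starting pose, assumed known from an earlier fix
trajectory = []
for fix in gnss_fixes:
    pose = dead_reckon(pose, speed_mps=1.0, yaw_rate_rps=0.0, dt_s=1.0)
    if fix is not None:
        pose = correct_with_gnss(pose, fix, gain=0.8)
    trajectory.append(pose)
print(trajectory)
```

During the three blocked steps the pose comes from odometry alone, and the first fix after the outage pulls the accumulated estimate back toward the satellite position.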

What is the difference between low-level and high-level fusion?

Low-level fusion combines raw data (e.g., pixel-level fusion of thermal and RGB images). High-level fusion combines the *decisions* or *objects* detected by individual sensors (e.g., "Camera A sees a person" + "LiDAR B sees an obstacle" = "Confirmed Person"). High-level requires less bandwidth but may lose subtle details.
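
A high-level fusion step can be sketched as matching object lists. Here each camera detection carries a label and a bearing, each LiDAR cluster carries a bearing and a range, and the two are paired when their bearings agree within a threshold. The data layout, the 0.05 rad threshold, and the function name are assumptions; the sketch also ignores angle wraparound for brevity.

```python
def fuse_detections(camera_dets, lidar_clusters, max_bearing_diff_rad=0.05):
    """Pair each camera detection with the nearest LiDAR cluster by bearing.

    camera_dets: list of (label, bearing_rad)
    lidar_clusters: list of (bearing_rad, range_m)
    Returns confirmed objects that both sensors agree on.
    """
    fused = []
    for label, cam_bearing in camera_dets:
        best = min(
            lidar_clusters,
            key=lambda cluster: abs(cluster[0] - cam_bearing),
            default=None,
        )
        if best is not None and abs(best[0] - cam_bearing) <= max_bearing_diff_rad:
            fused.append({"label": label, "bearing_rad": best[0], "range_m": best[1]})
    return fused


# Hypothetical detections from one time step.
camera = [("person", 0.10), ("pallet", -0.40)]
lidar = [(0.11, 3.2), (0.60, 7.0)]
confirmed = fuse_detections(camera, lidar)
print(confirmed)
```

The camera contributes the label and the LiDAR contributes the range, while the unmatched pallet detection is dropped because no cluster supports it.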

How does IMU integration improve navigation?

Inertial Measurement Units (IMUs) operate at very high frequencies (100 Hz or more) compared to LiDAR or cameras (10-30 Hz). Fusion uses the IMU to fill the gaps between visual frames, providing smooth, continuous velocity and orientation updates that are crucial for stabilizing control loops.
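
Gap filling can be sketched as integrating gyro samples forward from the heading of the latest camera frame. The rates (100 Hz IMU, 10 Hz camera) and the constant turn rate are illustrative assumptions, and the sketch ignores gyro bias, which a real filter would estimate.

```python
def propagate_heading(heading_rad, gyro_z_rps, dt_s):
    """Integrate gyro yaw-rate samples; returns the heading after each sample."""
    headings = []
    for rate in gyro_z_rps:
        heading_rad += rate * dt_s
        headings.append(heading_rad)
    return headings


# Hypothetical rates: IMU at 100 Hz, camera at 10 Hz, so 10 IMU samples per gap.
camera_heading_rad = 0.0   # heading from the latest camera frame
gyro_samples = [0.5] * 10  # constant 0.5 rad/s turn
between_frames = propagate_heading(camera_heading_rad, gyro_samples, dt_s=0.01)
print([round(h, 3) for h in between_frames])
```

The control loop receives ten heading updates in the interval where the camera alone would provide none.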