RGB-D Cameras

Unlock true spatial awareness for your mobile robots by combining high-resolution color imagery with precise per-pixel depth data. RGB-D sensors enable AGVs to navigate complex environments, recognize objects, and operate safely alongside humans.

Core Concepts

Depth Sensing

Unlike standard cameras, RGB-D sensors measure the distance to the scene at every pixel in the frame. The result is a depth map that tells the robot how far away each visible surface, and therefore each obstacle, is.

Point Clouds

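Back-projecting each depth pixel through the camera's intrinsic parameters (focal length and optical center) turns the depth map into a 3D point cloud, and attaching the matching RGB value to each point makes it a colored point cloud. Each point is an (X, Y, Z) coordinate in the camera's frame, so the cloud captures the geometry of the environment and is refreshed with every frame.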
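A point cloud is typically built by back-projecting each depth pixel through a pinhole camera model. As a minimal sketch, the Python below assumes an undistorted depth image stored in meters (0 or NaN meaning "no reading") and intrinsics `fx`, `fy`, `cx`, `cy` in pixels. Camera SDKs perform the equivalent step and usually also correct lens distortion; the function names here are illustrative only.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def deproject_pixel(u: float, v: float, z: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point:
    """Pinhole back-projection of pixel (u, v) with depth z (meters) into the camera frame."""
    x = (u - cx) * z / fx  # meters to the right of the optical axis
    y = (v - cy) * z / fy  # meters below the optical axis
    return (x, y, z)


def depth_to_point_cloud(depth: List[List[float]],
                         fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Convert a row-major depth image into a list of 3D points, skipping invalid pixels."""
    cloud: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0.0:  # assumption: 0 (or NaN, which fails this test) marks a missing reading
                cloud.append(deproject_pixel(u, v, z, fx, fy, cx, cy))
    return cloud


# Example: a 2x2 depth image with one missing pixel yields three points.
cloud = depth_to_point_cloud([[1.0, 0.0], [2.0, 2.0]], fx=600, fy=600, cx=0.5, cy=0.5)
print(len(cloud))  # 3
```

To color the cloud, each point would additionally take the RGB value at the corresponding pixel of a color image that has been aligned to the depth image.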

Sensor Fusion

RGB-D cameras align (register) the color image with the depth map so that every depth pixel has a matching color. This lets AI models go beyond detecting "an object" at 2 meters and identify it specifically as "a wooden pallet".
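One common way to perform that alignment, sketched here under stated assumptions: each depth pixel is back-projected into 3D, moved into the color camera's frame with the depth-to-color extrinsics (rotation `R`, translation `t`), and projected with the color intrinsics. The intrinsic values, the 15 mm sensor offset, and the function name are hypothetical; camera SDKs usually provide this registration as a built-in step.

```python
from typing import Sequence, Tuple

Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy) in pixels


def depth_pixel_to_color_pixel(u: float, v: float, z: float,
                               depth_k: Intrinsics, color_k: Intrinsics,
                               R: Sequence[Sequence[float]],
                               t: Sequence[float]) -> Tuple[float, float]:
    """Find where depth pixel (u, v) with depth z (m) lands in the color image."""
    fx, fy, cx, cy = depth_k
    # 1. Back-project into the depth camera frame (pinhole model, no distortion).
    p = ((u - cx) * z / fx, (v - cy) * z / fy, z)
    # 2. Transform into the color camera frame: p_color = R * p + t.
    pc = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
    if pc[2] <= 0.0:
        raise ValueError("point is behind the color camera")
    # 3. Project with the color camera intrinsics.
    fxc, fyc, cxc, cyc = color_k
    return (fxc * pc[0] / pc[2] + cxc, fyc * pc[1] / pc[2] + cyc)


# Hypothetical setup: identical intrinsics, color sensor offset 15 mm along x.
K = (600.0, 600.0, 320.0, 240.0)
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
uc, vc = depth_pixel_to_color_pixel(320, 240, 1.5, K, K, IDENTITY, (0.015, 0.0, 0.0))
print(round(uc, 3), round(vc, 3))  # 326.0 240.0
```

Reading the color image at `(uc, vc)` gives the color for that depth pixel. The example also shows why registration needs depth: the parallax between the two sensors shifts the lookup by 6 px at 1.5 m but only 3 px at 3 m.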

Structured Light

Many RGB-D cameras project an invisible infrared pattern onto the scene. The camera calculates depth by analyzing how this pattern deforms over object surfaces.
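The deformation is converted to distance by triangulation. Treating a rectified projector-and-camera pair like a stereo pair, a pattern feature shifted by a disparity of d pixels lies at depth Z = f·b/d, where f is the focal length in pixels and b is the projector-to-camera baseline. The sketch below uses illustrative values; real devices add calibration and sub-pixel matching on top.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Triangulated depth Z = f * b / d for a rectified camera/projector (or stereo) pair."""
    if disparity_px <= 0.0:
        raise ValueError("disparity must be positive; zero means no match or infinite depth")
    return focal_px * baseline_m / disparity_px


# Illustrative numbers: f = 600 px, baseline = 5 cm.
for d in (60.0, 30.0, 15.0):
    print(d, "px ->", round(depth_from_disparity(d, 600.0, 0.05), 3), "m")
```

Halving the disparity doubles the depth, so distant surfaces are resolved with far fewer pixels of shift than nearby ones.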

Time of Flight (ToF)

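ToF sensors emit light pulses and measure the time they take to bounce back. Because the sensor supplies its own illumination, depth readings stay accurate across changing lighting conditions, although strong infrared sources such as direct sunlight can still interfere.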
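The underlying arithmetic is short. For a direct pulse measurement, distance is d = c·Δt/2, since the light travels out and back. Many ToF cameras instead modulate their light continuously and measure the phase shift φ of the return, giving d = c·φ/(4π·f_mod), which repeats every c/(2·f_mod) meters. The 20 MHz modulation frequency below is an illustrative value, not a property of any specific sensor.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Pulsed ToF: the light covers the distance twice, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def distance_from_phase(phase_rad: float, f_mod_hz: float) -> float:
    """Continuous-wave ToF: distance from the phase shift of the modulated signal."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Beyond this distance the phase wraps around and readings alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * f_mod_hz)


print(round(distance_from_round_trip(20e-9), 3))     # 20 ns round trip
print(round(distance_from_phase(math.pi, 20e6), 3))  # half a cycle at 20 MHz
print(round(unambiguous_range(20e6), 3))             # wrap-around distance at 20 MHz
```

A 20 ns round trip corresponds to about 3 m, and at 20 MHz the phase wraps at roughly 7.5 m, so a surface at 8 m could be misread as about 0.5 m unless the sensor combines several modulation frequencies.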

Visual SLAM

RGB-D data is critical for vSLAM (Simultaneous Localization and Mapping), allowing robots to build maps of unknown areas and track their location within them.
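One building block of RGB-D odometry can be sketched under strong assumptions: features matched between two consecutive frames have already been lifted to 3D with the depth map and projected onto the floor plane (x forward, y left), the robot moves in that plane, and there are no mismatches. The least-squares rotation and translation between the two point sets then have a closed form. Full vSLAM systems estimate all six degrees of freedom, reject outliers (for example with RANSAC), and add mapping and loop closure on top; `estimate_planar_motion` is a hypothetical name.

```python
import math
from typing import List, Tuple

Point2 = Tuple[float, float]


def estimate_planar_motion(prev: List[Point2], curr: List[Point2]) -> Tuple[float, float, float]:
    """Least-squares (theta, tx, ty) such that curr_i ~= R(theta) * prev_i + (tx, ty).

    prev[i] and curr[i] must be the same physical feature seen in two frames.
    The robot's own motion is the inverse of this transform.
    """
    n = len(prev)
    if n < 2 or n != len(curr):
        raise ValueError("need at least two matched point pairs")
    pmx = sum(p[0] for p in prev) / n
    pmy = sum(p[1] for p in prev) / n
    qmx = sum(q[0] for q in curr) / n
    qmy = sum(q[1] for q in curr) / n
    # Accumulate dot and cross products of the centered point pairs.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(prev, curr):
        ax, ay = px - pmx, py - pmy
        bx, by = qx - qmx, qy - qmy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    # Translation maps the rotated prev centroid onto the curr centroid.
    tx = qmx - (math.cos(theta) * pmx - math.sin(theta) * pmy)
    ty = qmy - (math.sin(theta) * pmx + math.cos(theta) * pmy)
    return theta, tx, ty


# Synthetic check: rotate three landmarks by 10 degrees and shift them.
true_theta = math.radians(10.0)
prev_pts = [(2.0, 0.5), (3.0, -1.0), (4.0, 1.5)]
curr_pts = [(math.cos(true_theta) * x - math.sin(true_theta) * y + 0.3,
             math.sin(true_theta) * x + math.cos(true_theta) * y - 0.1) for x, y in prev_pts]
theta, tx, ty = estimate_planar_motion(prev_pts, curr_pts)
print(round(math.degrees(theta), 6), round(tx, 6), round(ty, 6))
```

On noise-free synthetic data the function recovers the 10 degree rotation and the (0.3, -0.1) shift exactly; with real matches it returns the best fit in the least-squares sense.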

How It Works

The term "RGB-D" refers to the output channels: Red, Green, Blue, and Depth. While a standard camera flattens the 3D world into a 2D image, an RGB-D camera preserves the Z-axis (distance) information.

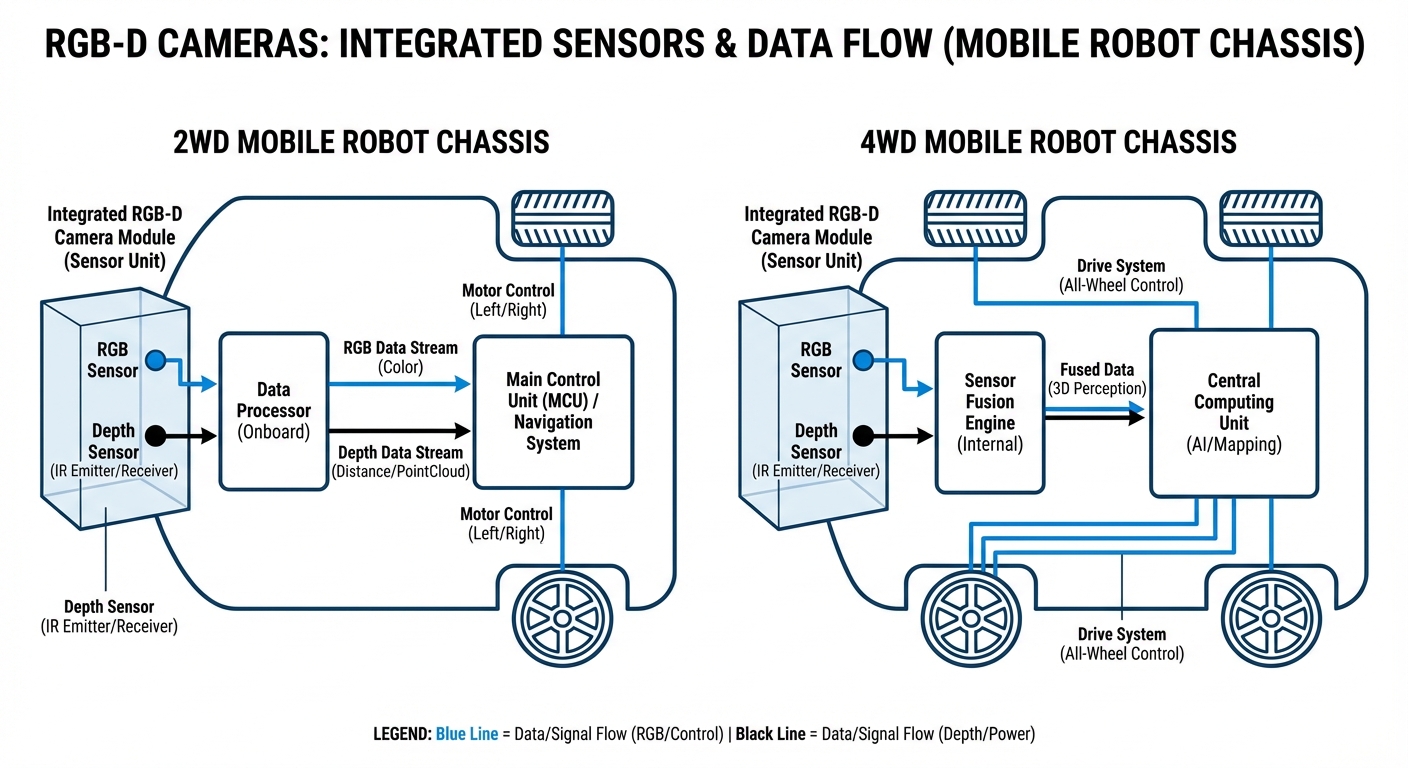

In a typical mobile robot setup, the RGB sensor captures the visual appearance of the environment, which is useful for human operators and AI object recognition (e.g., distinguishing between a stop sign and a red box). Simultaneously, the depth sensor (using stereoscopy, structured light, or Time-of-Flight) generates a depth map, usually visualized as a grayscale image in which pixel brightness encodes distance.

The onboard processor synchronizes these streams. This allows the robot's navigation stack to project obstacles into a local costmap, ensuring the AGV can plan a path around a chair or a person in real-time, rather than relying solely on a pre-mapped static floor plan.
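A simplified sketch of that projection step, assuming the point cloud has already been transformed into the robot base frame (x forward, y left, z up, floor at z = 0). Points inside a height band are marked as occupied cells in a square grid centered on the robot; points near the floor or above the robot are ignored. The grid size, resolution, and height limits are hypothetical defaults, and production navigation stacks typically also ray-trace to clear cells and inflate obstacles by the robot's radius.

```python
import math
from typing import Iterable, List, Tuple


def mark_obstacles(points: Iterable[Tuple[float, float, float]],
                   size_m: float = 4.0, resolution_m: float = 0.05,
                   min_height_m: float = 0.05, max_height_m: float = 1.8) -> List[List[int]]:
    """Return an occupancy grid (1 = obstacle) centered on the robot."""
    cells = int(round(size_m / resolution_m))
    grid = [[0] * cells for _ in range(cells)]
    half = size_m / 2.0
    for x, y, z in points:
        if not (min_height_m <= z <= max_height_m):
            continue  # floor returns or points higher than the robot can hit
        col = math.floor((x + half) / resolution_m)  # forward axis -> columns
        row = math.floor((y + half) / resolution_m)  # lateral axis -> rows
        if 0 <= row < cells and 0 <= col < cells:
            grid[row][col] = 1
    return grid


# A chair leg 1 m ahead, a floor point, and a point outside the 4 m window.
grid = mark_obstacles([(1.0, 0.0, 0.4), (1.0, 0.2, 0.01), (3.5, 0.0, 0.4)])
print(sum(map(sum, grid)))  # 1
```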

Real-World Applications

Dynamic Obstacle Avoidance

A 2D LiDAR scans a single plane, so it often misses overhanging objects like tables or low forklift forks. RGB-D cameras provide volumetric sensing that detects obstacles at any height within their field of view, preventing collisions with hanging cables or shelves.
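The difference can be illustrated with a toy check, assuming points in the robot base frame (floor at z = 0) and a robot driving straight ahead. A planar scanner mounted at a fixed height only sees points near that height, while a volumetric check tests the robot's whole swept box. The robot dimensions, scan height, and tolerance are hypothetical.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


def first_hit_ahead(points: List[Point], width_m: float, height_m: float,
                    lookahead_m: float) -> Optional[float]:
    """Distance to the nearest point inside the robot's swept box, at any height up to height_m."""
    half_w = width_m / 2.0
    hits = [x for x, y, z in points
            if 0.0 < x <= lookahead_m and abs(y) <= half_w and 0.0 < z <= height_m]
    return min(hits) if hits else None


def seen_by_planar_scan(points: List[Point], scan_height_m: float,
                        tolerance_m: float = 0.02) -> List[Point]:
    """Points a single-plane scanner mounted at scan_height_m could return."""
    return [p for p in points if abs(p[2] - scan_height_m) <= tolerance_m]


# Edge of a tabletop 0.75 m above the floor, 1.2 m ahead of the robot.
table_edge = [(1.2, -0.2, 0.75), (1.2, 0.0, 0.75), (1.2, 0.2, 0.75)]
print(seen_by_planar_scan(table_edge, scan_height_m=0.2))
print(first_hit_ahead(table_edge, width_m=0.6, height_m=1.0, lookahead_m=2.0))
```

A scanner at 0.2 m returns nothing for the tabletop edge, while the volumetric check reports an obstacle 1.2 m ahead for a 1 m tall robot.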

Pallet Pocket Detection

Autonomous forklifts use RGB-D to precisely locate pallet pockets. The depth data confirms the orientation and structural integrity of the pallet, allowing for automated engagement without human alignment.
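Orientation can be estimated by fitting a plane to the depth points on the pallet's front face. The sketch below assumes those points have already been segmented out, uses the camera optical frame (x right, y down, z forward), and fits z = a·x + b·y + c by least squares; the yaw of the face relative to the camera is then atan(a), and c is the distance along the optical axis. Locating the pockets themselves is not shown, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_face_plane(points: List[Point3]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c, solved from the normal equations with Cramer's rule."""
    sxx = sxy = syy = sx = sy = sxz = syz = sz = 0.0
    for x, y, z in points:
        sxx += x * x
        sxy += x * y
        syy += y * y
        sx += x
        sy += y
        sxz += x * z
        syz += y * z
        sz += z
    A = [[sxx, sxy, sx],
         [sxy, syy, sy],
         [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("degenerate point set (e.g. all points on one line)")
    coeffs = []
    for k in range(3):
        # Cramer's rule: replace column k with the right-hand side.
        Ak = [[rhs[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]
        coeffs.append(_det3(Ak) / det)
    return coeffs[0], coeffs[1], coeffs[2]


# Synthetic face 2 m away, turned so its right side is farther (z grows 0.1 m per m of x).
face = [(x / 10.0, y / 10.0, 2.0 + 0.1 * (x / 10.0))
        for x in range(-5, 6) for y in range(0, 4)]
a, b, c = fit_face_plane(face)
yaw_deg = math.degrees(math.atan(a))
print(round(yaw_deg, 2), round(c, 3))
```

For this synthetic face the fit reports a yaw of about 5.71 degrees at 2 m; the sign of `a` indicates which side of the face is farther from the camera.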

Semantic Scene Understanding

In crowded warehouses, robots need to know the difference between a temporary obstruction (a person) and a permanent one (a wall). RGB-D data feeds deep learning models that classify objects, enabling smarter path-planning decisions.
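Once a segmentation model has labeled each pixel, the aligned depth map turns those labels into distances the planner can use. In the sketch below, `label_map` stands in for the model's per-pixel class IDs (already aligned to the depth image), and the class IDs and names are hypothetical. Taking the median of the valid depths keeps the estimate robust to a few noisy pixels at object edges.

```python
from statistics import median
from typing import Dict, List


def distance_per_class(label_map: List[List[int]], depth_m: List[List[float]],
                       class_names: Dict[int, str]) -> Dict[str, float]:
    """Median depth (m) of the valid pixels of each labeled class."""
    samples: Dict[str, List[float]] = {}
    for label_row, depth_row in zip(label_map, depth_m):
        for label, z in zip(label_row, depth_row):
            if label in class_names and z > 0.0:  # skip background and missing depth
                samples.setdefault(class_names[label], []).append(z)
    return {name: median(zs) for name, zs in samples.items()}


labels = [[0, 1, 1],
          [2, 1, 1]]
depth = [[4.0, 2.0, 2.1],
         [3.0, 0.0, 2.3]]
print(distance_per_class(labels, depth, {1: "person", 2: "wall"}))
# {'person': 2.1, 'wall': 3.0}
```

A planner could, for example, wait for or give extra clearance to a person at 2.1 m while routing closer past a wall; that policy is a design choice layered on top of the data.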

Docking & High-Precision Alignment

When an AGV approaches a charging station or conveyor belt, wheel odometry drifts too much and GPS is unavailable or too coarse indoors. RGB-D cameras track visual markers (fiducials) or surface geometry to align the robot with millimeter-level accuracy.
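The final alignment can be framed as computing three errors from two detected points on the dock, for example the left and right corners of a fiducial, expressed in the robot base frame (x forward, y left). The functions and the 5 mm / 0.5 degree tolerances below are hypothetical; a real docking controller would drive these errors toward zero over successive frames.

```python
import math
from typing import Tuple

Point2 = Tuple[float, float]


def docking_errors(left: Point2, right: Point2) -> Tuple[float, float, float]:
    """Return (distance_m, lateral_m, yaw_rad) of the dock face relative to the robot.

    left/right: dock points in the robot frame (x forward, y left); left has the larger y.
    yaw is 0 when the face is perpendicular to the robot's heading.
    """
    distance = (left[0] + right[0]) / 2.0  # forward distance to the face center
    lateral = (left[1] + right[1]) / 2.0   # sideways offset of the face center
    yaw = math.atan2(right[0] - left[0], left[1] - right[1])
    return distance, lateral, yaw


def is_aligned(lateral_m: float, yaw_rad: float,
               lateral_tol_m: float = 0.005, yaw_tol_rad: float = math.radians(0.5)) -> bool:
    """Hypothetical acceptance check before the final docking move."""
    return abs(lateral_m) <= lateral_tol_m and abs(yaw_rad) <= yaw_tol_rad


d, lat, yaw = docking_errors(left=(0.502, 0.102), right=(0.498, -0.098))
print(round(d, 3), round(lat, 3), round(math.degrees(yaw), 2), is_aligned(lat, yaw))
```

Here the robot is centered to within 2 mm but still about 1.1 degrees off in heading, so `is_aligned` returns False.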

Frequently Asked Questions

What is the main difference between RGB-D cameras and LiDAR?

LiDAR uses laser pulses to create a very precise, long-range 2D or 3D map, but it is often expensive and lacks color information. RGB-D cameras provide dense 3D data and color texture at a lower cost, but generally have a shorter effective range and can be sensitive to lighting conditions.

How does lighting affect RGB-D performance?

It depends on the technology. Stereo cameras need ambient light to see textures, while Structured Light cameras can struggle in direct sunlight because the sun washes out the projected IR pattern. Time-of-Flight (ToF) sensors are generally more robust but can still face interference from strong infrared sources.

Can RGB-D cameras detect glass or transparent surfaces?

Generally, no. The infrared light used by most depth technologies (IR pattern projection or ToF) passes through clear glass or reflects unpredictably off mirrors, leaving "holes" of missing data in the depth map. Mobile robots therefore usually rely on ultrasonic (sonar) sensors or dedicated software filters to navigate safely around glass walls.
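Those holes can at least be measured. The heuristic below, a sketch rather than a reliable glass detector, reports the fraction of pixels without a valid reading in a region of interest of the depth image; a region directly ahead that suddenly goes mostly empty is a reason to slow down and defer to the ultrasonic sensors. The 50% threshold and the region bounds are hypothetical.

```python
from typing import List, Tuple


def invalid_fraction(depth_m: List[List[float]], row0: int, row1: int,
                     col0: int, col1: int) -> float:
    """Fraction of pixels in rows [row0, row1) and cols [col0, col1) with no valid depth."""
    total = invalid = 0
    for row in depth_m[row0:row1]:
        for z in row[col0:col1]:
            total += 1
            if not (z > 0.0):  # catches 0.0 and NaN, common "no return" markers
                invalid += 1
    return invalid / total if total else 0.0


def looks_like_depth_hole(depth_m: List[List[float]], roi: Tuple[int, int, int, int],
                          threshold: float = 0.5) -> bool:
    """Flag a region whose share of missing depth reaches the (hypothetical) threshold."""
    return invalid_fraction(depth_m, *roi) >= threshold


nan = float("nan")
frame = [[1.9, 0.0, 0.0, 2.0],
         [1.9, nan, 0.0, 2.0],
         [1.8, 0.0, nan, 1.9]]
print(invalid_fraction(frame, 0, 3, 1, 3))         # 1.0
print(looks_like_depth_hole(frame, (0, 3, 0, 4)))  # True
```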

What is the typical range of an RGB-D camera for robotics?

Most commercial RGB-D cameras (like Intel RealSense or OAK-D) are optimized for near-field interaction, typically ranging from 0.2 meters up to 5 or 10 meters. Beyond this range, depth accuracy degrades significantly compared to LiDAR.
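For triangulation-based sensors (stereo and structured light), the degradation follows from Z = f·b/d: differentiating gives an error of roughly σ_Z ≈ Z²·σ_d/(f·b), so depth error grows with the square of distance. The focal length, baseline, and 0.1 px matching error below are illustrative assumptions, not the specifications of any camera named above; ToF sensors follow a different error model.

```python
def depth_rms_error(z_m: float, focal_px: float, baseline_m: float,
                    disparity_err_px: float) -> float:
    """First-order depth error for a triangulating sensor: Z^2 * sigma_d / (f * b)."""
    return z_m ** 2 * disparity_err_px / (focal_px * baseline_m)


for z in (0.5, 1.0, 3.0, 5.0, 10.0):
    err_mm = 1000.0 * depth_rms_error(z, focal_px=640.0, baseline_m=0.05, disparity_err_px=0.1)
    print(f"{z:>5.1f} m -> ~{err_mm:.1f} mm")
```

With these assumed values the error is about 3 mm at 1 m but about 31 cm at 10 m, a hundredfold increase for a tenfold distance.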

Do I need a GPU to process RGB-D data?

Processing 3D point clouds is computationally intensive. While basic obstacle avoidance can run on a standard CPU, applications involving semantic segmentation, object recognition, or dense vSLAM usually require an onboard GPU (like an NVIDIA Jetson module) for real-time performance.

What is the difference between Structured Light and Stereoscopic depth?

Stereoscopic vision calculates depth from two horizontally offset cameras, similar to human eyes; it works well outdoors but needs textured surfaces. Structured Light projects a known pattern onto the scene to calculate depth; it offers high precision on featureless walls but struggles in bright sunlight.

How are RGB-D cameras calibrated?

They require both intrinsic calibration (lens distortion, focal length) and extrinsic calibration (position relative to the robot base). While many come factory-calibrated, robust AGV fleets often run periodic re-calibration routines to keep the depth map aligned with the robot's movement model.
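The extrinsic calibration is what lets the robot express every depth point in its own frame. The sketch below assumes ROS REP 103 conventions (camera optical frame: x right, y down, z forward; robot base: x forward, y left, z up), a camera pitched down by a known angle, and a mounting offset measured from the base origin; all numbers are hypothetical. A calibration routine would estimate the pitch and offset; this code only applies them.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Rotation taking optical-frame axes to base-frame axes for a level camera:
# base_x = cam_z, base_y = -cam_x, base_z = -cam_y.
OPTICAL_TO_BASE = ((0.0, 0.0, 1.0),
                   (-1.0, 0.0, 0.0),
                   (0.0, -1.0, 0.0))


def matmul3(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> List[List[float]]:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def camera_to_base(p_cam: Vec3, pitch_down_rad: float, mount_xyz: Vec3) -> Vec3:
    """Transform a point from the camera optical frame into the robot base frame."""
    c, s = math.cos(pitch_down_rad), math.sin(pitch_down_rad)
    # Positive rotation about the base y axis (pointing left) tilts the view downward.
    pitch = ((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c))
    R = matmul3(pitch, OPTICAL_TO_BASE)
    out = [sum(R[i][j] * p_cam[j] for j in range(3)) + mount_xyz[i] for i in range(3)]
    return (out[0], out[1], out[2])


# Camera 0.30 m above the floor, pitched 30 degrees down: a point 0.6 m along
# its optical axis should land on the floor (z = 0) about 0.52 m ahead of the lens.
x, y, z = camera_to_base((0.0, 0.0, 0.6), math.radians(30.0), (0.0, 0.0, 0.30))
print(round(x, 3), round(y, 3), round(z, 3))
```

A quick sanity check like the floor-point example above is also a cheap way to detect a camera that has been knocked out of alignment.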

Is it possible to use multiple RGB-D cameras on one robot?

Yes, multi-camera setups are common for 360-degree coverage. However, interference can occur when multiple cameras project IR light onto the same surface. This is typically mitigated with hardware synchronization cables or by staggering the firing times of the emitters.
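Staggering amounts to giving each camera its own slice of the frame period. The scheduler below is a hypothetical sketch: it assumes every camera exposes for the same time, adds a guard interval between emitters, and checks that all slots fit within one frame at the target rate. Actual trigger offsets are configured through each vendor's synchronization interface.

```python
from typing import List


def emitter_offsets_us(num_cameras: int, fps: int, exposure_us: int,
                       guard_us: int = 500) -> List[int]:
    """Start offset (microseconds) of each camera's exposure within one frame period."""
    period_us = 1_000_000 // fps
    slot_us = exposure_us + guard_us
    if num_cameras * slot_us > period_us:
        raise ValueError(f"{num_cameras} slots of {slot_us} us do not fit in {period_us} us")
    return [i * slot_us for i in range(num_cameras)]


print(emitter_offsets_us(3, fps=30, exposure_us=8000, guard_us=1000))  # [0, 9000, 18000]
```

A fourth camera with the same settings would need 36,000 us of a 33,333 us frame and raise `ValueError`, leaving shorter exposures or a lower frame rate as the options.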

What bandwidth interfaces are required?

RGB-D streams generate large amounts of data. USB 3.0 or Gigabit Ethernet (GigE) is the standard requirement. USB 2.0 is insufficient for transmitting high-resolution color and depth streams simultaneously at a usable frame rate (30 fps or higher).
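The arithmetic behind that requirement is easy to check for uncompressed streams. The resolution and pixel formats below (8-bit RGB at 3 bytes per pixel, 16-bit depth at 2 bytes per pixel) are typical examples rather than any specific camera's modes, and the link figures are nominal signaling rates; usable throughput is lower once protocol overhead is included.

```python
def stream_mbit_s(width: int, height: int, bytes_per_pixel: int, fps: int) -> float:
    """Raw (uncompressed) bit rate of one video stream in Mbit/s."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


color = stream_mbit_s(1280, 720, 3, 30)  # 8-bit RGB
depth = stream_mbit_s(1280, 720, 2, 30)  # 16-bit depth
total = color + depth
print(f"color {color:.0f} + depth {depth:.0f} = {total:.0f} Mbit/s")

NOMINAL_LINK_MBIT_S = {"USB 2.0": 480, "Gigabit Ethernet": 1000, "USB 3.0": 5000}
for link, rate in NOMINAL_LINK_MBIT_S.items():
    print(f"{link}: {'fits' if total < rate else 'too slow'} uncompressed")
```

At 1280x720 and 30 fps the raw pair needs about 1.1 Gbit/s, more than twice USB 2.0's nominal rate and slightly more than Gigabit Ethernet's, so Ethernet-connected sensors tend to rely on lower resolutions or frame rates, or on compressing the color stream before transmission.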

How do blind spots affect AGV safety?

Every RGB-D camera has a minimum sensing distance (e.g., 20 cm). If an obstacle is closer than this, the robot cannot see it. Engineers mitigate this by recessing the camera into the robot chassis or by adding complementary sensors (ultrasonic sensors or bumpers) for the immediate proximity zone.
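The effect of recessing is simple geometry, sketched here under the assumption that both the camera's minimum range and the recess are measured along the direction of travel, and that the proximity sensor covers everything from the bumper out to its reach. The 20 cm minimum range matches the example above; the recess and sensor reach values are hypothetical.

```python
def uncovered_gap_m(camera_min_range_m: float, recess_m: float,
                    proximity_reach_m: float = 0.0) -> float:
    """Depth of the zone in front of the bumper seen by neither the camera nor a proximity sensor."""
    camera_blind = max(0.0, camera_min_range_m - recess_m)  # blind band outside the chassis
    return max(0.0, camera_blind - proximity_reach_m)


print(round(uncovered_gap_m(0.20, 0.00), 2))        # flush mount: 0.2 m unseen
print(round(uncovered_gap_m(0.20, 0.08), 2))        # recessed 8 cm: 0.12 m unseen
print(round(uncovered_gap_m(0.20, 0.08, 0.15), 2))  # plus a 15 cm ultrasonic zone: 0.0
```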

Are RGB-D cameras durable enough for industrial environments?

Consumer-grade sensors may fail due to vibration or dust. Industrial-grade RGB-D cameras feature IP65/IP67-rated enclosures, locking cable connectors (GMSL or industrial Ethernet), and shock-resistant mounts designed specifically for warehouse AGVs and forklifts.

Can RGB-D replace safety scanners (Safety LiDAR)?

Not usually for PL d / Category 3 (ISO 13849-1) safety certification. While AI vision is improving, certified Safety LiDARs are deterministic and fail-safe. RGB-D is typically used for navigation-level obstacle avoidance, while the Safety LiDAR acts as the ultimate emergency-stop mechanism.