Point Cloud Processing

Unlock the third dimension for your autonomous fleet. Transform raw LiDAR and depth sensor data into precise, navigable 3D maps that enable AGVs to detect obstacles, recognize objects, and operate safely in complex, dynamic environments.

Core Concepts

Voxelization

The process of downsampling a massive point cloud by dividing space into a grid of 3D cubes (voxels) and keeping one representative point, often the centroid, per occupied voxel. This reduces computational load while preserving the geometric structure needed for navigation.
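
A minimal sketch of voxel downsampling in plain Python, assuming the cloud is a list of (x, y, z) tuples in meters. It uses the centroid of each voxel as the representative point, which is one common choice; the function name `voxel_downsample` and the `voxel_size` parameter are illustrative, not taken from any particular library.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points sharing a cubic voxel with their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets = defaultdict(list)
    for x, y, z in points:
        # floor() gives the integer voxel index and handles negative coordinates correctly.
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    # One output point per occupied voxel: the mean of its members.
    return [
        tuple(sum(p[axis] for p in members) / len(members) for axis in range(3))
        for members in buckets.values()
    ]


cloud = [(0.01, 0.02, 0.0), (0.03, 0.04, 0.0), (0.5, 0.5, 0.5)]
print(voxel_downsample(cloud, voxel_size=0.1))  # two points remain
```

The first two points share a 10 cm voxel and collapse into one centroid; the third sits in its own voxel and is kept.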

Segmentation

Algorithmically separating points into distinct clusters. For AGVs, this is critical to distinguish the "drivable floor" from walls, pallets, and moving obstacles.
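
One common way to do this, once the floor has been removed, is Euclidean clustering: points closer than a chosen radius are linked, and each connected group becomes one object. The sketch below is a plain-Python illustration with a brute-force neighbor search; `euclidean_clusters`, `radius`, and `min_size` are hypothetical names and tuning parameters, not the specific segmentation method of any product.

```python
import math
from collections import deque


def euclidean_clusters(points, radius, min_size=1):
    """Group point indices so that points linked by a chain of
    neighbors (each closer than `radius`) share a cluster."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        cluster = [seed]
        while queue:
            i = queue.popleft()
            # Brute-force neighbor search; a KD-tree would replace this in practice.
            neighbors = [j for j in unvisited if math.dist(points[i], points[j]) < radius]
            for j in neighbors:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        # Very small clusters are often noise and can be discarded.
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


cloud = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (0.1, 0.0, 0.0),  # object A
         (2.0, 0.0, 0.0), (2.05, 0.0, 0.0)]                    # object B
print(euclidean_clusters(cloud, radius=0.2))
```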

Registration (ICP)

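Iterative Closest Point (ICP) algorithms align multiple scans into a single coordinate system. Each iteration pairs every point with its nearest neighbor in a reference scan and then solves for the rigid rotation and translation that best aligns those pairs. This allows the robot to build a consistent map as it moves through the warehouse.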
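
To make the ICP loop concrete, here is a minimal point-to-point ICP sketch restricted to 2D (x, y) for brevity; the 3D version follows the same match, solve, apply cycle but typically solves for the rotation with an SVD. Correspondences use brute-force nearest neighbors, and the names `icp_2d` and `best_rigid_2d` are illustrative.

```python
import math


def best_rigid_2d(src, dst):
    """Closed-form rotation + translation minimizing sum |R*s + t - d|^2 over paired points."""
    n = len(src)
    scx = sum(p[0] for p in src) / n
    scy = sum(p[1] for p in src) / n
    dcx = sum(q[0] for q in dst) / n
    dcy = sum(q[1] for q in dst) / n
    cross = dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - scx, py - scy, qx - dcx, qy - dcy
        cross += px * qy - py * qx
        dot += px * qx + py * qy
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, dcx - (c * scx - s * scy), dcy - (s * scx + c * scy)


def icp_2d(source, target, iterations=30):
    """Point-to-point ICP; returns (theta, tx, ty) mapping source onto target."""
    theta, tx, ty = 0.0, 0.0, 0.0
    current = list(source)
    for _ in range(iterations):
        # 1. Pair each current point with its nearest target point (brute force).
        matches = [min(target, key=lambda q: math.dist(p, q)) for p in current]
        # 2. Solve for the incremental rigid transform given these pairs.
        d_theta, dtx, dty = best_rigid_2d(current, matches)
        c, s = math.cos(d_theta), math.sin(d_theta)
        # 3. Apply the increment to the points and compose it into the running estimate.
        current = [(c * x - s * y + dtx, s * x + c * y + dty) for x, y in current]
        theta += d_theta
        tx, ty = c * tx - s * ty + dtx, s * tx + c * ty + dty
    return theta, tx, ty
```

Real implementations add a convergence check, outlier rejection for bad pairs, and a KD-tree for the nearest-neighbor step, which dominates the run time.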

Noise Filtering

Removing outliers and "ghost points" caused by dust, reflective surfaces, or sensor errors. Clean data ensures the AGV doesn't stop for phantom obstacles.
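
A minimal sketch of one widely used outlier filter, statistical outlier removal (PCL provides a similar `StatisticalOutlierRemoval` filter): each point's mean distance to its `k` nearest neighbors is compared with the cloud-wide average, and points far above it are treated as ghosts. This is plain Python with brute-force neighbors; the default parameter values are illustrative assumptions, not recommended settings.

```python
import math
import statistics


def remove_statistical_outliers(points, k=4, std_ratio=1.0):
    """Keep points whose mean k-nearest-neighbor distance is at most mean + std_ratio * stdev."""
    mean_knn = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        mean_knn.append(sum(nearest) / len(nearest))
    threshold = statistics.mean(mean_knn) + std_ratio * statistics.pstdev(mean_knn)
    return [p for p, d in zip(points, mean_knn) if d <= threshold]


# A 3x3 patch of floor points plus one isolated "ghost" return.
patch = [(x * 0.1, y * 0.1, 0.0) for x in range(3) for y in range(3)]
ghost = (5.0, 5.0, 5.0)
filtered = remove_statistical_outliers(patch + [ghost])
print(len(filtered), ghost in filtered)
```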

Feature Extraction

Identifying geometric shapes like planes, cylinders, or edges within the cloud. This helps robots recognize specific landmarks like racking columns or charging stations.
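
Plane extraction is often done with RANSAC: repeatedly fit a plane through three random points and keep the plane supported by the most inliers. The sketch below assumes (x, y, z) tuples in meters; `fit_plane_ransac` is a hypothetical name, the `threshold` and `iterations` values are illustrative, and the fixed `seed` only makes the result reproducible.

```python
import math
import random


def fit_plane_ransac(points, threshold=0.02, iterations=200, seed=0):
    """Return ((a, b, c, d), inlier_indices) for the best plane ax + by + cz + d = 0."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        p1, p2, p3 = rng.sample(points, 3)
        u = [p2[i] - p1[i] for i in range(3)]
        v = [p3[i] - p1[i] for i in range(3)]
        # The plane normal is the cross product u x v.
        n = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
        length = math.sqrt(sum(comp * comp for comp in n))
        if length < 1e-9:  # collinear sample: no unique plane
            continue
        a, b, c = (comp / length for comp in n)
        d = -(a * p1[0] + b * p1[1] + c * p1[2])
        # With a unit normal, |ax + by + cz + d| is the point-to-plane distance.
        inliers = [i for i, p in enumerate(points)
                   if abs(a * p[0] + b * p[1] + c * p[2] + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = (a, b, c, d), inliers
    return best_plane, best_inliers


floor = [(x * 0.5, y * 0.5, 0.0) for x in range(5) for y in range(5)]
column = [(1.0, 1.0, z) for z in (0.5, 1.0, 1.5)]
plane, inliers = fit_plane_ransac(floor + column)
print(plane, len(inliers))
```

Removing the inliers and running the fit again is a simple way to peel off further planes, such as a rack face or a pallet front.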

KD-Trees

A space-partitioning data structure used to organize points. It drastically speeds up nearest-neighbor searches, essential for real-time collision avoidance calculations.
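
A compact KD-tree sketch for 3D points: the tree splits at the median along alternating axes, and the nearest-neighbor query skips any branch whose splitting plane is farther away than the best match found so far. This dict-based version is for illustration only; production code would typically rely on the KD-tree search built into libraries such as PCL or Open3D.

```python
import math


def build_kdtree(points, depth=0):
    """Recursively split points at the median along alternating axes (x, y, z, ...)."""
    if not points:
        return None
    axis = depth % len(points[0])
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return {
        "point": points[mid],
        "axis": axis,
        "left": build_kdtree(points[:mid], depth + 1),
        "right": build_kdtree(points[mid + 1:], depth + 1),
    }


def nearest(node, target, best=None):
    """Return the stored point closest to target, pruning subtrees that cannot win."""
    if node is None:
        return best
    point, axis = node["point"], node["axis"]
    if best is None or math.dist(target, point) < math.dist(target, best):
        best = point
    diff = target[axis] - point[axis]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, target, best)
    # Search the far side only if the splitting plane is closer than the current best.
    if abs(diff) < math.dist(target, best):
        best = nearest(far, target, best)
    return best


tree = build_kdtree([(0.0, 0.0, 0.0), (1.0, 2.0, 0.5), (3.0, 1.0, 0.0), (0.5, 0.5, 2.0)])
print(nearest(tree, (0.9, 1.8, 0.4)))
```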

How It Works

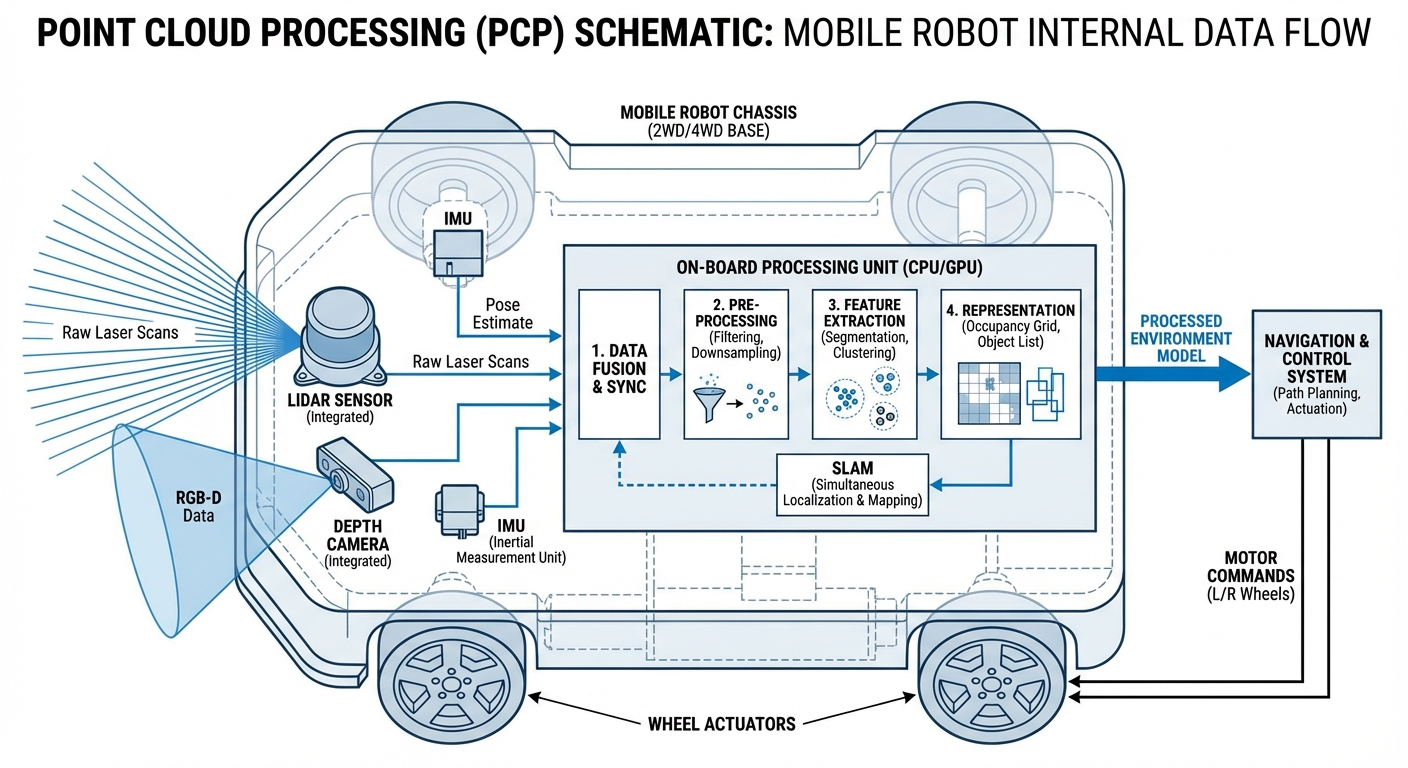

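Point cloud processing begins with Data Acquisition using LiDAR sensors or stereo cameras. LiDAR sensors fire laser pulses and measure the distance to each surface they hit, while stereo cameras capture two images from slightly offset viewpoints and infer depth from the disparity between them. Either way, the result is a stream of X, Y, Z coordinates describing the surfaces in view, often millions of points per second.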
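
Both acquisition paths end in a coordinate conversion. A LiDAR return is typically reported as a range plus horizontal and vertical beam angles, which converts to Cartesian X, Y, Z; a rectified stereo pair gives depth as focal length times baseline divided by disparity. The axis convention (x forward, y left, z up) and the function names below are assumptions for illustration; real sensors document their own frames.

```python
import math


def lidar_return_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one LiDAR return to sensor-frame XYZ (assumed: x forward, y left, z up)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # length of the projection onto the x-y plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth of a pixel in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px


print(lidar_return_to_xyz(10.0, 0.0, -2.0))  # 10 m ahead, 2 degrees below horizontal
print(stereo_depth(focal_px=700.0, baseline_m=0.12, disparity_px=42.0))
```

The stereo formula also shows why depth cameras lose accuracy with distance: far objects produce small disparities, so a one-pixel error changes the depth estimate a lot.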

Once captured, the raw data undergoes Pre-processing. This involves downsampling to reduce data density and filtering to remove sensor noise. The cleaned data is then passed through segmentation algorithms which separate the ground plane from obstacles.
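
As a minimal illustration of pre-processing and ground separation, the sketch below crops the cloud to a working radius (a simple pass-through filter) and then labels points within a height tolerance of the floor as ground. It assumes a flat, level floor at a known height in the sensor frame, an assumption that breaks down on ramps; `split_ground`, `floor_z`, `tolerance`, and `max_range` are hypothetical names.

```python
def split_ground(points, floor_z=0.0, tolerance=0.05, max_range=20.0):
    """Crop to a working radius, then split points into ground and obstacles by height."""
    ground, obstacles = [], []
    for x, y, z in points:
        if x * x + y * y > max_range * max_range:
            continue  # pass-through filter: drop returns beyond the range of interest
        if abs(z - floor_z) <= tolerance:
            ground.append((x, y, z))
        else:
            obstacles.append((x, y, z))
    return ground, obstacles


ground, obstacles = split_ground([(1.0, 0.0, 0.01), (2.0, 0.0, 0.5), (30.0, 0.0, 0.0)])
print(ground, obstacles)
```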

Finally, the processed cloud is used for Simultaneous Localization and Mapping (SLAM). By matching current scans against a stored map, the AGV estimates its position, typically to centimeter-level accuracy, allowing for safe trajectory planning around static and dynamic objects.
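
Localization by scan matching can be sketched as scoring candidate poses: transform the current scan into the map frame for each candidate and count how many points land on cells the stored map marks as occupied. This 2D brute-force version assumes the map is a set of occupied grid cells and that candidate poses are supplied externally (for example, around an odometry estimate); `pose_score` and `localize` are hypothetical names, and production SLAM systems use far more efficient, probabilistic matching.

```python
import math


def pose_score(scan, occupied_cells, pose, cell_size=0.1):
    """Count scan points that land on occupied map cells after applying pose (x, y, theta)."""
    px, py, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    hits = 0
    for x, y in scan:
        mx, my = c * x - s * y + px, s * x + c * y + py  # sensor frame -> map frame
        if (math.floor(mx / cell_size), math.floor(my / cell_size)) in occupied_cells:
            hits += 1
    return hits


def localize(scan, occupied_cells, candidates, cell_size=0.1):
    """Return the candidate pose whose projected scan best agrees with the map."""
    return max(candidates, key=lambda pose: pose_score(scan, occupied_cells, pose, cell_size))


# Hypothetical map: a wall occupying the 10 cm cells along y = 2.0..2.1 m, x = 0..3 m.
occupied = {(i, 20) for i in range(30)}
# That wall as seen from the true pose (1.0, 0.5, 0.0), in the sensor frame.
scan = [(i * 0.1 + 0.05 - 1.0, 1.55) for i in range(5, 25)]
candidates = [(1.0, 0.0, 0.0), (1.0, 0.5, 0.0), (0.5, 0.5, 0.3)]
print(localize(scan, occupied, candidates))
```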

Real-World Applications

High-Bay Warehouse Logistics

AGVs use point clouds to detect pallet pockets at heights of 10+ meters. By identifying the exact plane and orientation of the pallet, forklifts can engage loads safely without manual intervention.

Outdoor Navigation

Unlike systems that rely on 2D laser scanners, 3D point cloud processing allows mobile robots to navigate uneven terrain, detect curbs, and identify overhanging obstacles like tree branches in outdoor yards.

Automated Trailer Loading

Robots scan the interior of semi-trailers to determine available volumetric space. The point cloud data helps the robot calculate the optimal placement for each pallet to maximize fill rate.

Dynamic Safety Zones

Instead of static safety fields, point cloud processing enables "volumetric safety." Robots can distinguish between a human worker (requiring a stop) and a hanging curtain or dust particle (safe to proceed).

Frequently Asked Questions

What is the difference between 2D LiDAR and 3D Point Cloud processing?

2D LiDAR scans a single horizontal slice of the environment, providing a flat map. 3D point cloud processing uses sensors that scan multiple layers or the entire field of view, creating a volumetric representation. This allows AGVs to see obstacles below or above the scan plane, such as forklift tines near the floor or tabletops and overhanging shelves, which a 2D sensor might miss.

Does point cloud processing require a GPU on the robot?

For high-resolution, real-time processing, a GPU (like NVIDIA Jetson modules) is highly recommended to handle parallel processing tasks like filtering and segmentation. However, simpler operations on heavily downsampled clouds (voxelized data) can often be run on powerful multi-core CPUs found in standard industrial PCs.

How does the system handle transparent or reflective surfaces?

Transparent surfaces (glass) and highly reflective surfaces (mirrors, polished metal) are notoriously difficult for LiDAR, often resulting in "holes" or scattered points. Advanced processing pipelines use intensity filtering and multi-echo returns to identify these anomalies, or fuse data with sonar/ultrasonic sensors which are less affected by light properties.

What is Voxel Grid filtering and why is it used?

Voxel Grid filtering is a downsampling technique where space is divided into 3D cubes (voxels). All points within a single voxel are approximated by their centroid. This significantly reduces the dataset size (e.g., from 100,000 points to 5,000 points) while maintaining the overall shape of the environment, making real-time navigation computationally feasible.

Can point cloud data be used for semantic understanding?

Yes. By applying deep learning models (such as PointNet) to point clouds, the robot can not only detect that an object exists but also classify it. It can distinguish between a "human," a "forklift," and a "wall," allowing it to apply different behavioral rules, such as slowing down and keeping a wider safety margin around humans while treating walls as static obstacles to plan around.

What software libraries are commonly used for this?

The Point Cloud Library (PCL) is a widely used open-source C++ library for 2D/3D image and point cloud processing. Open3D is a more recent library that is gaining popularity for its Python integration and ease of use. ROS (Robot Operating System) also provides extensive wrappers for these libraries.

How much data storage does a point cloud map require?

Raw point cloud data is heavy: a single high-resolution 3D LiDAR can produce tens of megabytes per second, and multi-sensor setups multiply that. For mapping, however, the data is converted into compact formats such as OctoMap occupancy trees or saved as selected keyframe scans. A mapped warehouse of 10,000 square meters might take up only a few hundred megabytes on the robot's disk once optimized.
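
The raw data rate is simple arithmetic: points per second times bytes per point. The sketch assumes each point is stored as four 32-bit floats (x, y, z, intensity), and the two point rates are illustrative values for a lower- and a higher-resolution sensor, not figures from a specific datasheet.

```python
def raw_data_rate_mb_s(points_per_second, bytes_per_point=16):
    """Raw throughput of one sensor in MB/s (assumed: 4 float32 fields per point)."""
    return points_per_second * bytes_per_point / 1e6


# Hypothetical point rates, not taken from any datasheet:
for pts in (300_000, 2_600_000):
    print(f"{pts:>9,} pts/s -> {raw_data_rate_mb_s(pts):.1f} MB/s")
```

Multiplying by the number of sensors and by the recording time shows why raw logs grow quickly while an optimized map stays small.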

How does latency affect high-speed AGVs?

Latency is critical. If processing takes 200 ms, a robot moving at 2 m/s will have traveled 0.4 meters before it can react to the data. To mitigate this, engineers use predictive control algorithms that account for the processing delay, or dedicate specific hardware (such as FPGAs) to safety-critical obstacle detection so that it runs with minimal, deterministic latency.
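
The 0.4 m figure is speed times latency. Adding the constant-deceleration braking distance v^2 / (2a) gives a rough total stopping distance; the 1 m/s^2 deceleration below is an assumed value for illustration, not a safety specification.

```python
def reaction_distance(speed_m_s, latency_s):
    """Distance covered before the robot can even start reacting."""
    return speed_m_s * latency_s


def stopping_distance(speed_m_s, latency_s, decel_m_s2):
    """Latency distance plus braking distance v^2 / (2a), assuming constant deceleration."""
    return reaction_distance(speed_m_s, latency_s) + speed_m_s ** 2 / (2 * decel_m_s2)


print(reaction_distance(2.0, 0.2))        # the 200 ms, 2 m/s case from the text
print(stopping_distance(2.0, 0.2, 1.0))   # with an assumed 1 m/s^2 braking deceleration
```

Because braking distance grows with the square of speed, cutting latency matters most at the higher speeds where the braking term already dominates the safety margin.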

What happens if the environment changes drastically?

This is a map maintenance problem, and if localization fails it can turn into the "kidnapped robot problem," where the robot loses track of its position and must relocalize from scratch. Point cloud localization is generally robust to small changes (moved boxes). For drastic changes (racking layout shifts), the robot must be able to perform continuous mapping or use "long-term SLAM" features to update its reference map dynamically.

Is it better to use RGB-D cameras or LiDAR?

LiDAR offers superior range (often 100 m or more) and accuracy regardless of lighting conditions, making it ideal for primary navigation. RGB-D (depth) cameras provide rich color data and dense clouds but have limited range (usually under 10 m) and struggle in direct sunlight. Most advanced AGVs use sensor fusion to combine the strengths of both.

How is ground segmentation performed on slopes or ramps?

A single global plane fit (e.g., RANSAC) breaks down on ramps, because the floor is no longer one plane. Advanced algorithms use surface normal estimation or connectivity analysis instead. They examine the angle between adjacent points: if the angle changes gradually, the surface is treated as ramp or floor; if it is steep (near 90 degrees), the points are classified as an obstacle.
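
A minimal sketch of the slope test along one scan profile, assuming the points are ordered outward from the robot and the first point lies on the floor. Each point is compared with the last point labeled ground, so a gradual ramp stays "ground" while a near-vertical step becomes "obstacle"; `classify_profile` is a hypothetical name and the 15-degree `max_slope_deg` limit is an illustrative assumption.

```python
import math


def classify_profile(profile, max_slope_deg=15.0):
    """Label (x, y, z) points along one outward profile as 'ground' or 'obstacle'."""
    labels = ["ground"]  # assumption: the point nearest the robot is on the floor
    last_ground = profile[0]
    for point in profile[1:]:
        run = math.hypot(point[0] - last_ground[0], point[1] - last_ground[1])
        rise = point[2] - last_ground[2]
        # abs() treats sudden drops (e.g., a dock edge) like sudden steps up.
        slope = math.degrees(math.atan2(abs(rise), run))
        if slope <= max_slope_deg:
            labels.append("ground")
            last_ground = point
        else:
            labels.append("obstacle")
    return labels


# Flat floor, a gentle ramp (about 5.7 degrees), then the vertical face of a box.
profile = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.1), (3.0, 0.0, 0.2), (3.05, 0.0, 0.6)]
print(classify_profile(profile))
```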

Does point cloud processing increase battery consumption?

Yes. 3D LiDAR sensors consume more power than 2D scanners, and the heavy computing required to process 3D data drains the battery faster. However, the efficiency gains from smoother paths, fewer stops, and faster operation speeds usually outweigh the electrical cost of the processing hardware.