ORB-SLAM

A versatile and accurate visual SLAM solution empowering AGVs to navigate complex environments without expensive LiDAR. Unlock precise localization and sparse mapping using standard camera hardware.

Core Concepts

Feature Extraction

Uses ORB (Oriented FAST and Rotated BRIEF) features to identify distinct points in an image. These binary features are cheap to compute and compare, are robust to rotation, and handle moderate scale changes because they are extracted across an image pyramid.
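
Part of the efficiency comes from how binary descriptors are compared: matching two of them is an XOR followed by a bit count (the Hamming distance). The sketch below illustrates the idea in plain Python. It is not ORB-SLAM's implementation; the descriptors are random stand-ins, and `hamming_distance` and `best_match` are hypothetical helper names.

```python
import random

DESCRIPTOR_BITS = 256  # an ORB descriptor is 256 bits (32 bytes)


def hamming_distance(a, b):
    """Number of differing bits between two descriptors stored as ints."""
    return bin(a ^ b).count("1")


def best_match(query, candidates):
    """Return (index, distance) of the candidate closest to the query."""
    distances = [hamming_distance(query, c) for c in candidates]
    index = min(range(len(candidates)), key=distances.__getitem__)
    return index, distances[index]


rng = random.Random(0)
# Random stand-ins for descriptors attached to stored map points.
map_descriptors = [rng.getrandbits(DESCRIPTOR_BITS) for _ in range(100)]

# Simulate re-observing descriptor 42 with 10 bits flipped by image noise.
noisy = map_descriptors[42]
for bit in rng.sample(range(DESCRIPTOR_BITS), 10):
    noisy ^= 1 << bit

index, distance = best_match(noisy, map_descriptors)
print(index, distance)
```

The noisy descriptor still matches its source, because unrelated 256-bit descriptors differ in roughly half of their bits.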

Local Mapping

Constructs a local map of the immediate surroundings by triangulating ORB features from new keyframes. This process runs in parallel to tracking to ensure real-time performance.

Loop Closure

Detects when the AGV returns to a previously visited location. This is critical for correcting accumulated drift (odometry error) and ensuring global map consistency.

Pose Estimation

Calculates the robot's precise position and orientation (6-DoF) by matching current view features against the local map, allowing for accurate path following.

Bundle Adjustment

Uses graph optimization (via the g2o library) to minimize reprojection error, the pixel distance between where a map point is observed and where the current estimate projects it. Camera poses and 3D point locations are refined jointly.
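
To make the reprojection error concrete, here is a minimal sketch that projects one 3D point through an ideal pinhole camera and measures how far it lands from the observed pixel. The intrinsics, point, and poses are made-up values, and `project` and `reprojection_error` are hypothetical helpers. A real bundle adjustment minimizes the sum of many such errors over all poses and points at once.

```python
import math


def project(point_w, rotation, translation, fx, fy, cx, cy):
    """Project a 3D world point into pixel coordinates with a pinhole model."""
    # Transform from world to camera coordinates: p_c = R * p_w + t.
    p_c = [
        sum(rotation[r][c] * point_w[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    x, y, z = p_c
    if z <= 0:
        raise ValueError("point is behind the camera")
    return fx * x / z + cx, fy * y / z + cy


def reprojection_error(observed_px, point_w, rotation, translation, intrinsics):
    """Euclidean pixel distance between an observation and its projection."""
    u, v = project(point_w, rotation, translation, *intrinsics)
    return math.hypot(observed_px[0] - u, observed_px[1] - v)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
intrinsics = (500.0, 500.0, 320.0, 240.0)  # fx, fy, cx, cy (made-up values)
point = (0.2, -0.1, 2.0)                   # metres, world frame
observation = (371.0, 214.0)               # pixel where the feature was seen

good_pose = (identity, [0.0, 0.0, 0.0])
drifted_pose = (identity, [0.05, 0.0, 0.0])  # 5 cm translation error along x

err_good = reprojection_error(observation, point, *good_pose, intrinsics)
err_drifted = reprojection_error(observation, point, *drifted_pose, intrinsics)
print(err_good, err_drifted)
```

The drifted pose produces a much larger pixel error than the good one, which is the signal the optimizer uses to pull poses and points back into agreement.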

Keyframe Selection

Intelligently selects the most useful frames to keep in the map while discarding redundant ones. This keeps the memory footprint low even during long-duration operations.
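
A keyframe decision of this kind can be sketched as a handful of threshold checks. The version below is a simplified illustration, loosely modelled on the sort of conditions described for ORB-SLAM. The `TrackingState` fields and the default thresholds are assumptions for the example, not values read from any particular release.

```python
from dataclasses import dataclass


@dataclass
class TrackingState:
    frames_since_relocalization: int
    frames_since_last_keyframe: int
    local_mapping_idle: bool
    tracked_points: int
    reference_keyframe_points: int


def needs_new_keyframe(state, min_frame_gap=20, min_tracked_points=50,
                       max_overlap_ratio=0.9):
    """Return True when the current frame adds enough new information."""
    recently_relocalized = state.frames_since_relocalization <= min_frame_gap
    mapper_available = (state.local_mapping_idle
                        or state.frames_since_last_keyframe > min_frame_gap)
    tracking_healthy = state.tracked_points >= min_tracked_points
    # Low overlap with the reference keyframe means the view has changed.
    view_changed = (state.tracked_points
                    < max_overlap_ratio * state.reference_keyframe_points)
    return (not recently_relocalized and mapper_available
            and tracking_healthy and view_changed)


redundant = TrackingState(100, 5, True, 190, 200)  # 95% overlap: skip
novel = TrackingState(100, 5, True, 120, 200)      # 60% overlap: keep
print(needs_new_keyframe(redundant), needs_new_keyframe(novel))
```

Frames that see almost the same points as the reference keyframe are skipped, which is what keeps the map from growing with every frame.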

How It Works

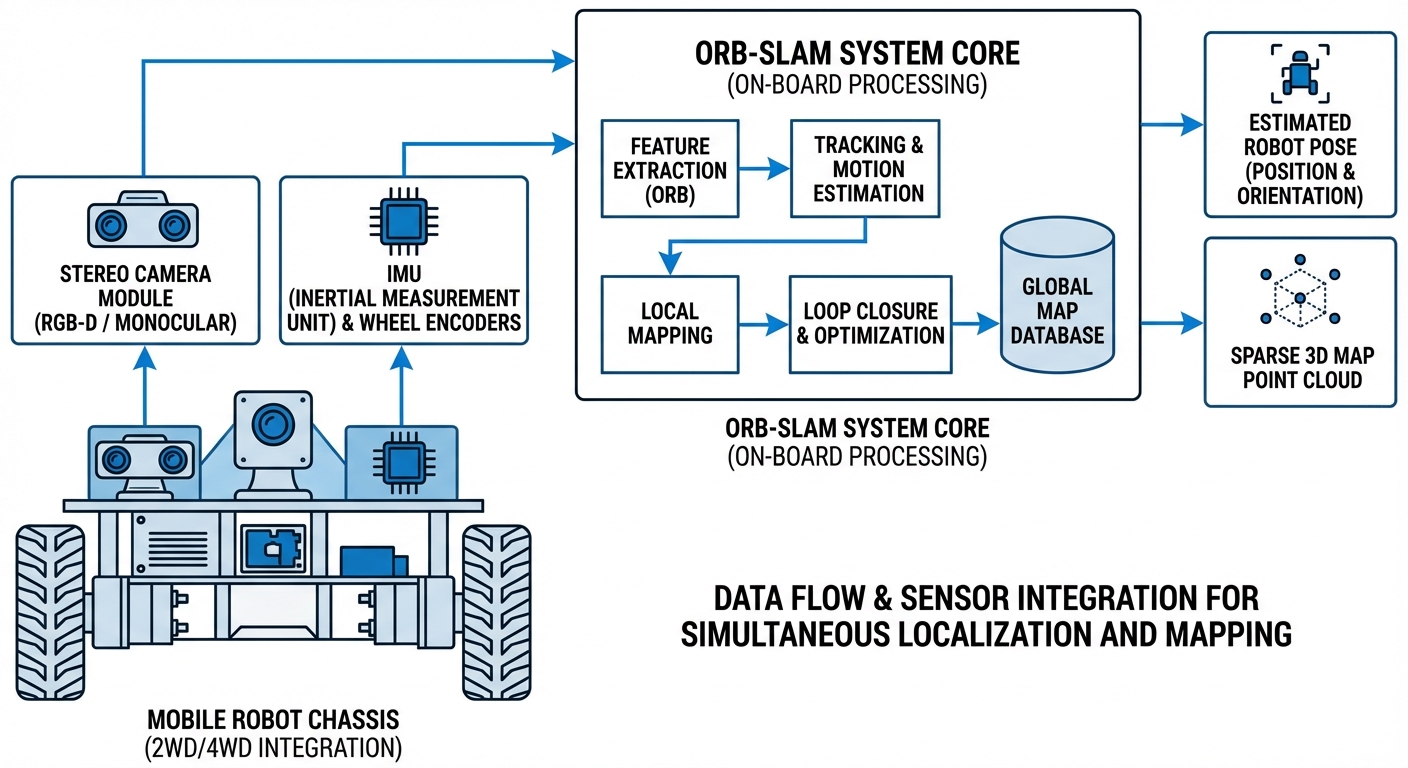

ORB-SLAM uses a multi-threaded architecture that separates tracking, local mapping, and loop closing. It processes camera input (monocular, stereo, or RGB-D) and extracts ORB features: corner-like keypoints in the image, each described by a binary vector.

As the AGV moves, the system tracks these features from frame to frame to estimate the camera's position and orientation. Simultaneously, a separate thread builds a sparse 3D point cloud of the environment and decides which keyframes provide new information worth saving.

If the robot revisits an area, the loop closing thread recognizes the scene using a bag-of-words approach. It then corrects the map, adjusting the keyframe poses so that the current position lines up with the earlier visit. This removes most of the drift that accumulates in any odometry-style estimate, whether visual or wheel-encoder-based.
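
The bag-of-words recognition step can be illustrated with a toy example: each image becomes a histogram of visual-word IDs, and two images are compared by an L1-based similarity score. This is a simplified stand-in for what a library such as DBoW2 does. It omits the vocabulary tree and term weighting, and the word IDs and keyframe names below are invented for the example.

```python
from collections import Counter


def bow_vector(word_ids):
    """L1-normalised histogram of visual-word IDs for one image."""
    counts = Counter(word_ids)
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


def l1_similarity(v1, v2):
    """Score in [0, 1]: 1 for identical histograms, 0 for no shared words."""
    words = set(v1) | set(v2)
    l1 = sum(abs(v1.get(w, 0.0) - v2.get(w, 0.0)) for w in words)
    return 1.0 - 0.5 * l1


# Hypothetical visual-word IDs; a real system would quantise ORB descriptors
# against a pre-trained vocabulary to obtain them.
keyframes = {
    "dock": [3, 3, 17, 42, 42, 42, 88],
    "aisle_7": [5, 9, 9, 21, 60, 61, 77],
}
current_view = [3, 17, 17, 42, 42, 88, 90]

query = bow_vector(current_view)
scores = {name: l1_similarity(query, bow_vector(words))
          for name, words in keyframes.items()}
best = max(scores, key=scores.get)
print(best, scores)
```

Here the current view scores highest against the "dock" keyframe, which would make it a loop candidate. A real system would still verify the candidate geometrically before correcting the map.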

Real-World Applications

GPS-Denied Warehousing

Well suited to indoor forklifts and AGVs operating in large warehouses where GPS signals do not reach. In well-lit, well-textured scenes, ORB-SLAM can reach centimeter-level localization accuracy using affordable cameras rather than expensive laser scanners.

Hospital Service Robots

Delivery and disinfection robots rely on ORB-SLAM to navigate narrow corridors and dynamic environments. The visual nature helps distinguish landmarks in sterile environments with few geometric features.

Drone Inspection

UAVs performing infrastructure inspection inside tanks, tunnels, or under bridges use ORB-SLAM for stabilization and mapping when external positioning systems fail.

Last-Mile Delivery

Sidewalk robots utilize stereo ORB-SLAM to gauge depth and avoid pedestrians while building a reusable map of urban neighborhoods for repeated delivery routes.

Frequently Asked Questions

What is the difference between ORB-SLAM2 and ORB-SLAM3?

ORB-SLAM3 is the successor to ORB-SLAM2 and introduces Visual-Inertial SLAM (VI-SLAM). It tightly integrates IMU (Inertial Measurement Unit) data with visual data, providing much higher robustness during fast motions or low-texture situations where cameras alone might fail. It also supports multi-map merging.

Does ORB-SLAM require a Stereo Camera or can it use Monocular?

It supports Monocular, Stereo, and RGB-D configurations. However, Monocular SLAM suffers from "scale ambiguity" (it doesn't know the true size of objects). For AGVs requiring precise metric navigation, Stereo or RGB-D is recommended to obtain real-world scale automatically.
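
Stereo recovers metric scale because the distance between the two cameras (the baseline) is known. For a rectified pair, depth follows from the pixel disparity as Z = f * B / d. A minimal sketch, with a made-up focal length and baseline and a hypothetical `stereo_depth` helper:

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point seen in a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Made-up rig: 700 px focal length, 12 cm baseline.
for disparity in (42.0, 21.0, 4.2):
    print(disparity, stereo_depth(700.0, 0.12, disparity))
```

With these numbers the three disparities correspond to roughly 2 m, 4 m, and 20 m. Halving the disparity doubles the depth, so depth resolution degrades for distant points. A monocular camera has no known baseline, which is why its map is only defined up to an unknown scale factor.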

How does ORB-SLAM compare to LiDAR-based SLAM (e.g., GMapping)?

LiDAR SLAM generally measures geometry more accurately and is largely unaffected by lighting, but it requires more expensive sensors. ORB-SLAM is a visual SLAM method: it is much more cost-effective, and camera images carry richer appearance information than laser scans, though it is more sensitive to lighting conditions and textureless walls.

Is ORB-SLAM computationally expensive for embedded hardware?

It is relatively efficient due to the use of binary features, but it is still CPU intensive. Running it on a Raspberry Pi is difficult for real-time applications; typically, an NVIDIA Jetson, Intel NUC, or similar edge AI computer is recommended for smooth operation on an AGV.

How does it handle dynamic environments (moving people/forklifts)?

Standard ORB-SLAM assumes a static environment. While it has outlier rejection schemes that can ignore small moving objects, significant dynamic activity (like a crowded warehouse aisle) can degrade tracking accuracy. In these cases, it is often combined with dynamic object masking algorithms.
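
One simple form of dynamic object masking is to discard keypoints that fall inside regions flagged as moving objects before they reach the tracker. The sketch below assumes the bounding boxes come from some external object detector. That detector, and the `filter_static_keypoints` helper, are hypothetical and not part of ORB-SLAM itself.

```python
def filter_static_keypoints(keypoints, dynamic_boxes):
    """Drop keypoints that fall inside any box flagged as a moving object.

    keypoints: list of (u, v) pixel coordinates.
    dynamic_boxes: list of (u_min, v_min, u_max, v_max) pixel boxes.
    """
    def inside(point, box):
        u, v = point
        u_min, v_min, u_max, v_max = box
        return u_min <= u <= u_max and v_min <= v <= v_max

    return [kp for kp in keypoints
            if not any(inside(kp, box) for box in dynamic_boxes)]


keypoints = [(50, 60), (210, 180), (400, 300), (620, 90)]
person_box = (180, 100, 260, 400)  # assumed detector output for a pedestrian
static = filter_static_keypoints(keypoints, [person_box])
print(static)
```

Only the keypoint on the pedestrian is removed. The trade-off is that a crowded scene can leave too few static features to track, so masking reduces the problem rather than eliminating it.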

What happens if the robot gets "Lost"?

ORB-SLAM has a relocalization module. If tracking is lost (e.g., camera covered or lights turn off), the system switches to relocalization mode. Once the camera sees a previously mapped area with recognizable features, it snaps the robot's pose back to the correct location on the map.

Can I save and reuse the map generated by ORB-SLAM?

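Yes, although support depends on the version. The sparse map (point cloud and keyframes) can be serialized and saved to disk: ORB-SLAM3 includes options for saving and loading its map, while for ORB-SLAM2 this is usually added through community forks. When the robot starts up the next day, it can load this map and relocalize against it without having to explore the environment from scratch.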

Is there ROS (Robot Operating System) integration?

Yes. The ORB-SLAM repositories include ROS examples, and community-maintained ROS 2 wrappers are available. This makes it easier to integrate into a standard robotics stack, publishing pose and odometry topics that navigation stacks (like Nav2) can consume.

What kind of camera features are ideal for ORB-SLAM?

Global shutter cameras are highly recommended over rolling shutter cameras to prevent image distortion during motion. A wide field of view (FOV) is also beneficial as it allows features to remain in the frame longer, improving tracking stability.
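
To compare candidate lenses, the horizontal field of view of an ideal pinhole camera can be estimated from the image width and the focal length in pixels. This is a rough sketch with made-up numbers and a hypothetical `horizontal_fov_deg` helper. It ignores lens distortion, so it does not apply to fisheye optics.

```python
import math


def horizontal_fov_deg(image_width_px, focal_px):
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(image_width_px / (2.0 * focal_px)))


narrow = horizontal_fov_deg(640, 800)  # longer lens
wide = horizontal_fov_deg(640, 320)    # shorter lens
print(round(narrow, 1), round(wide, 1))
```

For the same sensor, the shorter focal length roughly doubles the field of view, so a feature stays in frame through a larger rotation of the robot.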

What are the limitations in low-texture environments?

ORB-SLAM relies on extracting corners and distinct points. In environments with plain white walls or repetitive floor patterns, it may fail to find enough features to track. In such cases, a visual-inertial configuration or artificial fiducial markers (such as AprilTags) placed in the environment can help.

Is ORB-SLAM open source for commercial use?

The original ORB-SLAM2 and ORB-SLAM3 are released under the GPLv3 license. This generally means that if you distribute software that incorporates it, you must release the corresponding source code under the same license. For proprietary commercial products, you may need to negotiate a commercial license with the authors.

How dense is the map created by ORB-SLAM?

ORB-SLAM creates a "Sparse" map, meaning it only tracks specific feature points, not a full 3D mesh. While excellent for localization, this sparse cloud is often insufficient for obstacle avoidance, requiring a separate depth camera or simple sonar array for collision prevention.

Ready to implement ORB-SLAM in your fleet?

Our AGV platforms come pre-configured with advanced visual SLAM capabilities, reducing your development time from months to days.
