Loop Closure Detection

Eliminate drift in your AGV's positioning system by recognizing previously visited locations. Loop closure is the critical component in SLAM that transforms a drifting trajectory into a globally consistent map for precise autonomous navigation.

Core Concepts

Drift Correction

Corrects the error that accumulates in wheel odometry and IMU measurements. Without loop closure, the robot's estimated position drifts further from its true position the longer it travels, and nothing bounds that error.
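To make the effect concrete, here is a minimal sketch of dead reckoning with a small, constant heading bias. The function names, the 0.1 m step length and the 0.05 degree-per-step bias are illustrative assumptions, not values from a real AGV.

```python
import math

def dead_reckon(steps, step_len, heading_bias_rad):
    """Integrate odometry for a robot that believes it is turning slightly."""
    x = y = theta = 0.0
    for _ in range(steps):
        theta += heading_bias_rad          # unmodelled bias added every step
        x += step_len * math.cos(theta)
        y += step_len * math.sin(theta)
    return x, y

def drift_after(steps, step_len=0.1, heading_bias_rad=math.radians(0.05)):
    """Distance between the estimated end point and the true end point."""
    est_x, est_y = dead_reckon(steps, step_len, heading_bias_rad)
    true_x, true_y = steps * step_len, 0.0  # the robot actually drove straight
    return math.hypot(est_x - true_x, est_y - true_y)

if __name__ == "__main__":
    for n in (100, 500, 1000):
        print(n, "steps ->", round(drift_after(n), 3), "m of drift")
```

In this toy model the position error keeps growing with distance travelled, which is the behaviour loop closure is meant to bound.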

Place Recognition

Uses visual features (for example, indexed with Bag of Words) or LiDAR scan matching to determine whether the current environment signature matches an entry in the historical database.

Pose Graph Optimization

Once a loop is detected, the algorithm adds a constraint edge to the pose graph. An optimization library (such as g2o or GTSAM) then redistributes the error across the entire trajectory.

Perceptual Aliasing

Addresses the challenge where two distinct locations look identical (e.g., identical warehouse aisles). Robust geometric verification is required to prevent false positive closures.

Global Consistency

Keeps the generated map topologically correct. When the robot returns to its starting point, the start and end of the trajectory line up in the map, which is what makes long-term path planning and navigation reliable.

Multi-Session SLAM

Enables robots to merge maps from different operating times. A robot can "wake up," recognize where it is based on previous maps, and continue operation seamlessly.

How It Works

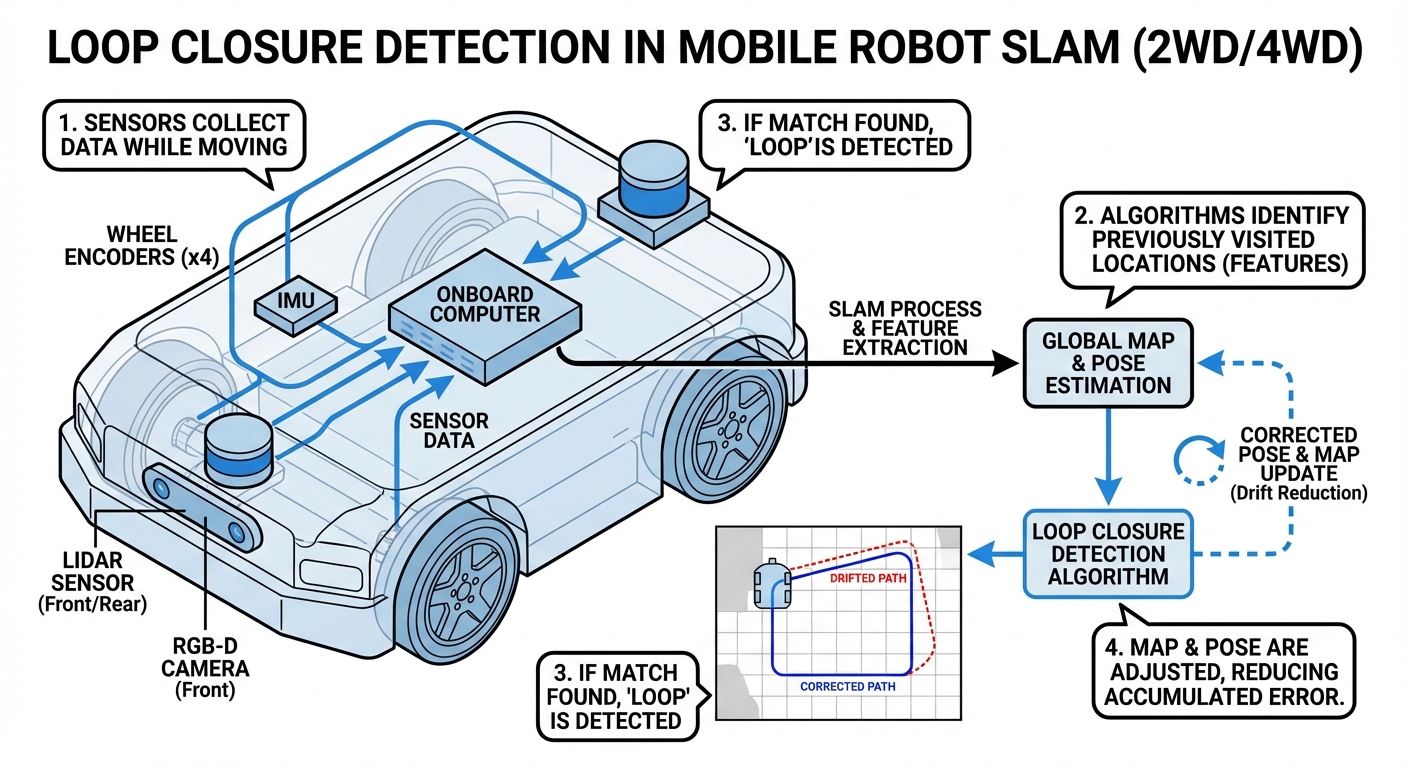

Loop closure detection operates in the background of the SLAM (Simultaneous Localization and Mapping) process. As the AGV moves, it continuously scans its environment using LiDAR or stereo cameras, extracting key geometric features or visual descriptors.

These features are converted into a simplified signature and queried against a historical database of locations the robot has previously visited. When a high-confidence match is found, the system calculates the relative transformation between the current pose and the historical pose.
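In practice the relative transformation is measured by registering the two scans or images against each other. The sketch below only shows the 2D pose bookkeeping around that step: how a relative pose is expressed, and how the one predicted by the drifting estimate compares with the measured one. The function names are hypothetical, poses are assumed to be (x, y, theta) tuples in a common world frame, and the residual is a simplified component-wise difference.

```python
import math

def wrap_angle(a):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))

def relative_pose(pose_a, pose_b):
    """Express pose_b in the coordinate frame of pose_a."""
    xa, ya, ta = pose_a
    xb, yb, tb = pose_b
    dx, dy = xb - xa, yb - ya
    c, s = math.cos(ta), math.sin(ta)
    # Rotate the world-frame offset into pose_a's frame.
    return (c * dx + s * dy, -s * dx + c * dy, wrap_angle(tb - ta))

def loop_residual(est_hist, est_curr, measured_rel):
    """Difference between the measured and the predicted relative pose.

    est_hist / est_curr: current estimates of the historical and current pose.
    measured_rel: relative pose obtained from scan or image registration.
    """
    pred = relative_pose(est_hist, est_curr)
    return (measured_rel[0] - pred[0],
            measured_rel[1] - pred[1],
            wrap_angle(measured_rel[2] - pred[2]))
```

A residual near zero means the estimate already agrees with the measurement; a large residual is the drift that the new constraint will ask the optimizer to remove.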

Finally, a "loop" constraint is added to the pose graph. A back-end optimization solver minimizes the total error in the graph, effectively "snapping" the current trajectory back onto the known map and removing most of the accumulated drift in a single optimization pass.
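The way a solver spreads the error can be illustrated with a one-dimensional toy pose graph. This is a sketch under strong assumptions: poses are scalars, every odometry edge has the same variance, and the first pose is fixed at zero, so the least-squares solution has a closed form. Real back ends solve a nonlinear version of this problem iteratively. The function name and the default variances are hypothetical.

```python
def close_loop_1d(odometry, loop_measurement, odom_var=1.0, loop_var=1e-6):
    """Least-squares poses for a 1D chain with one loop-closure edge.

    odometry: measured displacement of each step (x[i+1] - x[i]).
    loop_measurement: measured displacement from the first to the last pose.
    Returns the optimized poses, with the first pose fixed at 0.0.
    """
    n = len(odometry)
    # How much the chained odometry disagrees with the loop measurement.
    discrepancy = loop_measurement - sum(odometry)
    # Equal-variance edges share the correction equally; a small loop_var
    # means the loop measurement is trusted far more than odometry.
    per_edge = discrepancy * odom_var / (n * odom_var + loop_var)
    poses = [0.0]
    for u in odometry:
        poses.append(poses[-1] + u + per_edge)
    return poses

if __name__ == "__main__":
    # Drive out four steps and back four steps; outbound odometry over-reads.
    odometry = [1.05] * 4 + [-1.0] * 4
    print(close_loop_1d(odometry, loop_measurement=0.0))
```

Every pose along the chain moves a little, rather than only the last one, which is why walls and obstacles mapped earlier in the run also shift when a loop closes.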

Real-World Applications

Large-Scale Warehousing

In facilities spanning over 100,000 sq ft, wheel slippage causes significant odometry drift. Loop closure allows AGVs to reset their position error every time they pass a known intersection or charging station.

Dynamic Factory Floors

Factories change layout frequently with moving pallets and personnel. Robust loop closure ensures robots can re-localize even when up to 60% of the environment's visual features have been occluded or moved.

Hospital Logistics

Hospitals feature long, repetitive corridors that look identical (perceptual aliasing). Advanced loop closure uses distinct landmarks (nurses' stations, artwork) to differentiate between floors and wings accurately.

Outdoor Patrol Robots

GPS signals can be blocked by tall buildings or trees. Loop closure allows outdoor security robots to maintain an accurate map of a perimeter by recognizing key landmarks like gates or building corners.

Frequently Asked Questions

What is the difference between Loop Closure and Local Localization?

Local localization (odometry or scan matching) tracks the robot's movement incrementally from the previous frame, which accumulates small errors over time. Loop closure is a global process: it recognizes a place visited long ago, measures how far the current estimate has drifted relative to that earlier pose, and corrects the entire map history to remove the accumulated drift.

How does the system handle "False Positive" loop closures?

False positives are catastrophic because they warp the map incorrectly. Systems use geometric verification (such as RANSAC) to confirm that the physical geometry of the matched scenes agrees within a tight tolerance, and temporal consistency checks to confirm that a match persists over several frames before the loop is accepted.
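The temporal consistency check can be sketched as a small filter that only accepts a loop after several consecutive frames match neighbouring places. The class name, the three-hit requirement and the allowed index gap are hypothetical choices for illustration; geometric verification is not shown here.

```python
class TemporalConsistencyFilter:
    """Accept a loop only after several consecutive, mutually close matches."""

    def __init__(self, required_hits=3, max_index_gap=2):
        self.required_hits = required_hits
        self.max_index_gap = max_index_gap
        self._last_match = None
        self._streak = 0

    def update(self, candidate_id):
        """Feed the best match for the current frame (or None if no match).

        candidate_id is the index of the matched historical keyframe.
        Returns True once the streak is long enough to accept the loop.
        """
        if candidate_id is None:
            self._last_match, self._streak = None, 0
            return False
        if (self._last_match is not None
                and abs(candidate_id - self._last_match) <= self.max_index_gap):
            self._streak += 1          # consistent with the previous frame
        else:
            self._streak = 1           # start a new streak
        self._last_match = candidate_id
        return self._streak >= self.required_hits
```

A single spurious match, or a match that jumps to an unrelated part of the map, resets the streak instead of closing a loop.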

Does Loop Closure work in changing lighting conditions?

Visual SLAM systems can struggle with lighting changes, but modern descriptors (like ORB or learned features) are designed to be somewhat invariant to brightness. LiDAR-based loop closure is generally immune to lighting conditions, making it superior for 24/7 operations in varying light.

What is "Perceptual Aliasing" and why is it a risk?

Perceptual aliasing occurs when two different places look identical to the sensors, such as two identical aisles in a warehouse. If the robot incorrectly closes a loop between these two places, the map will fold over on itself; we prevent this using covariance checks and graph consistency tests.
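One common form of covariance check is a chi-square gate on the loop residual: a candidate is rejected when it disagrees with the current estimate by more than the estimated uncertainty can explain. The sketch below assumes a diagonal covariance over (x, y, theta) for simplicity; the function name is hypothetical, and 7.815 is the 95% quantile of the chi-square distribution with three degrees of freedom.

```python
CHI2_95_3DOF = 7.815  # 95% quantile, chi-square with 3 degrees of freedom

def passes_covariance_gate(residual, variances, threshold=CHI2_95_3DOF):
    """Return True if the residual is plausible given the uncertainty.

    residual: (dx, dy, dtheta) between measured and predicted relative pose.
    variances: per-component variances (diagonal covariance, an assumption).
    """
    # Squared Mahalanobis distance for a diagonal covariance matrix.
    d2 = sum(r * r / v for r, v in zip(residual, variances))
    return d2 <= threshold

if __name__ == "__main__":
    variances = (0.04, 0.04, 0.01)
    print(passes_covariance_gate((0.1, 0.1, 0.02), variances))  # plausible
    print(passes_covariance_gate((3.0, 0.0, 0.0), variances))   # next aisle over
```

A gate like this only helps while the uncertainty is smaller than the spacing between look-alike places, so it complements appearance-based and geometric checks rather than replacing them.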

How computationally expensive is Loop Closure Detection?

Searching the entire database of past frames is expensive. To manage this, we use techniques like Bag-of-Words (BoW) for fast retrieval and limit the search space to keyframes within a certain radius of the robot's estimated position, allowing it to run in real-time on embedded hardware.
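Limiting the search space can be as simple as filtering keyframes by distance before running the more expensive appearance comparison. In this sketch the function name and the keyframe layout (index, x, y) are assumptions, and the extra rule that skips very recent keyframes is an addition not described above; it is there so the robot does not "close a loop" with the frame it just left.

```python
import math

def candidates_within_radius(keyframes, est_xy, radius, current_index,
                             min_index_gap=20):
    """Return indices of keyframes worth comparing against.

    keyframes: iterable of (index, x, y) tuples for stored keyframes.
    est_xy: the robot's current estimated (x, y) position.
    radius: search radius in metres; should cover the expected drift.
    min_index_gap: ignore keyframes newer than this many indices ago.
    """
    selected = []
    for index, x, y in keyframes:
        if current_index - index < min_index_gap:
            continue  # too recent to count as a revisit
        if math.hypot(x - est_xy[0], y - est_xy[1]) <= radius:
            selected.append(index)
    return selected
```

A linear scan like this is adequate for a sketch; a spatial index would be the usual choice once the keyframe count grows large.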

Can Loop Closure help if the robot is "kidnapped"?

Yes, this is known as global relocalization. If a robot is picked up and moved (kidnapped), it loses its local track. Loop closure algorithms run globally, identifying the new location based purely on sensor data, allowing the robot to snap back to the correct position on the map.

Which sensors are best for Loop Closure: LiDAR or Camera?

Cameras provide rich texture information, making them excellent for distinguishing places that look geometrically similar but have different signs or colors. LiDAR provides precise geometry, making it better for large structural environments. Sensor fusion often yields the best results.

What happens to the map when a loop is closed?

When a loop is closed, a graph optimization is triggered, typically using a nonlinear least-squares solver such as Levenberg-Marquardt. It distributes the accumulated error along the whole trajectory, causing the map to shift and align, closing gaps and straightening the mapped positions of walls and obstacles.

Does Loop Closure work in highly dynamic environments?

Dynamic environments (people walking, forklifts moving) pose a challenge. Effective algorithms filter out dynamic objects from the scan or image data before processing, relying only on static features like walls, pillars, and racking for reliable place recognition.

What is Bag-of-Words (BoW) in this context?

Bag-of-Words is a technique adapted from text search. Each visual feature descriptor in an image is assigned to the nearest entry in a pre-built "vocabulary" of representative descriptors (visual words), so the image is reduced to a histogram of word counts. This allows the system to compare the current view to thousands of past views extremely quickly by checking for shared "words" (features).
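A toy version of the idea fits in a few lines. This sketch assumes two-dimensional float descriptors and a three-word vocabulary purely for readability; real systems use much larger vocabularies over high-dimensional or binary descriptors, usually with weighting schemes that are not shown here. The function names are hypothetical.

```python
import math
from collections import Counter

def quantize(descriptors, vocabulary):
    """Map each descriptor to its nearest visual word and count the words."""
    histogram = Counter()
    for d in descriptors:
        word = min(range(len(vocabulary)),
                   key=lambda i: math.dist(d, vocabulary[i]))
        histogram[word] += 1
    return histogram

def cosine_similarity(h1, h2):
    """Similarity of two word histograms, from 0.0 (disjoint) to 1.0."""
    dot = sum(h1[w] * h2[w] for w in h1.keys() & h2.keys())
    n1 = math.sqrt(sum(v * v for v in h1.values()))
    n2 = math.sqrt(sum(v * v for v in h2.values()))
    if n1 == 0.0 or n2 == 0.0:
        return 0.0
    return dot / (n1 * n2)

if __name__ == "__main__":
    vocabulary = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    view_a = quantize([(0.1, 0.2), (9.8, 0.1), (9.9, -0.2)], vocabulary)
    view_b = quantize([(0.3, -0.1), (10.2, 0.3), (10.1, 0.0)], vocabulary)
    view_c = quantize([(0.1, 9.9), (-0.2, 10.3)], vocabulary)
    print(cosine_similarity(view_a, view_b))  # same place, similar words
    print(cosine_similarity(view_a, view_c))  # different place
```

Comparing short histograms is far cheaper than matching raw descriptors pairwise, which is where the speed-up comes from.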

How frequently should loop closure be attempted?

It is typically performed on "Keyframes," which are selected based on distance or rotation thresholds (e.g., every 1 meter or 20 degrees). Attempting it every single frame is computationally wasteful and unnecessary, as drift accumulates slowly.
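A keyframe rule of this kind might look like the sketch below, using the 1 meter and 20 degree figures from the example above as defaults. The function name and the (x, y, theta) pose layout are assumptions.

```python
import math

def is_new_keyframe(last_keyframe, pose,
                    dist_thresh=1.0, rot_thresh=math.radians(20)):
    """Decide whether the current pose should become a keyframe.

    last_keyframe, pose: (x, y, theta) with theta in radians.
    Returns True when the robot has moved or turned far enough.
    """
    dx = pose[0] - last_keyframe[0]
    dy = pose[1] - last_keyframe[1]
    # Wrap the heading change so that 359 degrees counts as 1 degree.
    dtheta = pose[2] - last_keyframe[2]
    dtheta = abs(math.atan2(math.sin(dtheta), math.cos(dtheta)))
    return math.hypot(dx, dy) >= dist_thresh or dtheta >= rot_thresh
```

Loop closure is then attempted only when this returns True, and the new pose replaces `last_keyframe` for the next comparison.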

Can Loop Closure fail?

Yes. If the environment is completely featureless (a long blank corridor) or if the robot travels a path with no intersections or returns, loops cannot be detected. In these cases, the system relies entirely on the accuracy of the local odometry sensors.