LiDAR SLAM

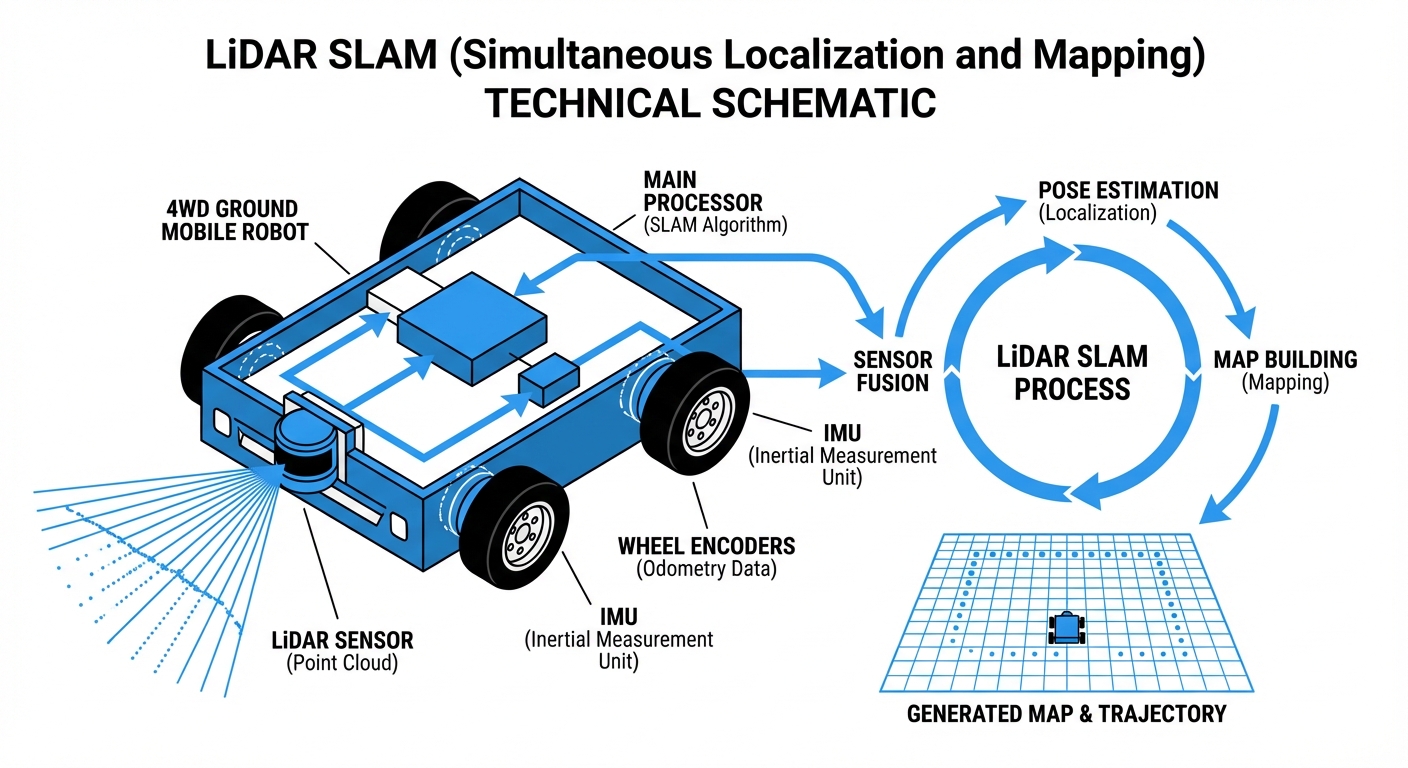

Simultaneous Localization and Mapping (SLAM) enables Automated Guided Vehicles (AGVs) to navigate complex environments without physical guides. By using laser range data to build a map while tracking the vehicle's location within it, LiDAR SLAM delivers centimeter-level precision for modern automation.

Core Concepts

Point Clouds

The raw data generated by the LiDAR sensor. Thousands of laser pulses per second create a dense set of geometric points representing the robot's immediate surroundings.

Pose Estimation

The algorithm calculates the robot's position (x, y) and orientation (theta) relative to the map by analyzing the shift in scan data between time steps.

Scan Matching

A critical process where the current LiDAR scan is aligned with previous scans or the global map, using algorithms such as Iterative Closest Point (ICP) or the Normal Distributions Transform (NDT), to determine how the robot has moved.
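To make the idea concrete, here is a minimal point-to-point ICP sketch in 2D using NumPy. It is an illustration under simplifying assumptions (small motion, brute-force nearest neighbours, no outlier rejection), not the implementation used by any particular SLAM product. The function names and the L-shaped test wall are hypothetical.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping paired 2D points src onto dst."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)        # 2x2 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:                   # guard against a reflection
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp_2d(scan, reference, iterations=20):
    """Align `scan` to `reference`; returns the accumulated rotation and translation."""
    R_total, t_total = np.eye(2), np.zeros(2)
    current = scan.copy()
    for _ in range(iterations):
        # Brute-force nearest neighbour: pair each scan point with its closest reference point.
        dists = np.linalg.norm(current[:, None, :] - reference[None, :, :], axis=2)
        matched = reference[dists.argmin(axis=1)]
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total

# Hypothetical data: an L-shaped wall corner sampled every 25 cm.
xs = np.arange(0.0, 4.01, 0.25)
ys = np.arange(0.25, 3.01, 0.25)
reference = np.vstack([np.column_stack([xs, np.zeros_like(xs)]),
                       np.column_stack([np.zeros_like(ys), ys])])

# The same corner as seen after a small, assumed robot motion (2 degrees, 10 cm, 5 cm).
theta = np.deg2rad(2.0)
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta),  np.cos(theta)]])
t_true = np.array([0.10, 0.05])
scan = reference @ R_true.T + t_true

R_est, t_est = icp_2d(scan, reference)
aligned = scan @ R_est.T + t_est               # should land back on the reference points
```

The recovered transform is the inverse of the simulated motion. Production systems typically replace the brute-force search with a k-d tree and add outlier rejection.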

Loop Closure

The ability to recognize a previously visited location. This triggers an optimization that "closes the loop," correcting accumulated drift errors in the map.
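The optimization can be illustrated with a deliberately tiny, one-dimensional pose graph. In this sketch the robot drives out and back along a line, the return leg is under-reported by odometry, and a single loop-closure constraint says the last pose coincides with the first. All numbers and the `LOOP_WEIGHT` value are assumptions chosen for illustration. Real systems solve the same kind of least-squares problem over 2D or 3D poses.

```python
import numpy as np

# Measured steps between consecutive poses (m). The true path returns to the start,
# but the return leg is under-reported, so dead reckoning drifts.
odometry = np.array([1.0, 1.0, 1.0, -0.9, -0.9, -0.9])
dead_reckoned = np.concatenate([[0.0], np.cumsum(odometry)])

n = len(odometry)            # unknown poses x1..x6 (x0 is fixed at 0)
LOOP_WEIGHT = 10.0           # assumed: how much more we trust the loop closure

A = np.zeros((n + 1, n))
b = np.zeros(n + 1)
for i, step in enumerate(odometry):
    A[i, i] = 1.0            # +x_{i+1}
    if i > 0:
        A[i, i - 1] = -1.0   # -x_i
    b[i] = step              # odometry constraint: x_{i+1} - x_i = step
A[n, n - 1] = LOOP_WEIGHT    # loop closure: x_6 - x_0 = 0
b[n] = 0.0

solution, *_ = np.linalg.lstsq(A, b, rcond=None)
optimized = np.concatenate([[0.0], solution])

print("end pose before loop closure:", dead_reckoned[-1])
print("end pose after loop closure: ", optimized[-1])
```

The least-squares solution spreads the accumulated error across every step instead of leaving it all at the end of the trajectory.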

Occupancy Grid

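The resulting map format, typically a 2D grid where each cell holds the probability that the space it covers is occupied. Values near 0 mean free, values near 1 mean occupied, and cells that have not been observed yet stay at an intermediate value (unknown).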
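A common way to maintain such a grid is to store log-odds per cell and add a fixed increment for every observation. The sketch below assumes that approach. The increment values and the hand-picked beam cells are hypothetical; a real system would trace each beam through the grid.

```python
import numpy as np

L_OCC = 0.85    # assumed log-odds increment when a beam ends in a cell
L_FREE = -0.4   # assumed log-odds increment when a beam passes through a cell

def probability(log_odds):
    """Convert log-odds back to an occupancy probability in [0, 1]."""
    return 1.0 / (1.0 + np.exp(-log_odds))

def integrate_beam(grid, free_cells, hit_cell):
    """Update the grid in place for one beam: traversed cells get freer, the end cell more occupied."""
    for r, c in free_cells:
        grid[r, c] += L_FREE
    grid[hit_cell] += L_OCC

grid = np.zeros((5, 5))     # log-odds 0 means probability 0.5, i.e. unknown

# Three scans see the same beam along row 2, ending at an obstacle in column 3.
for _ in range(3):
    integrate_beam(grid, free_cells=[(2, 0), (2, 1), (2, 2)], hit_cell=(2, 3))

p = probability(grid)
```

After a few consistent observations the hit cell moves toward 1, the traversed cells move toward 0, and untouched cells remain at 0.5.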

Sensor Fusion

LiDAR data is rarely used alone. SLAM algorithms fuse it with wheel odometry (from encoders) and IMU data to maintain accuracy during rapid movements.
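As a simplified illustration of fusion, the sketch below combines an odometry-based position prediction with a scan-matching measurement, weighting each by its variance (a one-dimensional Kalman update). The numbers and the function name `fuse` are assumptions. Real systems track full poses and fold in IMU data as well.

```python
def fuse(predicted, predicted_var, measured, measured_var):
    """One-dimensional Kalman update: blend a prediction and a measurement by their variances."""
    gain = predicted_var / (predicted_var + measured_var)
    estimate = predicted + gain * (measured - predicted)
    variance = (1.0 - gain) * predicted_var
    return estimate, variance

# Hypothetical values: odometry predicts 2.00 m (variance 0.04),
# scan matching measures 2.10 m (variance 0.01).
estimate, variance = fuse(2.00, 0.04, 2.10, 0.01)
print(estimate, variance)
```

The fused estimate sits closer to the more certain source, and its variance is lower than that of either input.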

How It Works

The SLAM process begins with the LiDAR sensor emitting laser pulses into the environment. These pulses bounce off obstacles (walls, racks, machines) and return to the sensor. By measuring each pulse's Time of Flight (ToF), the robot computes the distance to every surface the beam hits across a 360-degree field of view.
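The Time of Flight calculation itself is short: the pulse travels to the obstacle and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below shows that step and the conversion of one range reading into a point in the sensor frame. The function names are illustrative.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def tof_to_distance(round_trip_seconds):
    """Distance to the reflecting surface; the pulse covers it twice."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0

def polar_to_xy(distance, beam_angle_rad):
    """Convert one range reading at a given beam angle into sensor-frame x, y."""
    return distance * math.cos(beam_angle_rad), distance * math.sin(beam_angle_rad)

# A pulse that returns after roughly 66.7 nanoseconds hit something about 10 m away.
round_trip = 2 * 10.0 / SPEED_OF_LIGHT
distance = tof_to_distance(round_trip)
x, y = polar_to_xy(distance, math.radians(90))   # beam pointing to the robot's left
```

Repeating this for every beam angle in a sweep produces the point cloud for one scan.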

As the AGV moves, the SLAM algorithm compares each new scan against the previous one (Scan Matching). It looks for overlapping geometric features to calculate how far and in what direction the robot has traveled. Chaining these relative motion estimates onto the last known pose yields the robot's position in the map frame.
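Turning relative motion into a map-frame pose is a matter of composing transforms. The sketch below assumes a pose is a tuple (x, y, theta) and that each scan-matching result is a motion expressed in the robot's own frame. The function name `compose` is hypothetical.

```python
import math

def compose(pose, delta):
    """Apply a robot-frame motion (dx, dy, dtheta) to a map-frame pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    new_x = x + dx * math.cos(theta) - dy * math.sin(theta)
    new_y = y + dx * math.sin(theta) + dy * math.cos(theta)
    new_theta = theta + dtheta
    # Wrap the heading into (-pi, pi].
    new_theta = math.atan2(math.sin(new_theta), math.cos(new_theta))
    return new_x, new_y, new_theta

pose = (0.0, 0.0, 0.0)
# Drive 1 m forward while turning 90 degrees left, then drive 1 m forward again.
for delta in [(1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)]:
    pose = compose(pose, delta)
print(pose)
```

Because each step builds on the previous pose, small matching errors accumulate over time, which is the drift that loop closure later corrects.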

Simultaneously, the robot updates its internal map. If it encounters a new obstacle, it adds it to the Occupancy Grid. If a known obstacle is missing (like a moved pallet), the map is dynamically updated, allowing for flexible navigation in changing industrial environments.

Real-World Applications

Warehouse Intralogistics

AMRs (Autonomous Mobile Robots) utilize SLAM to navigate dynamic aisles where pallets and human workers are constantly moving, enabling efficient goods-to-person picking without magnetic tape guides.

Manufacturing Assembly

LiDAR-equipped tuggers deliver parts to assembly lines. SLAM allows these robots to re-route instantly if a path is blocked by forklifts or machinery, preventing line stoppages.

Healthcare Delivery

Hospital robots use precise SLAM to navigate narrow corridors and elevators, delivering linen, medicine, and meals while safely avoiding patients and sensitive medical equipment.

Hazardous Inspection

In environments dangerous to humans, such as chemical plants or nuclear facilities, SLAM enables robots to map the area and conduct inspections autonomously with high reliability.

Frequently Asked Questions

What is the difference between SLAM and Laser Guidance (LGV)?

Laser Guidance typically requires installing reflective targets on walls or columns, which the vehicle uses to triangulate its position. SLAM uses natural features (walls, racks, machines) and does not require modifying the infrastructure, making deployment faster and more flexible.

How does LiDAR SLAM handle dynamic environments with moving people?

Robust SLAM algorithms filter out transient data. By checking the consistency of objects over time, the system identifies moving entities (people, forklifts) as dynamic obstacles rather than permanent map features, allowing the robot to navigate around them without corrupting the map.
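One simple form of this consistency check is to count how often each map cell was seen occupied over a window of recent scans and keep only the cells that were occupied most of the time. The sketch below assumes that approach. The 80% threshold and the tiny grid are hypothetical, and a fuller version would also track whether each cell was visible at all in a given scan.

```python
import numpy as np

def static_cells(hit_history, min_ratio=0.8):
    """hit_history: boolean array (scans, rows, cols). True where a cell is occupied consistently."""
    return hit_history.mean(axis=0) >= min_ratio

history = np.zeros((10, 4, 4), dtype=bool)
history[:, 0, :] = True       # a wall along the top row, seen in every scan
history[3:5, 2, 1] = True     # a person standing in one cell for 2 of 10 scans

mask = static_cells(history)
```

The wall cells pass the check and would be written to the permanent map, while the briefly occupied cell is treated as a transient obstacle.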

Does LiDAR SLAM work in complete darkness?

Yes. Since LiDAR is an active sensor that emits its own light (laser pulses), it does not depend on ambient lighting. It works equally well in pitch-black warehouses and dimly lit factory floors, unlike camera-based visual SLAM, which needs adequate scene lighting.

What happens if the environment changes significantly (e.g., layout change)?

Minor changes (moved pallets) are handled dynamically. However, significant structural changes (moving walls or racking) usually require a "remap" or a partial map update. Many modern fleet management packages support partial map stitching, so only the changed areas need to be updated.

Can LiDAR SLAM detect glass or transparent surfaces?

This is a known limitation. Laser beams often pass through glass or reflect unpredictably off mirrors. To mitigate this, ultrasonic (sonar) sensors are often fused with LiDAR data, or "virtual walls" are drawn on the digital map to prevent the robot from approaching glass barriers.

What is the typical localization accuracy of 2D LiDAR SLAM?

In a standard industrial environment with sufficient static features, 2D LiDAR SLAM typically achieves a localization accuracy of ±1 cm to ±5 cm. This precision is sufficient for most docking, charging, and pallet handling operations.

How does the "Kidnapped Robot Problem" apply here?

If a robot is physically moved while powered off, it wakes up with no knowledge of its location. Modern SLAM systems use global localization algorithms (like Monte Carlo Localization) to match the current scan against the entire known map and re-establish the robot's position.
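The core of Monte Carlo Localization can be shown in one dimension: spread position hypotheses (particles) over the whole map, weight each by how well it explains the current measurement, then resample. The sketch below assumes a 10 m corridor with a single end wall and Gaussian range noise. All constants are hypothetical, and a real system repeats this with full 2D scans and motion updates.

```python
import numpy as np

rng = np.random.default_rng(0)

WALL_X = 10.0          # assumed position of the corridor's end wall (m)
SIGMA = 0.2            # assumed standard deviation of range noise (m)

# The robot could be anywhere, so start with particles spread over the whole corridor.
particles = np.linspace(0.0, 10.0, 101)

measured_range = 3.0   # the LiDAR reports the wall 3 m ahead

# Weight each particle by the likelihood of the measurement from that position.
expected_range = WALL_X - particles
weights = np.exp(-0.5 * ((expected_range - measured_range) / SIGMA) ** 2)
weights /= weights.sum()

# Resample: likely positions are duplicated, unlikely ones die out.
particles = rng.choice(particles, size=particles.size, p=weights)
estimate = particles.mean()
print("estimated position:", estimate)
```

With a single wall the answer is unambiguous. In a real map several places can look alike, so the particle cloud usually needs a few scans and some movement before it collapses to one location.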

Is 3D LiDAR necessary, or is 2D sufficient?

For flat indoor floors (warehouses), 2D LiDAR is the industry standard due to lower cost and lower computational load. 3D LiDAR is preferred for outdoor terrain, ramps, or environments with overhanging obstacles that a 2D planar scan might miss.

How computationally expensive is LiDAR SLAM?

It is moderately intensive. While less demanding than processing HD video for Visual SLAM, it still requires a dedicated industrial PC or embedded board (like NVIDIA Jetson or Raspberry Pi 4+) to run scan matching and path planning in real time (20 Hz or faster).

Can LiDAR SLAM work in long, featureless corridors?

Long corridors create a "geometric degeneracy": consecutive scans look nearly identical as the robot moves forward, so scan matching cannot measure motion along the corridor. To compensate, SLAM relies heavily on wheel odometry and IMU data during these segments until unique features are detected again.
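One heuristic for detecting this situation is to look at the directions of the surface normals in a scan. If all normals point the same way (or exactly opposite), no surface constrains motion along the perpendicular axis. The sketch below assumes normals have already been estimated. The function names and the 5% ratio are hypothetical.

```python
import numpy as np

def constraint_eigenvalues(normals):
    """Eigenvalues (ascending) of the 2x2 sum of outer products of the surface normals."""
    return np.linalg.eigvalsh(normals.T @ normals)

def is_degenerate(normals, ratio=0.05):
    """True when one direction is constrained far more weakly than the other."""
    weakest, strongest = constraint_eigenvalues(normals)
    return weakest < ratio * strongest

# Corridor: two parallel walls, so every normal points along +y or -y.
corridor = np.array([[0.0, 1.0]] * 50 + [[0.0, -1.0]] * 50)

# Room: walls facing in both axes.
room = np.array([[0.0, 1.0]] * 25 + [[0.0, -1.0]] * 25 +
                [[1.0, 0.0]] * 25 + [[-1.0, 0.0]] * 25)

print(is_degenerate(corridor), is_degenerate(room))
```

When the check fires, a system can lower its trust in the scan-matching result along the weak direction and lean on odometry instead.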

What maintenance does the LiDAR sensor require?

Maintenance is minimal but critical. The lens must be kept clean of dust and oil, as obstructions can create "ghost" obstacles. Mechanical LiDARs also have moving parts that wear over time, whereas solid-state LiDARs have no moving parts to wear out and need little beyond lens cleaning.

Does SLAM replace safety sensors?

No. SLAM is for navigation. Safety is handled by certified safety PLCs and dedicated safety laser scanners (often yellow) that cut power to the motors immediately if a protective field is breached, regardless of what the SLAM map says.