Instance Segmentation

Unlock pixel-level understanding for your mobile fleet. Instance segmentation enables AGVs to not only recognize objects but also distinguish between individual entities of the same class, improving picking accuracy and dynamic navigation.

Core Concepts

Pixel-Level Masks

Unlike bounding boxes, instance segmentation produces a binary mask for each object, marking every pixel that belongs to it and defining its exact shape and contour for precise interaction.
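
To make the difference from a bounding box concrete, here is a minimal sketch using a toy mask. The grid, the function names, and the `fill_ratio` measure are illustrative assumptions, not part of any specific product API.

```python
# Toy 5x6 binary mask of an L-shaped object (1 = object pixel, 0 = background).
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]


def mask_to_bbox(mask):
    """Return (row_min, col_min, row_max, col_max), inclusive, for a non-empty mask."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


def mask_area(mask):
    """Number of pixels that belong to the object."""
    return sum(sum(row) for row in mask)


r0, c0, r1, c1 = mask_to_bbox(mask)
bbox_area = (r1 - r0 + 1) * (c1 - c0 + 1)

# Fraction of the bounding box that is actually object.
fill_ratio = mask_area(mask) / bbox_area
print(f"bbox={r0, c0, r1, c1}, fill ratio={fill_ratio:.2f}")
```

For this L-shaped object, half of the bounding box is background, which is the information a box alone cannot convey.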

Object Distinction

The system separates "Forklift A" from "Forklift B". This is critical for predicting independent trajectories of multiple moving agents in a shared space.

Mask R-CNN & YOLO

Builds on established architectures such as Mask R-CNN (two-stage, accuracy-oriented) or YOLOv8-seg (single-stage, speed-oriented) to balance inference speed with segmentation accuracy on edge devices.

Occlusion Handling

Standard models segment only the visible portion of an object. Models trained for amodal segmentation can additionally estimate the full shape of objects that are partially blocked by shelving units or other machinery.

Grasping Point Analysis

By determining the exact silhouette of an item, robotic arms on mobile platforms can calculate viable grasping points with high reliability.
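
As a rough illustration of how a silhouette can feed grasp planning, the sketch below picks the mask pixel nearest to the mask centroid. This is a simplifying assumption for demonstration only: real grasp planners typically also use depth data and gripper geometry, and the function names here are hypothetical.

```python
# Toy binary mask of an L-shaped item (1 = item pixel, 0 = background).
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]


def mask_pixels(mask):
    """List the (row, col) coordinates of all item pixels."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]


def mask_centroid(mask):
    """Mean (row, col) position of the item pixels."""
    pixels = mask_pixels(mask)
    n = len(pixels)
    return sum(r for r, _ in pixels) / n, sum(c for _, c in pixels) / n


def grasp_candidate(mask):
    """Return the item pixel closest to the centroid.

    For concave shapes the centroid itself can fall on background,
    so we snap to the nearest pixel that is actually on the item.
    """
    cr, cc = mask_centroid(mask)
    return min(mask_pixels(mask), key=lambda p: (p[0] - cr) ** 2 + (p[1] - cc) ** 2)


print("centroid:", mask_centroid(mask))
print("grasp candidate:", grasp_candidate(mask))
```

Here the centroid lands between rows, off the item, while the snapped candidate lies on it. A bounding-box center would give no such guarantee.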

Hardware Acceleration

Optimized for GPU and TPU integration, so that complex segmentation tasks stay within the latency budget of safety-critical navigation loops.

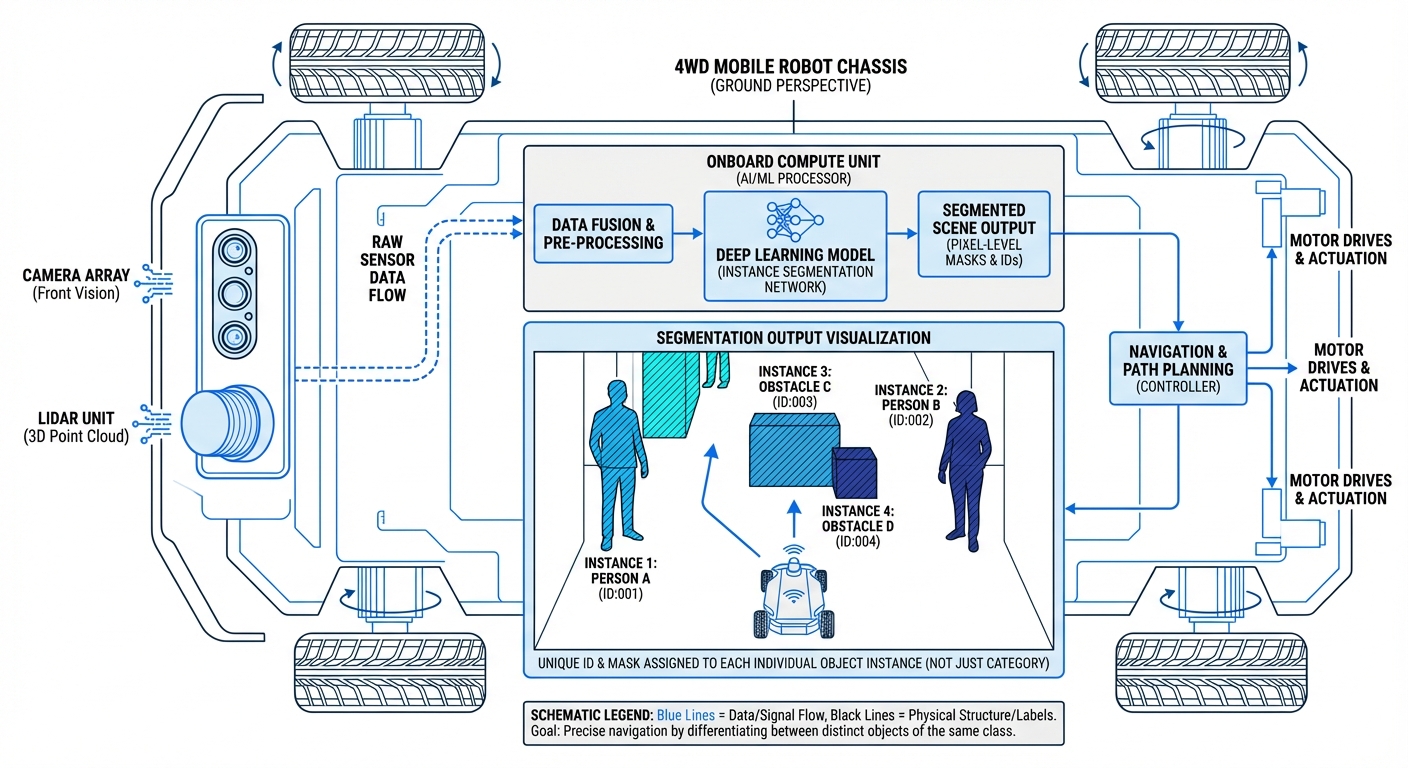

How It Works

Instance segmentation operates by combining object detection with semantic segmentation. First, the AGV's vision system identifies bounding boxes around potential objects of interest, such as pallets, humans, or obstacles.

Within each bounding box, a Fully Convolutional Network (FCN) generates a binary mask, classifying each pixel in the box as belonging either to the object or to the background.

The result is a rich semantic map where the robot understands that a cluster of pixels isn't just "obstacle" but specifically "Pallet #43," distinct from "Pallet #44" right next to it. This granularity is essential for tasks requiring physical manipulation or close-quarters maneuvering.
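
The box-then-mask flow described above can be sketched as follows. This is a simplified illustration, not a real detector: the detections are hand-written, the mask probabilities are assumed to already match the box size (real models usually predict a fixed-size mask and resize it), and all names are hypothetical.

```python
def paste_instance_masks(height, width, detections, threshold=0.5):
    """Build an instance-ID map: 0 = background, k = the k-th detection.

    Each detection carries the top-left corner of its box and a grid of
    per-pixel object probabilities for the area inside that box.
    """
    id_map = [[0] * width for _ in range(height)]
    for instance_id, det in enumerate(detections, start=1):
        top, left = det["box_top_left"]
        for dr, prob_row in enumerate(det["mask_probs"]):
            for dc, prob in enumerate(prob_row):
                # Threshold the probability to get the binary object/background decision.
                if prob >= threshold:
                    id_map[top + dr][left + dc] = instance_id
    return id_map


# Two hand-written detections of the same class, side by side.
detections = [
    {"label": "pallet", "box_top_left": (0, 0), "mask_probs": [[0.9, 0.8], [0.7, 0.2]]},
    {"label": "pallet", "box_top_left": (1, 2), "mask_probs": [[0.6, 0.9], [0.95, 0.9]]},
]

id_map = paste_instance_masks(3, 4, detections)
for row in id_map:
    print(row)
```

Both objects share the label "pallet", yet every pixel in the map carries the ID of one specific pallet.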

Real-World Applications

Warehouse Pallet Picking

AMRs use instance segmentation to distinguish between tightly packed pallets. This ensures the robot engages with the specific target load without disturbing adjacent inventory.

Agricultural Robotics

In automated harvesting, robots must distinguish individual fruits or plants from the foliage. Instance segmentation provides the exact boundaries needed for delicate picking operations.

Human-Robot Collaboration

Safety systems use segmentation to track the precise limb movements of human workers. This allows robots to slow down or reroute only when necessary, maintaining high throughput.

Defect Detection

On manufacturing lines, inspection robots identify specific scratches or dents on products. Segmentation allows for the measurement of the defect size to determine severity.
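
A defect mask can be converted to a physical size once the camera scale is known. The sketch below assumes a calibrated, fronto-parallel camera with a known millimetres-per-pixel scale. The scale and the severity thresholds are made-up values for illustration.

```python
# Toy binary mask of a single defect (1 = defect pixel).
defect_mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 0],
]


def defect_area_mm2(mask, mm_per_pixel):
    """Physical area = pixel count x area of one pixel."""
    pixel_count = sum(sum(row) for row in mask)
    return pixel_count * mm_per_pixel ** 2


def classify_severity(area_mm2, minor_max=1.0, major_min=5.0):
    """Map an area to a severity label using hypothetical thresholds."""
    if area_mm2 < minor_max:
        return "minor"
    if area_mm2 >= major_min:
        return "major"
    return "moderate"


area = defect_area_mm2(defect_mask, mm_per_pixel=0.5)  # assumed camera scale
print(f"{area} mm^2 -> {classify_severity(area)}")
```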

Frequently Asked Questions

How does Instance Segmentation differ from Semantic Segmentation?

Semantic segmentation treats all objects of the same class as a single entity (e.g., all "cars" are colored red). Instance segmentation identifies each object separately (e.g., "car 1" is red, "car 2" is blue), which is vital for counting items or interacting with specific objects.
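
The difference is easy to see in a small sketch. The maps and class names below are invented for illustration. The instance map keeps one ID per object, while the semantic map keeps only the class, so the object count is lost.

```python
from collections import Counter

# Instance map: 0 = background, other values are per-object IDs.
instance_map = [
    [1, 1, 0, 2, 2],
    [1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0],
    [3, 3, 3, 0, 0],
]
instance_class = {1: "pallet", 2: "pallet", 3: "forklift"}


def to_semantic(instance_map, instance_class):
    """Collapse instance IDs to class names, discarding object identity."""
    return [[instance_class.get(i, "background") for i in row] for row in instance_map]


def count_per_class(instance_class):
    """Count objects per class; only possible because instances are kept separate."""
    return Counter(instance_class.values())


semantic_map = to_semantic(instance_map, instance_class)
print(semantic_map[0])                  # both pallets now carry the same label
print(count_per_class(instance_class))  # the instance view still knows there are two
```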

Does Instance Segmentation require heavy GPU resources?

Traditionally, yes: it is more computationally expensive than simple bounding box detection. However, modern lightweight instance segmentation models like YOLOv8-seg or YOLACT are designed for real-time use and can run on edge devices like NVIDIA Jetson, making real-time processing feasible on AGVs.

How does it handle overlapping objects (occlusion)?

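Standard instance segmentation models predict the visible portion of each object and keep overlapping objects separate, so a partially hidden item still receives its own mask. Models trained for amodal segmentation go further and estimate the full shape of an object from its visible features. This allows the system to infer the boundaries of an object even if it is partially hidden behind another, which is crucial for cluttered warehouse environments.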

What is the typical latency for onboard processing?

Latency depends heavily on the hardware and model complexity. On an NVIDIA Jetson Orin running a standard model, you can achieve 30-60 FPS (roughly 17-33 ms per frame), which is sufficient for AGVs moving at standard warehouse speeds.
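
The relationship between frame rate, per-frame time, and distance travelled is simple arithmetic, sketched below. The 2.0 m/s vehicle speed is an assumed value for illustration, not a figure from this page.

```python
def frame_time_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def distance_per_frame_m(speed_m_s, fps):
    """Distance the vehicle covers between two consecutive frames."""
    return speed_m_s / fps


ASSUMED_SPEED_M_S = 2.0  # hypothetical AGV speed, chosen only for this example

for fps in (30, 60):
    print(
        f"{fps} FPS: {frame_time_ms(fps):.1f} ms/frame, "
        f"{distance_per_frame_m(ASSUMED_SPEED_M_S, fps) * 100:.1f} cm travelled per frame"
    )
```

At the assumed speed, the vehicle moves about 6.7 cm between frames at 30 FPS and about 3.3 cm at 60 FPS. Note that end-to-end latency can exceed the per-frame time if the pipeline buffers frames.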

Is annotation for training data more expensive?

Yes, annotating data for instance segmentation requires drawing precise polygons around objects rather than simple boxes. However, the use of synthetic data generation and auto-labeling tools is rapidly reducing this cost and time burden.

Can this technology work with transparent or reflective objects?

Transparent objects like shrink wrap or glass are challenging for standard RGB cameras. We recommend sensor fusion, combining RGB data with depth (RGB-D) or LiDAR intensity data, and training on specific datasets containing these materials.

How does lighting affect segmentation accuracy?

As with all vision systems, extreme contrast or darkness can degrade performance. However, segmentation networks are generally more robust to lighting changes than simple feature matchers because they learn contextual shape information, not just color or texture.

What is "Panoptic Segmentation" and should we use it?

Panoptic segmentation combines instance segmentation (things) and semantic segmentation (stuff like floor/walls). For AGVs, it provides the most complete understanding of the scene but is computationally heavier. It is best used when you need to navigate *and* understand the drivable surface simultaneously.
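
A minimal sketch of the idea, using invented toy maps: each pixel gets a class plus an instance ID, where an ID of 0 is assumed here to mean "stuff" with no individual identity. The encoding and function names are illustrative choices, not a standard API.

```python
STUFF_ID = 0  # assumed convention: instance ID 0 means "stuff"

semantic_map = [
    ["wall", "wall", "wall"],
    ["floor", "pallet", "pallet"],
    ["floor", "floor", "floor"],
]
instance_map = [
    [0, 0, 0],
    [0, 1, 1],
    [0, 0, 0],
]


def merge_panoptic(semantic_map, instance_map):
    """Pair each pixel's class with its instance ID."""
    return [list(zip(s_row, i_row)) for s_row, i_row in zip(semantic_map, instance_map)]


def drivable_pixels(panoptic, drivable_class="floor"):
    """Pixels that are drivable surface and not covered by any object."""
    return [
        (r, c)
        for r, row in enumerate(panoptic)
        for c, (cls, inst) in enumerate(row)
        if cls == drivable_class and inst == STUFF_ID
    ]


def things(panoptic):
    """Set of (class, instance ID) pairs for countable objects."""
    return {(cls, inst) for row in panoptic for cls, inst in row if inst != STUFF_ID}


panoptic = merge_panoptic(semantic_map, instance_map)
print("drivable:", drivable_pixels(panoptic))
print("things:", things(panoptic))
```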

Can we implement this on existing AGV hardware?

This depends on your current compute module. If your AGVs use older PLCs or basic microcontrollers, an upgrade to an AI-accelerated module (like a Jetson or TPU stick) is necessary to handle the neural network inference.

How reliable is it for safety-critical stop functions?

While highly accurate, AI-based segmentation is probabilistic. For safety certification (ISO 13849), it is typically used as a secondary awareness layer alongside certified safety LiDAR scanners and PLCs, rather than the sole safety trigger.
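
The layering can be pictured with the following sketch. It is purely illustrative: in a real system the certified stop path runs in safety-rated hardware, not in application code like this, and the function name, inputs, and speeds are hypothetical.

```python
def speed_command(safety_lidar_stop, segmentation_sees_person, nominal_speed, slow_speed):
    """Combine a certified stop signal with a non-certified awareness signal.

    The certified signal always wins. The segmentation signal can only make
    the vehicle more cautious; it can never override or release a stop.
    """
    if safety_lidar_stop:
        return 0.0
    if segmentation_sees_person:
        return min(nominal_speed, slow_speed)
    return nominal_speed


# Hypothetical speeds in m/s.
print(speed_command(False, False, nominal_speed=1.5, slow_speed=0.3))
print(speed_command(False, True, nominal_speed=1.5, slow_speed=0.3))
print(speed_command(True, False, nominal_speed=1.5, slow_speed=0.3))
```

The design choice is that a false negative from the neural network cannot defeat the stop, because the stop decision never depends on it.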

Does it assist with SLAM (Simultaneous Localization and Mapping)?

Yes. Combining segmentation with SLAM is often called "Semantic SLAM". By identifying dynamic objects (people, forklifts) via segmentation, the SLAM algorithm can filter them out of the map updates, resulting in much cleaner, static maps for long-term navigation.
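
The filtering step can be sketched as follows, assuming that map features are available as image pixel coordinates and that each instance ID has a known class. The data, the set of dynamic classes, and the function name are assumptions made for this example.

```python
DYNAMIC_CLASSES = {"person", "forklift"}  # assumed list of classes to exclude

# Instance map: 0 = background, other values are per-object IDs.
instance_map = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [2, 2, 0, 0],
]
instance_class = {1: "forklift", 2: "pallet"}

# Hypothetical feature locations as (row, col) pixel coordinates.
features = [(0, 0), (1, 1), (2, 2), (3, 0), (3, 3)]


def filter_static_features(features, instance_map, instance_class):
    """Keep only features that do not fall on a dynamic object."""
    static = []
    for row, col in features:
        instance_id = instance_map[row][col]
        # Background (ID 0) has no class entry, so .get() returns None and it is kept.
        if instance_class.get(instance_id) not in DYNAMIC_CLASSES:
            static.append((row, col))
    return static


static_features = filter_static_features(features, instance_map, instance_class)
print(static_features)
```

Only the features on the forklift are dropped. Features on the pallet are kept in this sketch, although a deployment might also treat movable loads as semi-static.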

What is the typical deployment timeline for a custom solution?

For a custom environment (e.g., unique packaging), the process of data collection, annotation, training, and optimization typically takes 4-8 weeks. Using pre-trained models on standard objects (pallets, people) can reduce this to days.