Event Cameras

Revolutionize your AGV's perception with bio-inspired neuromorphic vision. Unlike traditional frame-based sensors, event cameras capture scene changes with microsecond timing, enabling high-speed navigation and robust obstacle avoidance in extreme lighting conditions.

Core Concepts

Asynchronous Sensing

Each pixel operates independently and transmits data only when the brightness it sees changes. This mimics the biological retina and avoids the redundant data that standard frame cameras produce for unchanging parts of the scene.

Microsecond Latency

Sensing latency drops dramatically. Event cameras offer temporal resolution in the microsecond range, so a fast-moving obstacle can be reported almost as soon as it appears rather than at the next frame.
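
To put the latency difference in numbers, the sketch below computes how far an obstacle moves before each sensor can report it. The 2 m/s obstacle speed and the 10 µs event latency are assumptions chosen for illustration. The calculation also ignores processing and actuation time, which add to the total for both sensors.

```python
def distance_during_latency(speed_m_s: float, latency_s: float) -> float:
    """Distance in metres an object moving at speed_m_s covers during latency_s."""
    return speed_m_s * latency_s


# Assumed, illustrative figures (not sensor specifications):
obstacle_speed_m_s = 2.0            # e.g. a forklift crossing the AGV's path
frame_interval_s = 1.0 / 30.0       # worst-case wait for the next frame at 30 fps
event_latency_s = 10e-6             # assumed pixel latency of ~10 microseconds

frame_gap_m = distance_during_latency(obstacle_speed_m_s, frame_interval_s)
event_gap_m = distance_during_latency(obstacle_speed_m_s, event_latency_s)

print(f"30 fps camera: {frame_gap_m * 1000:.1f} mm before the next frame")
print(f"event camera:  {event_gap_m * 1000:.3f} mm before the first event")
```

Under these assumptions the obstacle moves about 66.7 mm before a 30 fps camera can see it, versus about 0.02 mm before the first event.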

High Dynamic Range

With a dynamic range exceeding 120 dB, these sensors keep working in scenes that contain both deep shadow and direct sunlight, which is crucial at warehouse loading docks.
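
Sensor datasheets usually express dynamic range as 20·log10 of the ratio between the brightest and darkest illuminance a pixel can handle. Assuming that convention, the conversion below shows that 120 dB corresponds to a million-to-one ratio. The 60 dB figure for a conventional sensor is an assumed typical value, used only for comparison.

```python
import math


def db_to_ratio(db: float) -> float:
    """Convert a dynamic range in dB to a brightest/darkest ratio (20*log10 convention)."""
    return 10 ** (db / 20)


def ratio_to_db(ratio: float) -> float:
    """Inverse of db_to_ratio."""
    return 20 * math.log10(ratio)


print(f"120 dB -> {db_to_ratio(120):,.0f}:1")  # 120 dB -> 1,000,000:1
print(f"60 dB -> {db_to_ratio(60):,.0f}:1")    # assumed typical frame sensor: 1,000:1
```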

Low Power & Bandwidth

By transmitting only changes in the scene, event cameras typically need far less bandwidth than frame cameras. This lowers the computational load and helps extend the battery life of mobile robots.

No Motion Blur

Because pixels do not integrate light over a shared exposure time, event cameras preserve sharp edges even during high-speed rotation or vibration, which supports accurate SLAM.
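
A rough way to see the difference is to compute how far an edge smears across the sensor during one integration period. The sketch uses hypothetical values: a pure yaw rotation of 90°/s, a focal length of 600 px, a 10 ms frame exposure, and 1 µs event timestamps. It also ignores lens distortion and the finite bandwidth of real event pixels.

```python
import math


def image_speed_px_s(yaw_rate_deg_s: float, focal_px: float) -> float:
    """Approximate image-plane speed near the optical centre for a pure yaw rotation."""
    return math.radians(yaw_rate_deg_s) * focal_px


def smear_px(speed_px_s: float, integration_s: float) -> float:
    """Distance an edge travels across the sensor during one integration window."""
    return speed_px_s * integration_s


speed = image_speed_px_s(90.0, 600.0)   # hypothetical robot turn rate and lens
frame_blur = smear_px(speed, 10e-3)     # frame camera with a 10 ms exposure
event_spread = smear_px(speed, 1e-6)    # edge motion within one 1 us timestamp step

print(f"frame blur: {frame_blur:.2f} px, event spread: {event_spread:.5f} px")
```

In this idealized setup the frame camera smears the edge over roughly 9.4 pixels. Within one event timestamp step, the edge moves only a tiny fraction of a pixel.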

Data Sparsity

The output is a sparse stream of events rather than dense matrices. This allows for highly efficient algorithms that only process active parts of the image.
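
As a minimal illustration of sparse processing, the function below accumulates per-pixel event counts in a dictionary keyed by (x, y). The work therefore scales with the number of events rather than with the full 1280×720 pixel grid. The three events are made-up values, and the (x, y, t, p) tuple order follows the description later on this page.

```python
from collections import Counter
from typing import Iterable, Tuple

Event = Tuple[int, int, float, int]  # (x, y, t, p)


def active_pixel_counts(events: Iterable[Event]) -> Counter:
    """Count events per pixel, touching only pixels that actually fired."""
    counts: Counter = Counter()
    for x, y, _t, _p in events:
        counts[(x, y)] += 1
    return counts


# Hypothetical events: two at pixel (10, 5), one at (11, 5).
events = [(10, 5, 0.000010, 1), (11, 5, 0.000012, 1), (10, 5, 0.000030, -1)]
counts = active_pixel_counts(events)
width, height = 1280, 720
print(len(counts), "of", width * height, "pixels need processing")
```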

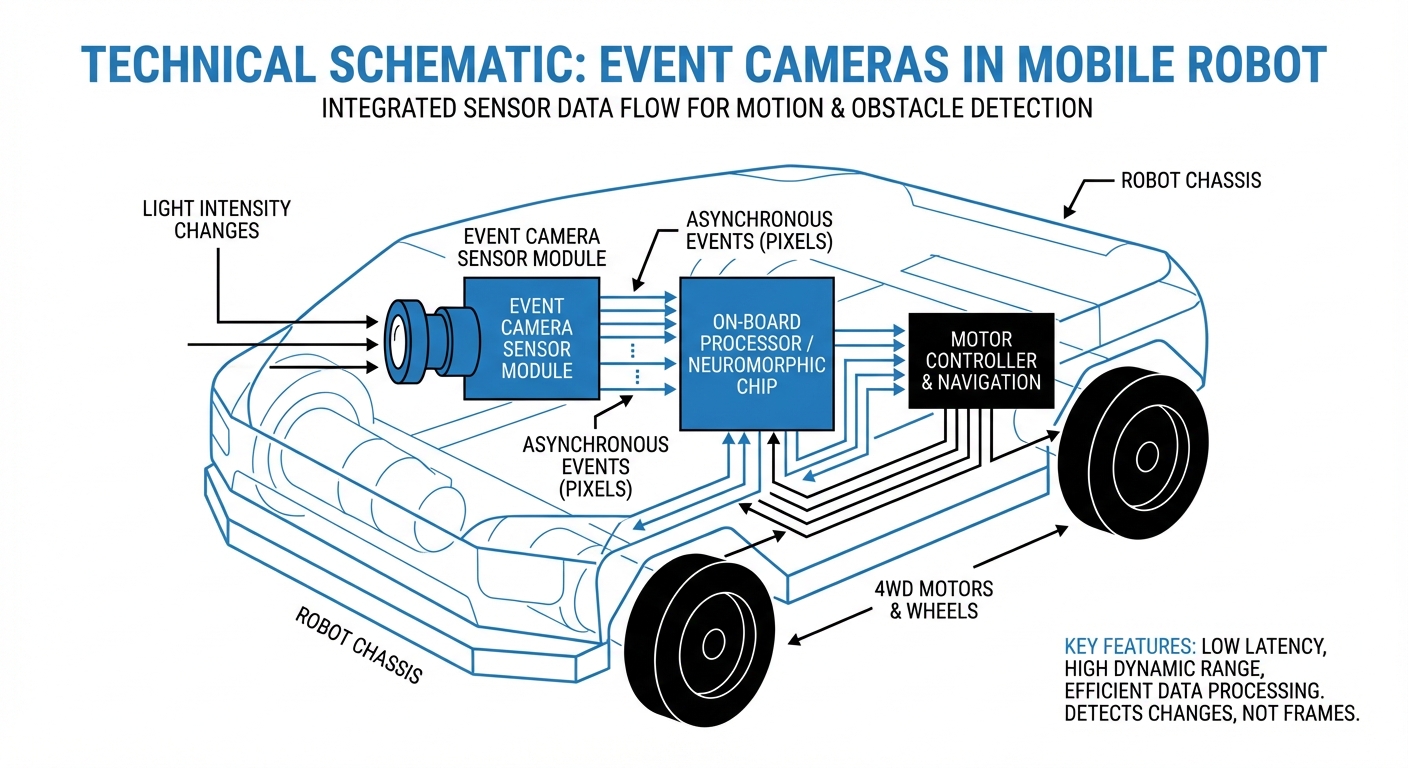

How It Works

Traditional cameras operate stroboscopically, taking snapshots at a fixed frame rate (e.g., 30 fps). This creates blind spots between frames and generates massive amounts of redundant data when the scene is static.

Event cameras, or neuromorphic sensors, work differently. Each pixel adjusts its own sampling rate based on visual changes. When a pixel detects a change in log intensity that exceeds a set threshold, it immediately emits an "event."
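
The per-pixel rule can be simulated for a single pixel. This is a simplified model. It assumes a contrast threshold of 0.2 in natural-log units and a sampled intensity trace, whereas real pixels are analog circuits with noise, per-pixel threshold mismatch, and a refractory period. Each time the log intensity moves one threshold step away from the pixel's reference level, the sketch emits an event and shifts the reference by one step.

```python
import math
from typing import List, Tuple


def pixel_events(samples: List[Tuple[float, float]],
                 threshold: float = 0.2) -> List[Tuple[float, int]]:
    """Emit (t, polarity) each time log intensity crosses a threshold step.

    samples: (timestamp_s, intensity) pairs with intensity > 0.
    threshold: assumed contrast threshold in natural-log units.
    A large change can cross several steps and so produce several events;
    this sketch stamps them all with the sample time.
    """
    events: List[Tuple[float, int]] = []
    ref = math.log(samples[0][1])  # reference level, moved after each event
    for t, intensity in samples[1:]:
        log_i = math.log(intensity)
        while abs(log_i - ref) >= threshold:
            polarity = 1 if log_i > ref else -1
            ref += polarity * threshold
            events.append((t, polarity))
    return events


# Hypothetical trace: steady, then brighter, steady, then darker.
trace = [(0.000, 100.0), (0.001, 100.0), (0.002, 150.0), (0.003, 150.0), (0.004, 80.0)]
for t, p in pixel_events(trace):
    print(f"t={t * 1000:.0f} ms polarity={p:+d}")
```

Constant intensity produces no output at all. That silence is why static regions of the scene cost nothing.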

This event is a packet containing the pixel coordinates (x, y), the timestamp (t), and the polarity (p) of the change (brighter or darker). The result is a continuous, asynchronous stream of data that represents the true dynamics of the scene with sub-millisecond precision.
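
One way to represent this stream in code is a small immutable record per event, plus a helper that slices out a time window. Slicing by time is a common first step before building event frames or feeding a tracker. Integer microsecond timestamps and the +1/-1 polarity encoding are assumptions of this sketch, and vendor SDKs define their own types.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    x: int   # pixel column
    y: int   # pixel row
    t: int   # timestamp in microseconds (assumed unit)
    p: int   # polarity: +1 = brighter, -1 = darker


def events_in_window(stream: List[Event], t_start: int, t_end: int) -> List[Event]:
    """Return the events with t_start <= t < t_end, preserving stream order."""
    return [e for e in stream if t_start <= e.t < t_end]


stream = [Event(3, 4, 100, +1), Event(5, 4, 250, -1), Event(3, 5, 900, +1)]
early = events_in_window(stream, 0, 500)
print(len(early), "events in the first 500 us")
```

Because the stream arrives sorted by timestamp, a production implementation could replace the linear scan with a binary search on `t`.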

Real-World Applications

High-Speed Warehouse Logistics

AGVs equipped with event cameras can travel aisles at higher speeds without motion blur degrading their localization. They can read barcodes on the fly and detect protruding obstacles with very low latency, which can significantly increase throughput.

Outdoor & Mixed-Light Navigation

For robots moving between dark indoor storage and bright outdoor loading bays, standard cameras can be blinded while their exposure adjusts. Event cameras handle these lighting transitions far more gracefully, maintaining a lock on visual features for reliable SLAM.

Predictive Maintenance & Inspection

By analyzing high-frequency vibrations that are invisible to the human eye and to standard cameras, event-based vision can detect micro-movements in machinery and flag developing faults in conveyor belts or robotic arms before they fail.
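
A simple version of this analysis estimates a vibration frequency from the timestamps of ON events at a single pixel watching a vibrating edge. The sketch makes two assumptions:

* Each vibration cycle produces one short burst of ON events.
* Bursts are separated by a quiet gap of at least `min_gap_us`.

Both the gap value and the timestamps in the example are hypothetical. A fuller analysis would aggregate many pixels, for example with a spectral method.

```python
from statistics import median
from typing import List, Optional


def burst_starts(times_us: List[int], min_gap_us: int) -> List[int]:
    """Collapse each burst of events into its first timestamp.

    A new burst begins when the gap since the previous event is at least min_gap_us.
    """
    starts: List[int] = []
    prev: Optional[int] = None
    for t in sorted(times_us):
        if prev is None or t - prev >= min_gap_us:
            starts.append(t)
        prev = t
    return starts


def vibration_frequency_hz(on_times_us: List[int], min_gap_us: int = 500) -> float:
    """Estimate frequency from the median interval between ON-event bursts."""
    starts = burst_starts(on_times_us, min_gap_us)
    if len(starts) < 2:
        raise ValueError("need at least two bursts to estimate a period")
    periods = [b - a for a, b in zip(starts, starts[1:])]
    return 1_000_000 / median(periods)


# Hypothetical ON-event timestamps (microseconds) from one pixel.
on_times = [0, 15, 30, 2000, 2020, 4000, 4010, 6000]
print(f"{vibration_frequency_hz(on_times):.1f} Hz")  # 500.0 Hz
```

Using the median interval rather than the mean keeps a single missed or spurious burst from skewing the estimate.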

Safety & Collision Avoidance

The ultra-low sensor latency lets mobile robots detect falling objects or crossing pedestrians within microseconds of the motion occurring, leaving more of the reaction-time budget for planning and braking. This speed is critical in collaborative environments where humans and robots work side by side.

Frequently Asked Questions

How does an event camera differ from a standard global shutter camera?

Standard cameras capture entire image frames at fixed intervals, which adds latency and causes motion blur whenever the exposure time is long relative to the motion. Event cameras record changes asynchronously at the pixel level, offering microsecond time resolution and virtually eliminating motion blur.

Why are event cameras better for high-speed AGVs?

High-speed AGVs that rely on standard sensors suffer from motion blur, which can cause localization loss. Event cameras provide a continuous stream of sharp edges even at high speed, helping the robot keep track of its position and detect obstacles without pausing.

Can event cameras see in the dark?

They perform very well in low light. Because they detect relative changes rather than absolute intensity, they can operate in very dark environments (down to 0.08 lux) without the heavy noise typical of high-gain standard sensors. However, background noise events do become more frequent as light levels fall.

What happens if the AGV and the scene are completely static?

If absolutely nothing moves and lighting is constant, an event camera generates no data. In practice, micro-vibrations or slight ego-motion of the AGV usually generate enough events to visualize the scene. Alternatively, the event camera can be paired with a standard frame camera to cover static intervals.
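
A stack that pairs the two sensors needs some rule for deciding when the scene is too static to rely on events. Here is a minimal sketch of such a rule, assuming a hypothetical threshold of 1,000 events per second measured over a short window. A real system would tune this per sensor and would likely blend the two sources rather than switch between them abruptly.

```python
def choose_source(event_count: int, window_s: float, min_rate_hz: float = 1_000.0) -> str:
    """Decide which sensor to rely on for the current time window.

    Below min_rate_hz events per second the scene is treated as static and the
    paired frame camera is used; otherwise the event stream is used.
    min_rate_hz is a hypothetical tuning parameter.
    """
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    rate_hz = event_count / window_s
    return "event" if rate_hz >= min_rate_hz else "frame"


print(choose_source(event_count=50, window_s=0.1))       # 500 ev/s -> frame
print(choose_source(event_count=20_000, window_s=0.1))   # 200,000 ev/s -> event
```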

Do I need a GPU to process event camera data?

Not necessarily. Because the data is sparse (only changes are recorded), the processing load can be much lower than for frame-based video. Simple obstacle avoidance can run on low-power microcontrollers, though heavy SLAM workloads may still benefit from GPU or other hardware acceleration.

What is the data format output by these sensors?

The standard output is an Address-Event Representation (AER). It is a stream of tuples containing (x, y, t, p), representing the pixel coordinates, timestamp, and polarity of the brightness change.
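
Over a hardware link, each tuple is typically packed into a fixed-width binary word. The layout below is hypothetical and does not match any vendor's format, since real formats are vendor-specific and documented separately. It uses a 64-bit word containing:

* 11 bits each for x and y, enough for a 1280×720 sensor.
* 1 polarity bit.
* 41 bits of microsecond timestamp.

```python
from typing import Tuple

X_BITS = 11                      # 0..2047, enough for a 1280-pixel-wide sensor
Y_BITS = 11                      # 0..2047
P_SHIFT = X_BITS + Y_BITS        # polarity bit position (22)
T_SHIFT = P_SHIFT + 1            # timestamp starts at bit 23
T_BITS = 64 - T_SHIFT            # 41 bits of microseconds, roughly 25 days


def pack_event(x: int, y: int, t_us: int, p: int) -> int:
    """Pack (x, y, t, p) into one 64-bit word using the hypothetical layout above."""
    if not (0 <= x < (1 << X_BITS) and 0 <= y < (1 << Y_BITS) and 0 <= t_us < (1 << T_BITS)):
        raise ValueError("field out of range for this layout")
    p_bit = 1 if p > 0 else 0
    return x | (y << X_BITS) | (p_bit << P_SHIFT) | (t_us << T_SHIFT)


def unpack_event(word: int) -> Tuple[int, int, int, int]:
    """Recover (x, y, t, p) from a word produced by pack_event."""
    x = word & ((1 << X_BITS) - 1)
    y = (word >> X_BITS) & ((1 << Y_BITS) - 1)
    p = 1 if (word >> P_SHIFT) & 1 else -1
    t_us = word >> T_SHIFT
    return x, y, t_us, p


word = pack_event(1279, 719, 123_456_789, -1)
print(hex(word), unpack_event(word))
```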

Are there existing software libraries for integration?

Yes. Major manufacturers provide SDKs (like Prophesee's Metavision). Additionally, there is growing support in ROS (Robot Operating System) with drivers and packages specifically designed for event-based processing.

How does bandwidth usage compare to standard cameras?

Bandwidth usage is highly variable and content-dependent. In static scenes, it is near zero. In highly dynamic scenes, it increases, but on average, it is significantly lower than uncompressed video streams of equivalent temporal resolution.
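
Back-of-the-envelope arithmetic makes the comparison concrete. All of the following figures are illustrative assumptions, not measurements:

* 8 bytes per event.
* Uncompressed 8-bit monochrome frames at 1280×720.
* A 1,000 fps frame rate as a stand-in for "equivalent temporal resolution". Matching true microsecond resolution would take a million frames per second.
* Event rates of 10,000 and 2,000,000 events per second for quiet and busy scenes.

```python
def frame_bandwidth_mb_s(width: int, height: int, fps: float, bytes_per_pixel: int = 1) -> float:
    """Uncompressed frame-camera data rate in megabytes per second."""
    return width * height * fps * bytes_per_pixel / 1e6


def event_bandwidth_mb_s(events_per_s: float, bytes_per_event: int = 8) -> float:
    """Event-camera data rate in megabytes per second (assumed bytes per event)."""
    return events_per_s * bytes_per_event / 1e6


# Assumed figures: 8-bit mono 1280x720 video at 1,000 fps (1 ms resolution),
# versus an event stream at a quiet and a busy event rate.
print(f"frames at 1,000 fps: {frame_bandwidth_mb_s(1280, 720, 1000):.1f} MB/s")
print(f"events, quiet scene: {event_bandwidth_mb_s(10_000):.2f} MB/s")
print(f"events, busy scene:  {event_bandwidth_mb_s(2_000_000):.1f} MB/s")
```

Heavy motion or flickering light can push event rates far higher. That is why the event-camera figure depends so strongly on scene content.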

Can event cameras replace LiDAR on my robot?

They are often used as a complement rather than a direct replacement. While event cameras offer superior speed and lower cost than 3D LiDAR, LiDAR provides direct depth measurement. Sensor fusion of both yields the most robust navigation stack.

Is calibration difficult for event sensors?

Calibration can be challenging because a standard checkerboard held still generates no events. Blinking calibration patterns (for example, a flashing checkerboard shown on a monitor) or a continuously moving pattern are needed to produce the events required for intrinsic calibration.

What is the typical resolution of an event camera?

Resolutions are generally lower than in consumer cameras, typically ranging from QVGA (320×240) to 720p HD (1280×720). However, thanks to the high temporal resolution, this spatial resolution is sufficient for many high-precision robotics tasks.

Are they cost-effective for mass production fleets?

Currently, they are more expensive than standard CMOS sensors due to lower production volumes. However, as adoption in the automotive and mobile sectors grows, costs are falling, making them increasingly viable for commercial AGV fleets.