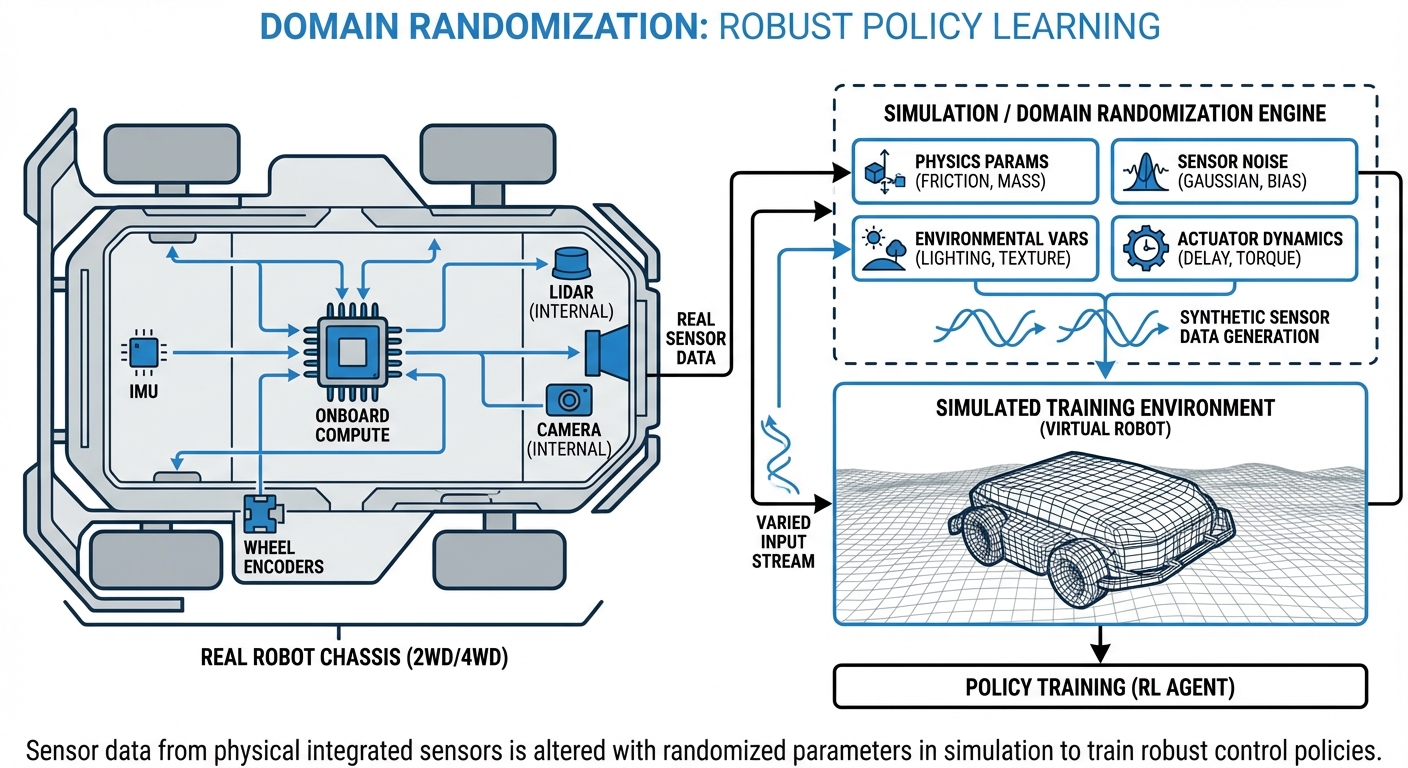

Domain Randomization

Bridge the "Sim-to-Real" gap by training automated guided vehicles (AGVs) in highly variable simulated environments. Domain Randomization helps your mobile robots generalize to the unpredictable nature of the real world, reducing deployment failures.

Core Concepts

Visual Variance

Randomizing textures, colors, and lighting conditions prevents the neural network from overfitting to specific visual markers, forcing it to learn geometric shapes and depth.

Dynamics Randomization

Varying physical properties like friction coefficients, payload mass, and motor damping ensures the control policy remains robust even when hardware degrades.

Sensor Noise

Injecting Gaussian noise into simulated LIDAR and camera feeds mimics real-world sensor imperfections, preventing the robot from trusting simulation-perfect data.
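As a minimal sketch of the idea, the snippet below adds zero-mean Gaussian noise to a simulated LIDAR scan. The function name, the 2 cm standard deviation, and the 10 m maximum range are illustrative assumptions, not values from any particular sensor or simulator.

```python
import random


def add_gaussian_noise(ranges, sigma=0.02, max_range=10.0, rng=None):
    """Return a noisy copy of a simulated LIDAR scan (distances in metres).

    sigma and max_range are hypothetical defaults chosen for illustration.
    """
    if rng is None:
        rng = random.Random()
    noisy = []
    for r in ranges:
        # Zero-mean Gaussian perturbation of each beam's distance.
        value = r + rng.gauss(0.0, sigma)
        # Clamp to the interval a real sensor could physically report.
        noisy.append(min(max(value, 0.0), max_range))
    return noisy


clean_scan = [2.0, 2.5, 3.0, 9.99]
noisy_scan = add_gaussian_noise(clean_scan, sigma=0.02, rng=random.Random(0))
```

Passing a seeded `random.Random` keeps a training run reproducible while still giving every scan a different perturbation.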

Distractor Objects

Spawning random geometric shapes and "distractors" in the simulation teaches the AGV to ignore irrelevant background clutter and focus on navigation targets.

Procedural Environments

Instead of static maps, walls and obstacles are generated procedurally. This prevents the robot from memorizing a specific layout, encouraging general navigation skills.
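One way to picture procedural generation is a small occupancy grid whose obstacles are re-sampled for every episode. The sketch below is an assumption-laden toy: the grid size, obstacle sizes, and the reachability check that rejects unsolvable layouts are all illustrative choices rather than part of any specific simulator.

```python
import random
from collections import deque

FREE, WALL = 0, 1


def generate_layout(width, height, n_obstacles, rng):
    """Build a walled grid and drop random rectangular obstacles inside it."""
    grid = [[FREE] * width for _ in range(height)]
    for x in range(width):
        grid[0][x] = grid[height - 1][x] = WALL
    for y in range(height):
        grid[y][0] = grid[y][width - 1] = WALL
    for _ in range(n_obstacles):
        w, h = rng.randint(1, 3), rng.randint(1, 3)
        x0 = rng.randint(1, width - 1 - w)
        y0 = rng.randint(1, height - 1 - h)
        for y in range(y0, y0 + h):
            for x in range(x0, x0 + w):
                grid[y][x] = WALL
    return grid


def is_reachable(grid, start, goal):
    """Breadth-first search over free cells; positions are (x, y) tuples."""
    if grid[start[1]][start[0]] == WALL or grid[goal[1]][goal[0]] == WALL:
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        if (x, y) == goal:
            return True
        # The border walls guarantee neighbours stay inside the grid.
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if grid[ny][nx] == FREE and (nx, ny) not in seen:
                seen.add((nx, ny))
                queue.append((nx, ny))
    return False


def sample_solvable_layout(width, height, n_obstacles, start, goal, rng, max_tries=100):
    """Re-sample until the goal is reachable, so every episode is solvable."""
    for _ in range(max_tries):
        grid = generate_layout(width, height, n_obstacles, rng)
        if is_reachable(grid, start, goal):
            return grid
    raise RuntimeError("no solvable layout found; try fewer obstacles")


layout = sample_solvable_layout(12, 10, 6, (1, 1), (10, 8), random.Random(0))
```

Rejecting layouts with no free path is a design choice: it keeps the randomization wide without feeding the policy episodes it cannot possibly complete.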

Robust Transfer

The ultimate goal: the real world becomes just another variation of the simulation. If the model handles a wide range of simulated variation and noise, real-world conditions are likely to fall within what it has already learned to handle.

How It Works

Traditional simulation training often fails because simulation is too perfect. Real-world sensors have grain, motors have slight delays, and lighting is never uniform. Domain Randomization addresses this by deliberately varying the simulation instead of trying to perfect it.

Instead of trying to create one photorealistic simulation (which is computationally expensive and difficult), we generate thousands of "non-realistic" variations. We randomize the color of the floors, the intensity of the lights, and the friction of the wheels in every training episode.

By exposing the neural network to this vast spectrum of parameters, the model learns to identify the invariant features (the things that actually matter for navigation) and to ignore the noise. When deployed to a real physical AGV, the robot perceives the real world as just another variation within the distribution it has already seen.
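The per-episode sampling described above can be sketched as follows. The parameter names and numeric ranges are hypothetical, and the step that pushes the sampled values into a simulator is left out because it depends entirely on the simulator in use.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EpisodeParams:
    floor_rgb: tuple        # floor colour, each channel in [0, 1)
    light_intensity: float  # relative brightness multiplier
    wheel_friction: float   # wheel-to-floor friction coefficient


# Hypothetical ranges chosen only for illustration.
RANGES = {
    "light_intensity": (0.3, 1.5),
    "wheel_friction": (0.2, 1.0),
}


def sample_episode_params(rng):
    """Draw a fresh, independent set of parameters for one training episode."""
    return EpisodeParams(
        floor_rgb=tuple(rng.random() for _ in range(3)),
        light_intensity=rng.uniform(*RANGES["light_intensity"]),
        wheel_friction=rng.uniform(*RANGES["wheel_friction"]),
    )


rng = random.Random(42)
# One parameter set per episode; a real pipeline would apply each set to the
# simulator before resetting the environment.
episodes = [sample_episode_params(rng) for _ in range(1000)]
```

Uniform sampling is the simplest choice here; other distributions may suit parameters whose real-world values cluster around a nominal point.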

Real-World Applications

Warehouse Logistics

Warehouses have fluctuating lighting (skylights versus LEDs) and varying floor debris. Domain randomization allows AGVs to identify pallets reliably regardless of box color or shadow intensity.

Outdoor Agriculture

Agricultural robots face extreme variability: mud, rain, changing sun angles, and crop growth stages. Randomizing terrain friction and visual foliage density prepares robots for the field.

Last-Mile Delivery

Sidewalk delivery robots encounter different pavement textures, snow, and dynamic obstacles. Simulation training with high randomization ensures stable path planning on unpredictable surfaces.

Industrial Manufacturing

In dynamic factories where equipment moves and layouts change, robots trained with geometry randomization can navigate safely without needing map re-generation for every layout shift.

Frequently Asked Questions

What is the primary difference between Domain Randomization and Photorealistic Simulation?

Photorealistic simulation attempts to match reality as closely as possible, which is computationally expensive and never exact. Domain Randomization embraces imperfection by varying parameters widely, teaching the model to ignore discrepancies. It is often more effective for robust policy learning than chasing perfect visual fidelity.

Does Domain Randomization increase training time?

Yes, typically. Because the environment is constantly changing, the neural network requires more training samples (episodes) to converge on a stable policy compared to a static environment. However, the resulting model is significantly more robust and requires less fine-tuning in the real world.

What is Automatic Domain Randomization (ADR)?

ADR is an advanced technique where the difficulty and range of the randomization automatically increase as the robot learns. Instead of randomizing everything from the start, the system creates a "curriculum" of complexity, preventing the agent from being overwhelmed early in training.
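The curriculum idea can be illustrated with a deliberately simplified rule: widen a parameter's range when the agent is succeeding and narrow it when the agent is struggling. This is a toy sketch, not the published ADR algorithm; the class name, thresholds, and step size are assumptions.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveRange:
    nominal: float               # value the range is centred on
    half_width: float = 0.0      # start with no randomization at all
    step: float = 0.05           # how much to widen or narrow per update
    max_half_width: float = 0.5  # hard cap so the task stays learnable

    def bounds(self):
        return (self.nominal - self.half_width, self.nominal + self.half_width)

    def update(self, success_rate, expand_above=0.8, shrink_below=0.4):
        """Adjust the range from the agent's recent success rate (0 to 1)."""
        if success_rate >= expand_above:
            # Agent copes well: make the environment more varied.
            self.half_width = min(self.half_width + self.step, self.max_half_width)
        elif success_rate <= shrink_below:
            # Agent is overwhelmed: back off to an easier distribution.
            self.half_width = max(self.half_width - self.step, 0.0)
        return self.bounds()


friction = AdaptiveRange(nominal=0.6)
history = []
for rate in [0.9, 0.85, 0.5, 0.3, 0.95]:
    friction.update(rate)
    history.append(friction.half_width)
```

In this run the range widens twice, holds while the success rate sits between the two thresholds, narrows once, and then widens again.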

Can this technique be used for non-visual sensors like LIDAR?

Absolutely. For LIDAR, we randomize the dropout rate, distance noise, and reflectivity of surfaces. For IMUs (accelerometers/gyroscopes), we randomize bias, white noise, and drift parameters to ensure the localization algorithms don't over-trust the sensors.
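A rough sketch of two of these models follows: random beam dropout for LIDAR, and a gyroscope reading corrupted by a constant bias, white noise, and a slowly drifting bias modelled as a random walk. The function names and the sampled parameter ranges are hypothetical and not taken from any sensor datasheet.

```python
import math
import random


def apply_lidar_dropout(ranges, dropout_rate, rng, no_return=math.inf):
    """Replace a random subset of beams with a 'no return' reading."""
    return [no_return if rng.random() < dropout_rate else r for r in ranges]


def simulate_gyro(true_rates, dt, bias, noise_std, drift_std, rng):
    """Corrupt true angular rates (rad/s) with bias, white noise, and drift."""
    readings = []
    current_bias = bias
    for rate in true_rates:
        # Drift: the bias performs a random walk scaled by sqrt(dt).
        current_bias += rng.gauss(0.0, drift_std) * math.sqrt(dt)
        # White noise: independent per-sample perturbation.
        readings.append(rate + current_bias + rng.gauss(0.0, noise_std))
    return readings


def sample_imu_params(rng):
    """Draw per-episode IMU error parameters (illustrative ranges only)."""
    return {
        "bias": rng.uniform(-0.01, 0.01),
        "noise_std": rng.uniform(0.001, 0.01),
        "drift_std": rng.uniform(0.0, 0.001),
    }


rng = random.Random(7)
scan = apply_lidar_dropout([1.0, 2.0, 3.0, 4.0], dropout_rate=0.25, rng=rng)
gyro = simulate_gyro([0.1] * 50, dt=0.01, rng=rng, **sample_imu_params(rng))
```

Sampling the IMU error parameters once per episode, rather than fixing them, is what turns a plain noise model into randomization.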

How do we define the range of randomization parameters?

This requires domain expertise. The ranges must bound the real-world parameters with some margin (e.g., if real friction is between 0.5 and 0.8, simulate 0.2 to 1.0). If the range is too narrow, the policy won't transfer; if it's too wide, the task may become too hard to learn, or the policy may become overly conservative.
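One possible way to turn a measured real-world interval into a simulation range is to pad it by a fraction of its own width and then clip to physically sensible limits. The helper below is a sketch under those assumptions; the padding fraction and the clip limits of 0.0 and 1.0 are chosen here only so that the friction example above is reproduced.

```python
def pad_range(real_low, real_high, pad_fraction=1.0, hard_min=0.0, hard_max=1.0):
    """Widen a measured interval by pad_fraction of its width on each side.

    hard_min and hard_max are assumed physical limits for the parameter.
    """
    if real_low > real_high:
        raise ValueError("real_low must not exceed real_high")
    margin = (real_high - real_low) * pad_fraction
    return (max(real_low - margin, hard_min), min(real_high + margin, hard_max))


# Measured friction of 0.5 to 0.8 becomes roughly 0.2 to 1.0 in simulation.
sim_low, sim_high = pad_range(0.5, 0.8)
```

A smaller `pad_fraction` trades robustness for an easier learning problem, which is the narrow-versus-wide tension described above.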

What happens if the real world falls outside the randomized distribution?

This is known as "out-of-distribution" error. The robot will likely fail or behave unpredictably. To mitigate this, engineers usually pad the randomization ranges significantly wider than expected real-world conditions to create a safety buffer.
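Before deployment, a simple sanity check can compare measured real-world values against the ranges used in training. The sketch below assumes the measurements and ranges are available as plain dictionaries keyed by parameter name; the names and numbers are hypothetical.

```python
def find_out_of_range(measured, ranges):
    """Return the parameters whose measured value lies outside its range."""
    violations = {}
    for name, value in measured.items():
        low, high = ranges[name]
        if not (low <= value <= high):
            violations[name] = (value, (low, high))
    return violations


# Illustrative values only.
training_ranges = {"wheel_friction": (0.2, 1.0), "payload_kg": (0.0, 50.0)}
site_measurements = {"wheel_friction": 0.15, "payload_kg": 32.0}

violations = find_out_of_range(site_measurements, training_ranges)
```

Here `wheel_friction` is flagged because 0.15 is below the trained minimum of 0.2, which would suggest widening the range and retraining before relying on the policy at that site.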

Is fine-tuning on real hardware still necessary?

Often, yes, but far less than training from scratch. A well-randomized policy can sometimes be deployed with no real-world training at all (zero-shot transfer). More commonly, Domain Randomization gets the model most of the way there, and a brief period of "real-world adaptation" or fine-tuning polishes the movements and accuracy.

Does this work for Reinforcement Learning (RL) only?

While most popular in Deep Reinforcement Learning (RL), Domain Randomization is also used effectively for training Computer Vision models (like object detectors) using synthetic data, reducing the need for manually labeled real-world datasets.

What physics engines are best for Domain Randomization?

High-performance simulators that support parallelization are preferred. NVIDIA Isaac Sim, MuJoCo, PyBullet, and Gazebo are commonly used options. Isaac Sim is particularly noted for its photorealistic capabilities combined with fast physics stepping.

How does this affect the cost of robot development?

It reduces hardware wear and tear significantly because crashes happen in simulation, not reality. It also reduces the need for large, expensive annotated real-world datasets. The primary cost shifts to compute power for running simulations.

Can randomizing visual textures confuse the robot?

If the robot relies solely on color detection (e.g., "follow the yellow line"), yes. However, randomization forces the robot to rely on more robust features like edges, depth, and geometry, which actually makes it less confused by lighting changes in the real world.

What is the "Reality Gap"?

The Reality Gap is the discrepancy between the simulator's physics and rendering and the behavior of the real world. It is a main reason models trained in simulation fail in real life. Domain Randomization is one of the primary tools for making policies robust to this gap.