3D LiDAR

Unlock true spatial awareness for your mobile robots. 3D LiDAR provides high-fidelity, volumetric mapping and obstacle detection, allowing AGVs to navigate complex, dynamic environments with unmatched precision.

Core Concepts

Point Clouds

The raw output of a 3D LiDAR is a dense collection of points in 3D space, each with x, y, and z coordinates and usually an intensity value. This "cloud" captures the shape and distance of surrounding objects.

Time of Flight (ToF)

The sensor calculates distance by measuring the time a laser pulse takes to travel to an object and bounce back to the receiver. The distance is half that round-trip time multiplied by the speed of light.
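The time-of-flight relationship can be sketched in a few lines. This is a minimal illustration, not vendor firmware: the function name `tof_distance_m` is hypothetical, and the speed of light in vacuum is used as an approximation for air.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second, in vacuum


def tof_distance_m(round_trip_s: float) -> float:
    """Return the one-way distance for a measured round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# A return that arrives 200 ns after emission is roughly 30 m away.
print(f"{tof_distance_m(200e-9):.2f} m")  # prints "29.98 m"
```

Because light covers about 30 cm per nanosecond, centimetre-level ranging needs timing resolution well below a nanosecond.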

6-DoF SLAM

Unlike 2D sensors, 3D LiDAR enables Simultaneous Localization and Mapping (SLAM) with six degrees of freedom: position in x, y, and z plus roll, pitch, and yaw. This is essential for handling ramps and uneven terrain.
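To illustrate why the extra degrees of freedom matter, here is a small sketch that maps a sensor-frame point into the map frame using a full 6-DoF pose. The function name `transform_point` and the pose layout are assumptions for this example; the yaw-pitch-roll (Z-Y-X) rotation order is one common convention, not the only one.

```python
import math


def transform_point(point, pose):
    """Map a sensor-frame point into the map frame.

    pose is (x, y, z, roll, pitch, yaw), angles in radians, with the
    rotation built as Rz(yaw) * Ry(pitch) * Rx(roll).
    """
    px, py, pz = point
    tx, ty, tz, roll, pitch, yaw = pose
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    x = cy * cp * px + (cy * sp * sr - sy * cr) * py + (cy * sp * cr + sy * sr) * pz + tx
    y = sy * cp * px + (sy * sp * sr + cy * cr) * py + (sy * sp * cr - cy * sr) * pz + ty
    z = -sp * px + cp * sr * py + cp * cr * pz + tz
    return (x, y, z)


# Robot on a ramp, nose up by 10 degrees (negative pitch in this convention).
# A point 5 m straight ahead of the sensor ends up about 0.87 m above the
# robot's origin, which a planar (x, y, yaw) pose could not represent.
ramp_pose = (0.0, 0.0, 0.0, 0.0, math.radians(-10.0), 0.0)
print(transform_point((5.0, 0.0, 0.0), ramp_pose))
```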

Solid State vs. Mechanical

Modern AGVs are moving towards solid-state LiDARs, which have no macroscopic moving parts and therefore offer higher durability and resistance to vibration in industrial settings.

Vertical Resolution

The number of vertical laser channels (e.g., 16, 32, 64, or 128 channels) determines the density of the map and the ability to detect small obstacles like cables.
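A rough way to see the effect of channel count is to estimate the vertical gap between adjacent beams at a given range. The sketch below assumes the channels are evenly spaced across the vertical field of view, which is a simplification (many real sensors concentrate beams near the horizon); the function name and the 30-degree field of view are illustrative assumptions.

```python
import math


def vertical_gap_m(channels: int, vertical_fov_deg: float, range_m: float) -> float:
    """Approximate gap between adjacent beams on a surface at range_m.

    Assumes evenly spaced channels across the vertical field of view.
    """
    if channels < 2:
        raise ValueError("need at least two channels")
    spacing_deg = vertical_fov_deg / (channels - 1)
    return range_m * math.tan(math.radians(spacing_deg))


# Compare channel counts for an assumed 30-degree vertical FoV at 10 m.
for channels in (16, 32, 64, 128):
    gap_cm = vertical_gap_m(channels, 30.0, 10.0) * 100.0
    print(f"{channels:>3} channels: about {gap_cm:.1f} cm between beams")
```

With 16 channels the gap at 10 m is about 35 cm under these assumptions, so a thin cable can sit between two beams until the robot gets much closer.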

Semantic Segmentation

Advanced perception algorithms can classify data points within the cloud, distinguishing between walls, pedestrians, other vehicles, and floor debris.

How It Works

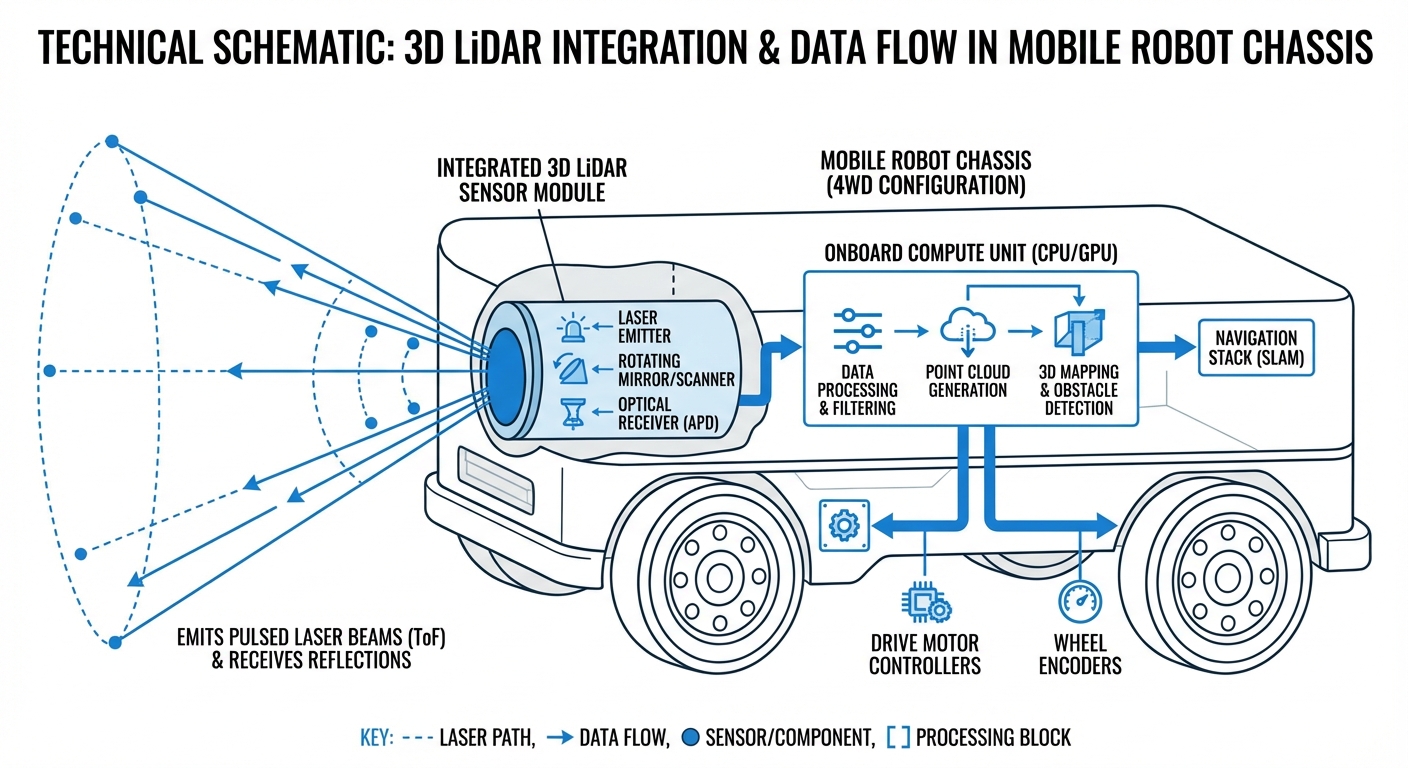

3D LiDAR operates by emitting rapid pulses of laser light, often hundreds of thousands per second. When these pulses hit an object, they reflect back to the sensor. By measuring the return-trip time (or, in continuous-wave designs, the phase shift of the returned signal), the sensor determines the distance to that point.

Unlike 2D LiDAR, which scans a single horizontal plane, 3D LiDAR scans across both horizontal and vertical axes. Mechanical versions spin the laser array, while solid-state versions either steer the beam with optical phased arrays or illuminate the whole scene at once with flash technology, in both cases without macroscopic moving parts.
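Each measurement is a range along a known horizontal and vertical beam angle, so it can be converted into an x, y, z point. The sketch below assumes a frame with x forward, y left, and z up, with azimuth measured counter-clockwise from x; real drivers may use different conventions, and `polar_to_xyz` is a hypothetical name.

```python
import math


def polar_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one range/angle measurement into Cartesian coordinates."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the x-y plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# A return 10 m away, straight ahead, from a beam tilted 30 degrees upward
# lands about 8.66 m forward and 5 m above the sensor.
print(polar_to_xyz(10.0, 0.0, 30.0))
```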

The result is a comprehensive 3D map updated in real time. This allows an AGV to "see" overhangs, identify objects hanging from ceilings, navigate ramps, and differentiate between a flat drawing on the floor and a physical obstacle.

Real-World Applications

High-Bay Warehousing

In environments with tall shelving, a single 2D scan plane often sees too few stable features to localize reliably. 3D LiDAR allows AGVs to localize using ceiling structures and detect overhanging pallets that intrude into the aisle.

Outdoor Navigation

For autonomous robots moving between buildings, 3D LiDAR is critical. It differentiates between grass, curbs, and paved paths, and functions reliably in varying lighting conditions where cameras might fail.

Forklift AGVs

Automated forklifts need to engage pallets at varying heights. 3D LiDAR provides the volumetric data required to identify pallet pockets precisely and ensure safe lifting operations.

Crowded Manufacturing Floors

In facilities with heavy foot traffic, 3D perception allows robots to predict pedestrian movement vectors and navigate around dynamic obstacles without triggering unnecessary emergency stops.

Frequently Asked Questions

What is the primary advantage of 3D LiDAR over 2D LiDAR for AGVs?

The primary advantage is volumetric perception. While 2D LiDAR only sees a "slice" of the world at a specific height, 3D LiDAR sees the entire environment, including overhanging obstacles (like tables) and negative obstacles (like loading dock drops or stairs), preventing collisions that a single-plane sensor would miss.
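The difference can be shown with a toy height check. This sketch assumes points are expressed in a robot base frame where z = 0 is the floor. The thresholds, the 20 cm scan height, and both function names are illustrative assumptions. In practice a drop often shows up as missing floor returns rather than points below the floor, so treat this as a simplification.

```python
def classify_height(z_m, robot_height_m=1.5, floor_tolerance_m=0.05):
    """Label a point by its height z above the floor."""
    if z_m < -floor_tolerance_m:
        return "negative"   # below floor level, e.g. a dock edge or stairs
    if z_m <= floor_tolerance_m:
        return "floor"
    if z_m <= robot_height_m:
        return "obstacle"   # inside the robot's height envelope
    return "overhead"       # high enough for the robot to pass under


def visible_to_2d_scanner(z_m, scan_height_m=0.20, slice_half_width_m=0.02):
    """True if a point lies in the thin plane swept by a 2D scanner."""
    return abs(z_m - scan_height_m) <= slice_half_width_m


samples = {"table top": 0.75, "dock drop": -1.10, "pallet at scan height": 0.20}
for name, z in samples.items():
    print(f"{name}: {classify_height(z)}, seen by 2D scanner: {visible_to_2d_scanner(z)}")
```

Under these assumptions the table top and the dock drop are both hazards for the robot, yet neither falls inside the 2D scan plane.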

Does 3D LiDAR work in total darkness or bright sunlight?

Yes. Because LiDAR is an active sensor (it emits its own light), it works in total darkness. High-quality 3D LiDARs are also designed to filter out ambient sunlight, making them reliable for outdoor use where camera-based systems often struggle with glare or shadows.

What is the difference between Mechanical and Solid-State LiDAR?

Mechanical LiDARs physically spin a laser array to achieve a 360-degree view, which offers great coverage but contains moving parts prone to wear. Solid-state LiDARs steer the beam electronically; they are more durable and compact but often have a narrower Field of View (FoV), sometimes requiring multiple sensors for full coverage.

Does using 3D LiDAR require a more powerful onboard computer?

Generally, yes. Processing 3D point clouds (which can contain hundreds of thousands of points per second) is computationally intensive compared to 2D scan lines. You will likely need an industrial PC with a GPU or a dedicated AI accelerator (such as an NVIDIA Jetson module) to handle 3D SLAM and object detection in real time.
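One common way to reduce the load before SLAM or detection is voxel-grid downsampling, which replaces all points inside each small cube with their centroid. The pure-Python sketch below only illustrates the idea; production stacks typically rely on optimized implementations such as those in PCL, and the function name here is hypothetical.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_m):
    """Return one centroid per occupied voxel of edge length voxel_m."""
    if voxel_m <= 0:
        raise ValueError("voxel size must be positive")
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index along each axis.
        key = (
            math.floor(x / voxel_m),
            math.floor(y / voxel_m),
            math.floor(z / voxel_m),
        )
        buckets[key].append((x, y, z))
    # Average the points in each voxel, axis by axis.
    return [
        tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for pts in buckets.values()
    ]


cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (1.00, 1.00, 1.00)]
print(voxel_downsample(cloud, voxel_m=0.1))  # two points remain
```

A larger voxel size cuts the point count further but also erases small obstacles, so the value is a trade-off between compute budget and detection needs.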

Can 3D LiDAR detect glass or transparent surfaces?

This remains a challenge for LiDAR technology. Laser pulses often pass through clear glass or reflect off it unpredictably (specular reflection). Most robotics stacks combine LiDAR data with ultrasonic sensors or cameras, or use software-defined "keep-out zones," to handle glass walls effectively.

How does rain or fog affect 3D LiDAR performance?

Heavy rain and fog can introduce "noise" into the point cloud as lasers reflect off droplets. However, modern 3D LiDARs often feature "multi-echo" technology, which can distinguish between a raindrop (first echo) and the wall behind it (last echo), allowing the software to filter out weather interference.
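As a simplified picture of last-echo filtering, suppose each beam reports a list of ranges, one per echo. Keeping only the farthest return per beam discards near hits from droplets. The data layout and function name are assumptions for illustration; real drivers expose echoes in vendor-specific formats and usually combine range with intensity when filtering.

```python
def last_echo_ranges(echoes_per_beam):
    """Keep the farthest return of each beam, or None if the beam saw nothing."""
    return [max(echoes) if echoes else None for echoes in echoes_per_beam]


# Beam 0 hit a raindrop at 2.1 m and then a wall at 14.8 m.
# Beam 1 saw only the wall. Beam 2 received no return at all.
beams = [[2.1, 14.8], [14.9], []]
print(last_echo_ranges(beams))  # prints [14.8, 14.9, None]
```

Note that always taking the last echo would also ignore a genuine thin obstacle in front of a wall, which is one reason practical filters are more selective than this sketch.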

Is 3D LiDAR safe for humans working nearby?

Yes. Standard industrial LiDARs are Class 1 laser products, meaning they are eye-safe under normal conditions of use. Their output power and pulse duration are limited so that exposure stays below the level that could damage the eye, even if the beam is viewed directly.

What is the typical range needed for an indoor AGV?

For indoor warehousing, a range of 30 to 50 meters is usually sufficient. This allows the robot to see the end of aisles and localize against distant walls. Outdoor mobile robots typically require ranges of 100 meters or more to safely brake at higher speeds.
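A quick stopping-distance estimate helps put these range figures in context. The sketch uses the standard kinematic formula (reaction distance plus braking distance); the speeds, reaction time, and deceleration values are illustrative assumptions, not figures for any particular vehicle.

```python
def stopping_distance_m(speed_m_s: float, reaction_s: float, decel_m_s2: float) -> float:
    """Distance covered while reacting plus distance covered while braking."""
    if decel_m_s2 <= 0:
        raise ValueError("deceleration must be positive")
    return speed_m_s * reaction_s + speed_m_s ** 2 / (2.0 * decel_m_s2)


# Assumed indoor AGV: 2 m/s, 0.5 s system latency, 1 m/s^2 braking.
print(stopping_distance_m(2.0, 0.5, 1.0))  # prints 3.0

# Assumed outdoor vehicle: 8 m/s, 0.5 s latency, 2 m/s^2 braking.
print(stopping_distance_m(8.0, 0.5, 2.0))  # prints 20.0
```

The stopping distance is only a lower bound on the range you need. Rated maximum range usually applies to large, reflective targets, so small or dark obstacles are detected at shorter distances, and localization benefits from seeing well beyond the braking envelope.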

Can 3D LiDAR replace safety scanners (PL-d / SIL 2)?

Traditionally, navigation LiDARs were not safety-rated. However, "Safety 3D LiDARs" certified for functional safety (PL-d) are entering the market. Without a certified sensor, you must use dedicated 2D safety scanners for the emergency stop circuit and use the 3D LiDAR only for navigation and non-critical obstacle avoidance.

How do I integrate 3D LiDAR with ROS (Robot Operating System)?

Most major LiDAR manufacturers (Velodyne, Ouster, Hesai, RoboSense) provide open-source ROS and ROS 2 drivers. These drivers publish data in the standard `sensor_msgs/PointCloud2` format, making it compatible with standard packages like the Point Cloud Library (PCL), OctoMap, and Navigation2.
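A `PointCloud2` message carries its points as a packed byte buffer, described by the message's `fields`, `point_step`, and `is_bigendian` entries. The sketch below decodes such a buffer without ROS installed, assuming one particular layout: four little-endian float32 values (x, y, z, intensity) per point. That layout is an assumption for illustration; a real node should read the layout from the message, or use a helper such as `read_points` from `sensor_msgs_py.point_cloud2` in ROS 2.

```python
import struct

# Assumed layout: x, y, z, intensity as little-endian float32 (16 bytes).
POINT_FORMAT = "<ffff"
POINT_STEP = struct.calcsize(POINT_FORMAT)


def decode_points(data: bytes, point_step: int = POINT_STEP):
    """Unpack (x, y, z, intensity) tuples from a packed point buffer."""
    points = []
    # Step through the buffer one point record at a time.
    for offset in range(0, len(data) - point_step + 1, point_step):
        points.append(struct.unpack_from(POINT_FORMAT, data, offset))
    return points


# Build a two-point buffer by hand to stand in for msg.data.
buffer = struct.pack(POINT_FORMAT, 1.0, 2.0, 3.0, 0.5)
buffer += struct.pack(POINT_FORMAT, -1.0, 0.0, 0.25, 1.0)
print(decode_points(buffer))
```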

What are the maintenance requirements?

Physical maintenance is minimal but critical: the lens or window must be kept clean. Dust, oil, or mud on the lens can cause measurement errors. Many outdoor units include automated cleaning systems (wipers or air jets). Mechanical units eventually require motor replacement, whereas solid-state units have no moving parts to service.
