Educational Research Platform

Accelerate academic discovery and technical validation with a modular robotic ecosystem designed for data integrity. This platform bridges the gap between theoretical algorithms and physical world interactions, enabling reproducible experiments for students and researchers alike.

Why Automate Educational Research?

Cost-Effective Scalability

Reduce the overhead of physical experiments. Deploy one platform to run hundreds of simulation-to-reality tests without needing specialized setups for every student.

Reproducible Data

Eliminate human error in data collection. Robots follow precise trajectories and sensor protocols, ensuring every dataset is consistent and comparable.

Modular Hardware

Swap sensors and manipulators in minutes. Support diverse research fields—from computer vision to environmental monitoring—on a single chassis.

ROS 2 Native

Built on industry-standard open-source middleware. Students learn the actual tools used in commercial robotics, from navigation stacks to MoveIt.

Remote Labs

Enable remote access to physical hardware. Researchers can deploy code to the robot from anywhere, facilitating international collaboration.

Publication Ready

Generate high-fidelity logs and visualizations automatically. The platform outputs data in standard formats (CSV, ROS 2 bag files) ready for analysis and inclusion in papers.
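As a rough illustration of the CSV side of that output, here is a minimal sketch that serializes timestamped samples using only Python's standard library. The function name `rows_to_csv` and the column names `stamp`, `x`, and `y` are hypothetical and chosen for illustration; the platform's actual log schema is not specified here.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize a list of dict records to CSV text with a header row.

    `fieldnames` fixes the column order so that repeated runs produce
    files with an identical layout, which keeps datasets comparable.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical pose samples: time in seconds, position in metres.
samples = [
    {"stamp": 0.0, "x": 1.0, "y": 2.0},
    {"stamp": 0.1, "x": 1.5, "y": 2.0},
]
print(rows_to_csv(samples, ["stamp", "x", "y"]))
```

Fixing the column order up front is a small design choice that makes logs from different sessions straightforward to concatenate and compare.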

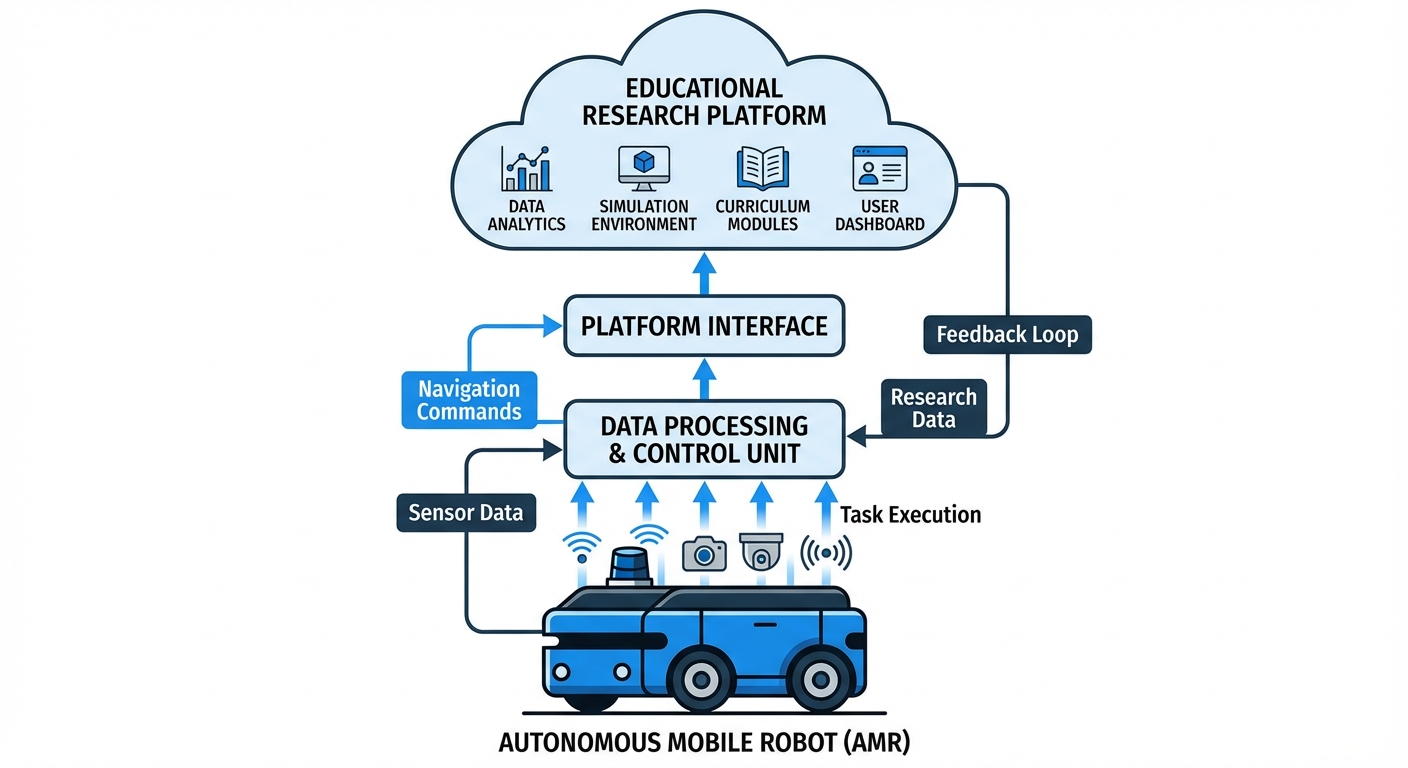

Architecture & Workflow

The Educational Research Platform operates on a tiered architecture designed to separate low-level motor control from high-level cognitive tasks. This allows researchers to focus on algorithm development without worrying about hardware drivers.

1. Sensing & Perception: The robot aggregates data from LiDAR, depth cameras, and IMUs. This raw stream is timestamped and synchronized on the edge device.

2. On-board Processing: Real-time SLAM (Simultaneous Localization and Mapping) and path planning occur locally on the robot's GPU, ensuring safety and responsiveness even without internet connectivity.

3. Data Telemetry: While operating, the robot streams selectable topics to a central research server or dashboard, allowing for real-time visualization of the robot's "thought process" and decision trees.
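Steps 1 and 3 above can be sketched in plain Python. This is an illustrative approximation under stated assumptions, not the platform's implementation: `synchronize` pairs samples by nearest timestamp within a tolerance, and `select_topics` filters topic names by glob pattern. Both function names, the 20 ms default tolerance, and the topic names are hypothetical. On a real ROS 2 system this kind of pairing is typically handled by existing middleware tooling rather than hand-written code.

```python
from bisect import bisect_left
from fnmatch import fnmatchcase


def nearest_index(stamps, t, tolerance):
    """Index of the stamp closest to t, or None if it is outside tolerance.

    `stamps` must be sorted in ascending order.
    """
    if not stamps:
        return None
    i = bisect_left(stamps, t)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(stamps)]
    best = min(candidates, key=lambda j: abs(stamps[j] - t))
    return best if abs(stamps[best] - t) <= tolerance else None


def synchronize(reference, others, tolerance=0.02):
    """Pair each reference sample with the nearest sample of every other stream.

    reference: list of (stamp, value) tuples, sorted by stamp.
    others: dict mapping stream name -> list of (stamp, value), sorted by stamp.
    Reference samples without a match in every stream are dropped.
    """
    stamps = {name: [s for s, _ in samples] for name, samples in others.items()}
    synced = []
    for stamp, value in reference:
        row = {"stamp": stamp, "reference": value}
        for name, samples in others.items():
            idx = nearest_index(stamps[name], stamp, tolerance)
            if idx is None:
                break  # incomplete set: skip this reference sample
            row[name] = samples[idx][1]
        else:
            synced.append(row)
    return synced


def select_topics(available, patterns):
    """Keep only the topics that match at least one glob pattern."""
    return [t for t in available if any(fnmatchcase(t, p) for p in patterns)]


# Hypothetical data: LiDAR scans as the reference stream, camera frames alongside.
lidar = [(0.00, "scan0"), (0.10, "scan1"), (0.20, "scan2")]
camera = [(0.005, "img0"), (0.105, "img1"), (0.260, "img2")]
print(synchronize(lidar, {"camera": camera}))
print(select_topics(["/scan", "/camera/depth/image_raw", "/imu/data"],
                    ["/scan", "/camera/*"]))
```

Dropping incomplete sets rather than interpolating keeps every logged row backed by real measurements, at the cost of losing some samples when one sensor lags.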

Where It's Used

Computer Science Departments

Used in graduate-level robotics courses to validate ML models and teach path-planning algorithms in dynamic, real-world corridors rather than only in simulation.

Agricultural Tech Research

Outfitted with multispectral cameras, the platform navigates crop rows, collects phenotype data, and tests autonomous harvesting logic on a small scale.

Psychology & HRI

Human-Robot Interaction (HRI) studies use the platform to measure human responses to robot gestures, proximity, and navigation behaviors in social spaces.

Civil Engineering

Autonomous rovers map indoor environments to generate Building Information Models (BIM) or detect structural anomalies via thermal imaging.

What You Need

- Robot Base: Differential drive or Mecanum chassis with encoders

- Compute Unit: NVIDIA Jetson Orin / Raspberry Pi 5 (min 8 GB RAM)

- Sensors: 2D/3D LiDAR, RGB-D camera, 9-axis IMU

- Software Stack: Ubuntu 22.04 LTS, ROS 2 Humble/Iron

- Connectivity: Dual-band Wi-Fi 6 for telemetry, optional 5G module