Sim-to-Real Transfer

Close the 'Reality Gap' by training AGVs in high-fidelity sims before real-world rollout. It boosts safety, spares hardware, and speeds up learning tough navigation tasks.

Core Concepts

The Reality Gap

Small mismatches between sim physics and reality (friction, sensor noise, actuation delays) that make sim-trained robots fail on real hardware.

Domain Randomization

Shake up sim settings on purpose (colors, lights, friction) so the real world feels like just another sim variant to the robot.

System Identification

Tune the sim's physics model to mirror your real AGV: mass distribution, wheel slip, motor curves, all of it.
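As a rough illustration of system identification, here is a minimal sketch that fits one motor parameter from logged data. It assumes a simple linear model (steady-state speed = gain x command), which a real motor curve may not follow; `fit_motor_gain` and the sample numbers are hypothetical, not measurements from any actual AGV.

```python
def fit_motor_gain(commands, speeds):
    """Least-squares fit of `speed = gain * command` (line through the origin)."""
    numerator = sum(u * v for u, v in zip(commands, speeds))
    denominator = sum(u * u for u in commands)
    if denominator == 0:
        raise ValueError("need at least one non-zero command")
    return numerator / denominator


# Hypothetical log: normalized motor commands and measured steady-state speeds (m/s).
logged_commands = [0.2, 0.4, 0.6, 0.8]
logged_speeds = [0.31, 0.59, 0.92, 1.18]

gain = fit_motor_gain(logged_commands, logged_speeds)
print(f"estimated motor gain: {gain:.3f} m/s per unit command")
```

The fitted gain would then replace the default value in the sim's motor model, and the same idea extends to friction or mass parameters with a richer model.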

Visual Adaptation

Use GANs (Generative Adversarial Networks) to translate synthetic sim images into realistic-looking ones for vision-based navigation.

Sensor Noise Injection

Sprinkle random noise into sim LIDAR and odometry so the robot doesn't bank on perfect data that real life never delivers.

Zero-Shot Transfer

A policy trained only in sim that works on the real robot on the first try, with no fine-tuning on real data.

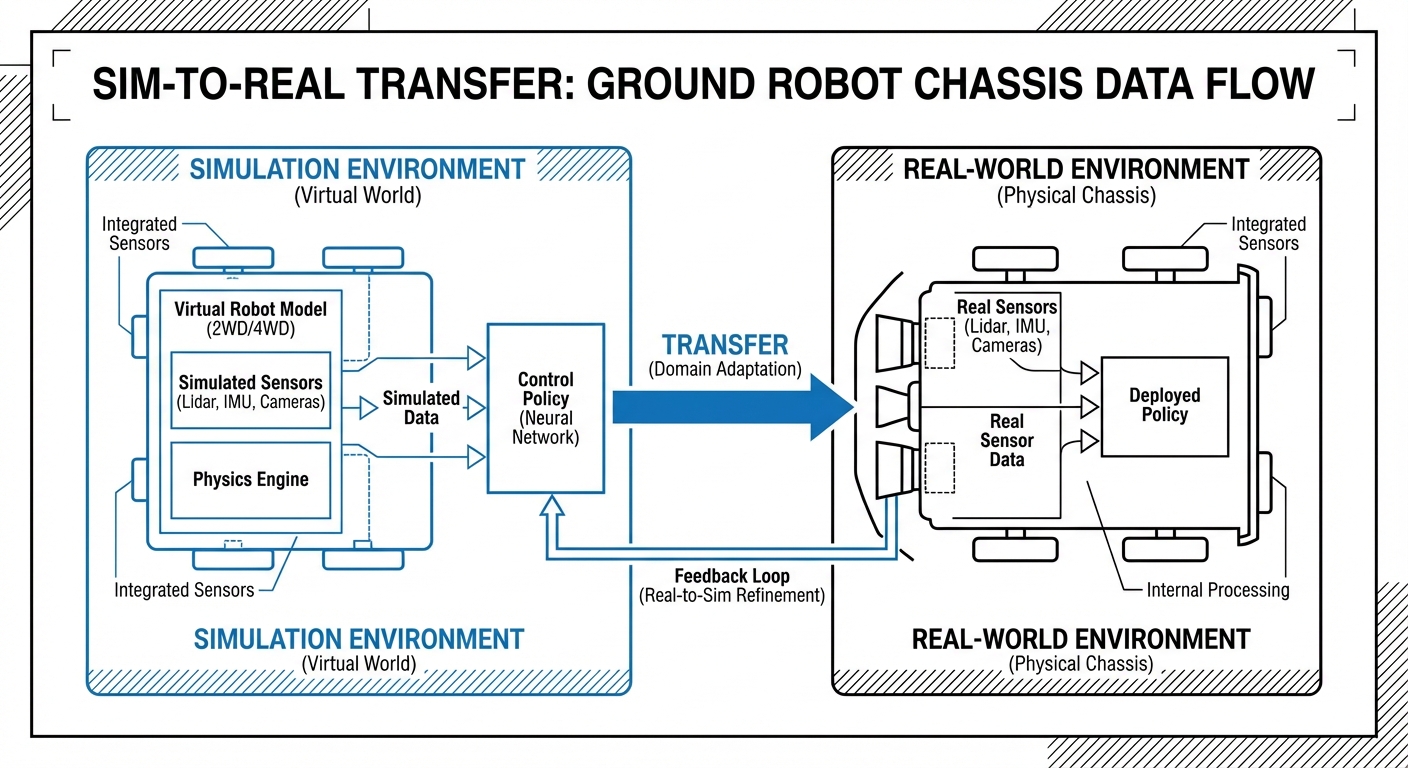

How It Works

The Sim-to-Real flow kicks off with a 'Digital Twin' – a spot-on virtual clone of your AGV and its world, like a warehouse floor.

In there, RL (reinforcement learning) agents pilot the robot through millions of simulated runs. Randomize conditions aggressively (friction, obstacle weights, lighting) so the policy learns robust, adaptable behavior instead of memorizing one map.

Policy ready? Export the trained neural net and load it onto the real AGV's computer. If the sim threw harsher and more varied conditions at it than reality does, the real world falls inside what the policy has already handled.

Real-World Applications

High-Speed Logistics

Train AGVs to hug high-speed warehouse corners without flipping. Sim lets you push deadly limits safely.

Unstructured Environments

Gear up robots for chaotic spots like construction sites or hospitals with shifting crowds. Sim-to-Real lets you rehearse those rare edge cases before they happen for real.

Outdoor Terrain Navigation

Get delivery robots ready for varied pavement textures, slippery wet surfaces, and bumpy terrain by simulating millions of wheel-ground friction interactions.

Multi-Agent Swarms

Manage fleets of hundreds of robots at once. Simulation is the only practical way to train traffic management protocols and avoid deadlocks before rolling out a real fleet.

Frequently Asked Questions

Why not just train robots in the real world?

Training straight on hardware is slow, super expensive, and downright risky. Reinforcement learning needs millions of trial-and-error runs; in the physical world, that could take years and trash your robots with collisions while they're still figuring things out.
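A back-of-the-envelope sketch of that time cost, under assumed numbers: one million episodes of 60 seconds each, and 1,000 parallel sim instances running at real-time speed. All of these figures and the `wall_clock_days` helper are illustrative assumptions, not benchmarks.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def wall_clock_days(episodes, episode_seconds, parallel_envs=1, speedup=1.0):
    """Wall-clock days needed to collect `episodes` runs of `episode_seconds` each."""
    total_seconds = episodes * episode_seconds / (parallel_envs * speedup)
    return total_seconds / SECONDS_PER_DAY


# Assumed workload: 1,000,000 episodes, 60 s each.
real = wall_clock_days(1_000_000, 60)                     # one physical robot
sim = wall_clock_days(1_000_000, 60, parallel_envs=1000)  # 1,000 simulated robots

print(f"one physical robot: {real:.0f} days")
print(f"1000 parallel sims: {sim:.2f} days")
```

With these assumptions a single robot needs roughly 694 days of nonstop driving, while the parallel sim finishes in under a day, and that is before counting resets, repairs, and battery charging on the physical side.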

What's the "Reality Gap," and why is it such a headache?

The Reality Gap is the set of mismatches between simulation and the real world. If your sim doesn't capture things like motor backlash, friction, or sensor noise closely enough, the robot might learn tricks that exploit sim glitches and then flop on actual hardware.

Does Sim-to-Real transfer require specific hardware on the AGV?

Generally, no special actuators are needed, but your onboard computer (like an NVIDIA Jetson or similar IPC) has to be beefy enough for real-time neural network inference. And the real-world sensors (LIDAR, cameras) need to match what you've modeled in the sim as closely as possible.

What simulators are commonly used for AGVs?

Top high-fidelity simulators? Think NVIDIA Isaac Sim, Gazebo, MuJoCo, and PyBullet. Isaac Sim is blowing up in industrial robotics thanks to its photorealistic rendering and GPU-powered physics that slash training times.

How does Domain Randomization help?

By varying parameters like floor friction, object masses, and lighting in the sim, the AI quits depending on the quirks of any one environment. Instead, it picks up a 'robust' policy that treats the real world as just one more sim variation, which improves the odds of a successful transfer.
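Here is a minimal sketch of per-episode parameter sampling, assuming independent uniform ranges. `SimParams`, `RANGES`, and `sample_sim_params` are hypothetical names made up for illustration, and the ranges are placeholders; a real setup would hand the sampled values to the simulator's own reset call.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    floor_friction: float   # wheel-to-floor friction coefficient
    payload_mass_kg: float  # mass of the load carried by the AGV
    light_intensity: float  # relative scene brightness (1.0 = nominal)


# Hypothetical ranges; in practice they should bracket values measured on site.
RANGES = {
    "floor_friction": (0.4, 1.0),
    "payload_mass_kg": (0.0, 500.0),
    "light_intensity": (0.3, 1.5),
}


def sample_sim_params(rng: random.Random) -> SimParams:
    """Draw one independent uniform sample for each randomized parameter."""
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


rng = random.Random(0)  # fixed seed so the training run is reproducible
for episode in range(3):
    params = sample_sim_params(rng)
    # A real training loop would reset the simulator with `params` here.
    print(episode, params)
```

Sampling fresh values at every episode reset, rather than once per training run, is what keeps the policy from locking onto a single configuration.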

Is Sim-to-Real used for SLAM (Simultaneous Localization and Mapping)?

Yep, it's getting popular for testing and tuning SLAM algorithms. Devs can check loop-closure and relocalization in tough spots like long, featureless corridors or bustling warehouses, all without building those setups for real.

What is "Zero-Shot" transfer?

Zero-shot transfer is when a sim-trained model goes straight onto the physical robot and works as intended, with no extra training or tuning on real data. It's the holy grail of sim-to-real work, saving tons of deployment time.

How do you model sensor noise effectively?

We spike the simulated sensors with Gaussian noise, drift, and dropouts. For instance, we might randomly zap 5% of LIDAR points or blur camera feeds to make sure the robot navigates safely even with glitchy data.
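A minimal sketch of that kind of corruption for a single LIDAR scan, assuming zero-mean Gaussian range noise and independent per-point dropouts. The function name, the 2 cm noise level, the 30 m max range, and the choice to mark dropped points as infinity are all assumptions for illustration; drift and camera blur are left out.

```python
import math
import random


def corrupt_lidar_scan(ranges_m, rng, noise_std_m=0.02, dropout_prob=0.05,
                       max_range_m=30.0):
    """Return a noisy copy of a scan: Gaussian range noise plus random dropouts."""
    noisy = []
    for r in ranges_m:
        if rng.random() < dropout_prob:
            # Dropped return, marked here as "no echo" (an assumed convention).
            noisy.append(math.inf)
        else:
            value = r + rng.gauss(0.0, noise_std_m)
            # Keep the reading inside the sensor's physical range.
            noisy.append(min(max(value, 0.0), max_range_m))
    return noisy


rng = random.Random(42)
clean_scan = [2.0, 2.1, 2.3, 5.0, 5.2, 12.0]  # hypothetical ranges in meters
print(corrupt_lidar_scan(clean_scan, rng))
```

The policy then trains on the corrupted scan instead of the clean one, so it never gets used to perfect data.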

Can Sim-to-Real handle complex interaction tasks?

Absolutely. Beyond basic navigation, it's key for mobile manipulation (like AGVs with arms). Robots learn to open doors, push carts, or handle different loads by training against simulated contact physics.

How accurate does the "Digital Twin" need to be?

Surprisingly, it doesn't have to look picture-perfect; it just needs solid physics. With heavy domain randomization, a robot can train in a rough, blocky sim environment and still perform well in reality, as long as collisions and masses are modeled right.

What are the cost benefits of this approach?

Yeah, there's upfront work to build the sim environment, but the long-term wins are huge. No more smashed prototypes, smaller testing spaces, and you can train thousands of virtual robots 24/7 in parallel.

How do we verify safety before real-world deployment?

We use a 'Safety Shield' setup for verification. Every command the neural net outputs passes through a hardcoded safety layer (built on collision-avoidance kinematics) that checks it and can override it before it reaches the motors. Plus, we stress-test the policy in worst-case sim scenarios before going to hardware.
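One simple way such a safety layer could work is a stopping-distance check: cap the commanded speed so the AGV can always brake before the nearest obstacle. The sketch below assumes forward-only motion, constant braking deceleration, and a single obstacle distance; `shield_speed` and its default values are hypothetical and not a certified safety function.

```python
import math


def shield_speed(cmd_speed_mps, obstacle_dist_m, max_decel_mps2=1.0, margin_m=0.3):
    """Clamp a forward speed command so the robot can stop before the obstacle.

    Uses v_max = sqrt(2 * a * d), where d is the free distance left after
    subtracting a safety margin.
    """
    free_dist = max(obstacle_dist_m - margin_m, 0.0)
    safe_speed = math.sqrt(2.0 * max_decel_mps2 * free_dist)
    # Negative commands are treated as "stop" in this forward-only sketch.
    return min(max(cmd_speed_mps, 0.0), safe_speed)


# The policy asks for 2.0 m/s in each case; the shield decides what gets through.
for dist in (10.0, 0.8, 0.2):
    print(f"obstacle at {dist} m -> allowed speed {shield_speed(2.0, dist):.2f} m/s")
```

Because the shield is plain kinematics rather than a learned model, it can be reviewed and tested independently of the policy it guards.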